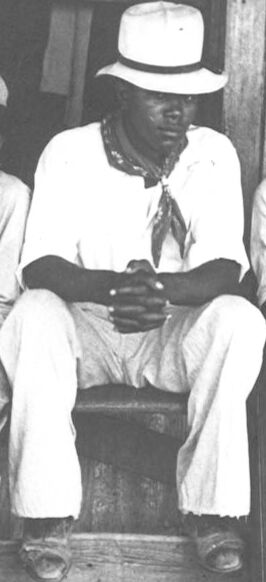

Machine Generated Data

Tags

Amazon

created on 2023-10-05

| Tag | Confidence (%) |
|---|---|
| Clothing | 100 |
| Sitting | 99.8 |
| Person | 99.4 |
| Adult | 99.4 |
| Male | 99.4 |
| Man | 99.4 |
| Sun Hat | 99.4 |
| Person | 99.3 |
| Adult | 99.3 |
| Male | 99.3 |
| Man | 99.3 |
| Person | 99.2 |
| Male | 99.2 |
| Boy | 99.2 |
| Child | 99.2 |
| Person | 99.1 |
| Adult | 99.1 |
| Male | 99.1 |
| Man | 99.1 |
| Person | 97.4 |
| Baby | 97.4 |
| Face | 97 |
| Head | 97 |
| Person | 96.5 |
| Person | 93 |
| Adult | 93 |
| Male | 93 |
| Man | 93 |
| Footwear | 91.7 |
| Shoe | 91.7 |
| Shoe | 91.1 |
| Photography | 84.9 |
| Hat | 84.8 |
| Portrait | 82.3 |
| Reading | 82 |
| Shoe | 81.9 |
| Jeans | 69.4 |
| Pants | 69.4 |
| Shoe | 64.1 |
| Furniture | 62.9 |
| Shoe | 60.3 |
| Shoe | 59.9 |
| Coat | 56.9 |
| Cap | 56.9 |
| Bench | 55.7 |
| Couch | 55.6 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-05

| Tag | Confidence (%) |
|---|---|
| electric chair | 36.4 |
| device | 31.9 |
| instrument of execution | 29.4 |
| barbershop | 28.3 |
| man | 28.2 |
| shop | 24.8 |
| male | 24.1 |
| people | 22.9 |
| instrument | 22.8 |
| person | 20.4 |
| mercantile establishment | 19.6 |
| washboard | 18.7 |
| adult | 17.7 |
| portrait | 14.9 |
| men | 13.7 |
| place of business | 13.1 |
| black | 12.6 |
| fashion | 11.3 |
| travel | 11.3 |
| old | 11.1 |
| chair | 11.1 |
| art | 11 |
| musical instrument | 10.7 |
| hat | 10.7 |
| worker | 10.5 |
| mask | 10.3 |
| work | 10.3 |
| sculpture | 10.3 |
| smile | 10 |
| sexy | 9.6 |
| sitting | 9.4 |
| happy | 9.4 |
| two | 9.3 |
| face | 9.2 |
| attractive | 9.1 |
| dress | 9 |
| religion | 9 |
| looking | 8.8 |
| statue | 8.6 |
| human | 8.2 |
| women | 7.9 |
| couple | 7.8 |
| happiness | 7.8 |
| culture | 7.7 |
| hand | 7.6 |
| city | 7.5 |
| one | 7.5 |
| vintage | 7.4 |
| tourism | 7.4 |
| wind instrument | 7.4 |
| clothing | 7.4 |
| emotion | 7.4 |
| uniform | 7.3 |
| business | 7.3 |
| protection | 7.3 |
| industrial | 7.3 |
| smiling | 7.2 |
| history | 7.2 |

Google

created on 2018-05-10

| Tag | Confidence (%) |
|---|---|
| photograph | 95.8 |
| black and white | 84.9 |
| snapshot | 81.8 |
| human behavior | 81.6 |
| standing | 80.4 |
| sitting | 78.2 |
| vintage clothing | 69.1 |
| monochrome photography | 66.6 |
| family | 63.3 |
| monochrome | 57.8 |
| gentleman | 55.7 |

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 6-12 |
| Gender | Female, 99.9% |
| Sad | 100% |
| Calm | 11.7% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Angry | 0.1% |
| Confused | 0.1% |
| Happy | 0% |
| Disgusted | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 27-37 |
| Gender | Female, 99.8% |
| Sad | 100% |
| Surprised | 6.4% |
| Fear | 6% |
| Calm | 6% |
| Confused | 0.5% |
| Disgusted | 0.3% |
| Happy | 0.3% |
| Angry | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 24-34 |
| Gender | Male, 100% |
| Calm | 88.9% |
| Surprised | 6.7% |
| Sad | 6.3% |
| Fear | 6% |
| Angry | 0.9% |
| Confused | 0.8% |
| Disgusted | 0.4% |
| Happy | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 6-16 |
| Gender | Male, 96.6% |
| Sad | 99.7% |
| Calm | 30.9% |
| Surprised | 6.3% |
| Fear | 6% |
| Confused | 0.3% |
| Angry | 0.1% |
| Disgusted | 0.1% |
| Happy | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-31 |
| Gender | Female, 98.9% |
| Confused | 72.7% |
| Surprised | 9% |
| Calm | 8.8% |
| Fear | 8.6% |
| Angry | 3.8% |
| Sad | 3.2% |
| Happy | 0.6% |
| Disgusted | 0.5% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 7 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 59 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 99.4% |
| Person | 99.3% |
| Person | 99.2% |
| Person | 99.1% |
| Person | 97.4% |
| Person | 96.5% |
| Person | 93% |
| Adult | 99.4% |
| Adult | 99.3% |
| Adult | 99.1% |
| Adult | 93% |
| Male | 99.4% |
| Male | 99.3% |
| Male | 99.2% |
| Male | 99.1% |
| Male | 93% |
| Man | 99.4% |
| Man | 99.3% |
| Man | 99.1% |
| Man | 93% |
| Boy | 99.2% |
| Child | 99.2% |
| Baby | 97.4% |
| Shoe | 91.7% |
| Shoe | 91.1% |
| Shoe | 81.9% |
| Shoe | 64.1% |
| Shoe | 60.3% |
| Shoe | 59.9% |
| Hat | 84.8% |
| Jeans | 69.4% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 94.3% |
| people portraits | 4.9% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a group of people sitting on a bench posing for the camera | 94.6% |
| a group of people sitting on a bench | 94.1% |
| a group of people sitting on a bench posing for a photo | 92.5% |