Machine Generated Data

Tags

Amazon

created on 2023-10-05

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-05

| Tag | Confidence |
| --- | --- |
| man | 38.3 |
| male | 31.2 |
| people | 26.2 |
| brass | 26 |
| person | 24.6 |
| adult | 20.1 |
| portrait | 20 |
| black | 19.9 |
| wind instrument | 19 |
| men | 18.9 |
| shop | 18 |
| business | 16.4 |
| device | 15.7 |
| face | 15.6 |
| cornet | 15.5 |
| businessman | 14.1 |
| hand | 13.7 |
| looking | 13.6 |
| musical instrument | 12.8 |
| work | 12.5 |
| mercantile establishment | 12.1 |
| mature | 12.1 |
| barbershop | 11.6 |
| serious | 11.4 |
| guy | 11.3 |
| hat | 10.8 |
| handsome | 10.7 |
| human | 10.5 |
| office | 10.3 |
| suit | 10 |
| old | 9.7 |
| technology | 9.6 |
| corporate | 9.4 |
| professional | 9.4 |
| weapon | 9.4 |
| happy | 9.4 |
| lifestyle | 9.4 |
| smile | 9.3 |
| gun | 9.1 |
| world | 8.9 |
| job | 8.8 |
| look | 8.8 |
| call | 8.7 |
| happiness | 8.6 |
| expression | 8.5 |
| casual | 8.5 |
| head | 8.4 |
| clothing | 8.4 |
| attractive | 8.4 |
| one | 8.2 |
| place of business | 8.1 |
| model | 7.8 |
| sitting | 7.7 |
| building | 7.6 |
| fashion | 7.5 |
| equipment | 7.5 |
| drink | 7.5 |
| smoke | 7.4 |
| style | 7.4 |
| occupation | 7.3 |
| lady | 7.3 |
| protection | 7.3 |
| worker | 7.2 |
| hair | 7.1 |
| indoors | 7 |
| restaurant | 7 |
| modern | 7 |

Google

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| photograph | 95.7 |
| black and white | 91.6 |
| monochrome photography | 79.3 |
| photography | 79.2 |
| monochrome | 70.9 |
| poster | 70.3 |
| human behavior | 65.8 |
| vintage clothing | 58.8 |
| stock photography | 52 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

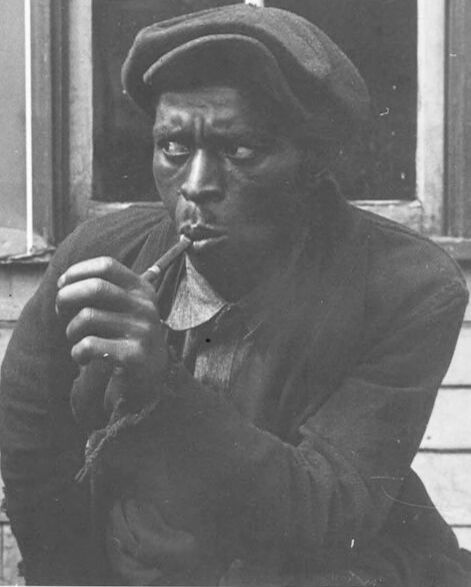

| Attribute | Value |
| --- | --- |
| Age | 36-44 |
| Gender | Male, 99.8% |
| Sad | 98.1% |
| Calm | 29% |
| Confused | 9.1% |
| Surprised | 7.1% |
| Fear | 6.7% |
| Angry | 1.6% |
| Disgusted | 1.3% |
| Happy | 1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-43 |
| Gender | Male, 99.1% |
| Calm | 98.3% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.3% |
| Happy | 0.4% |
| Angry | 0.3% |
| Disgusted | 0.2% |
| Confused | 0.1% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 73 |
| Gender | Male |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 47.5% |
| streetview architecture | 22.3% |
| interior objects | 16.3% |
| food drinks | 7.6% |
| text visuals | 4% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a black and white photo of a man | 83.7% |
| an old photo of a man | 83.6% |
| a man standing in front of a building | 80.4% |

Text analysis

Amazon

BEER

Lito