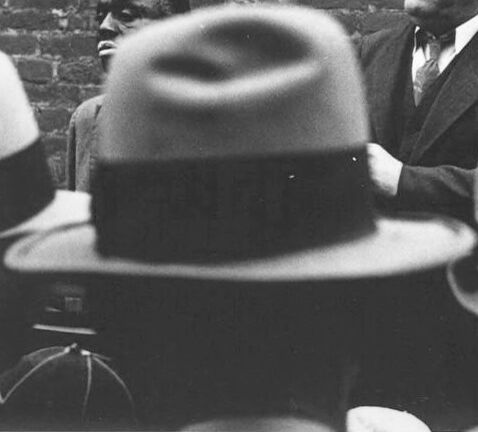

Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence |
|---|---|
| Clothing | 100 |
| Brick | 100 |
| Sun Hat | 100 |
| Adult | 98.4 |
| Male | 98.4 |
| Man | 98.4 |
| Person | 98.4 |
| Adult | 97.8 |
| Male | 97.8 |
| Man | 97.8 |
| Person | 97.8 |
| Adult | 92.9 |
| Male | 92.9 |
| Man | 92.9 |
| Person | 92.9 |
| Face | 92.6 |
| Head | 92.6 |
| Photography | 92.6 |
| Portrait | 92.6 |
| Coat | 89.7 |
| Hat | 80.9 |
| Hat | 80.7 |
| Adult | 78.7 |
| Male | 78.7 |
| Man | 78.7 |
| Person | 78.7 |
| Formal Wear | 77.3 |
| Suit | 77.3 |
| Person | 71.9 |
| Baby | 71.9 |
| Hat | 60.2 |
| Architecture | 56.1 |
| Building | 56.1 |
| Wall | 56.1 |
| Cowboy Hat | 55.4 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| Tag | Confidence |
|---|---|
| hat | 33 |
| cup | 30.8 |
| coffee | 19.9 |
| headdress | 18.2 |
| cowboy hat | 16.8 |
| drink | 16.7 |
| black | 16.4 |
| hot | 15.9 |
| person | 15.8 |
| beverage | 14.9 |
| people | 13.9 |
| man | 13.8 |
| sitting | 12.9 |
| clothing | 12.7 |
| saucer | 12.6 |
| male | 12.5 |
| tea | 12.4 |
| sombrero | 12 |
| business | 11.5 |
| brown | 11 |
| working | 10.6 |
| food | 10.3 |
| breakfast | 10 |
| office | 9.8 |
| table | 9.6 |
| work | 9.4 |
| aroma | 9.4 |
| traditional | 9.1 |
| happy | 8.8 |
| caffeine | 8.7 |
| child | 8.5 |
| portrait | 8.4 |
| dark | 8.3 |
| one | 8.2 |
| student | 8.1 |
| computer | 8 |
| close | 8 |
| businessman | 7.9 |
| attractive | 7.7 |
| mug | 7.7 |
| businesspeople | 7.6 |
| fashion | 7.5 |
| sugar | 7.5 |
| technology | 7.4 |
| laptop | 7.4 |
| tradition | 7.4 |
| espresso | 7.4 |
| morning | 7.2 |
| color | 7.2 |
| covering | 7.1 |

Google

created on 2018-05-10

| Tag | Confidence |
|---|---|
| white | 96.1 |
| photograph | 96.1 |
| black | 95.8 |
| black and white | 92.4 |
| photography | 83.9 |
| monochrome photography | 82.9 |
| snapshot | 81.8 |
| sitting | 77.4 |
| monochrome | 75.2 |
| vintage clothing | 67.9 |
| human behavior | 64.4 |
| gentleman | 54.6 |
| stock photography | 52 |

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 47-53 |
| Gender | Male, 100% |
| Calm | 84.2% |
| Sad | 14.1% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Confused | 0.9% |
| Angry | 0.3% |
| Happy | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-33 |
| Gender | Male, 99.7% |
| Calm | 39% |
| Disgusted | 19.3% |
| Fear | 14.9% |
| Surprised | 10.4% |
| Confused | 8.9% |
| Sad | 4.3% |
| Angry | 3.3% |
| Happy | 2.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 11-19 |
| Gender | Female, 80.9% |
| Sad | 94.4% |
| Disgusted | 23.5% |
| Fear | 10.3% |
| Happy | 8.2% |
| Surprised | 7.1% |
| Calm | 6.2% |
| Confused | 2.6% |
| Angry | 2.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 19-27 |
| Gender | Female, 87.7% |
| Sad | 80.3% |
| Calm | 25.9% |
| Happy | 15.5% |
| Fear | 9% |
| Confused | 8.2% |
| Surprised | 7.4% |
| Angry | 2.8% |
| Disgusted | 2.1% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 48 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 36 |
| Gender | Male |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| interior objects | 97.9% |
| food drinks | 1.7% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a person sitting at a table in front of a window | 76.8% |
| a person sitting in front of a window | 69.5% |
| a person sitting on a table | 69.4% |