Machine Generated Data

Tags

Amazon

created on 2023-10-07

| Tag | Confidence |
| --- | --- |
| Face | 100 |
| Head | 100 |
| Photography | 100 |
| Portrait | 100 |
| Person | 99.3 |
| Adult | 99.3 |
| Male | 99.3 |
| Man | 99.3 |
| Person | 99.2 |
| Baby | 99.2 |
| Person | 99.2 |
| Adult | 99.2 |
| Male | 99.2 |
| Man | 99.2 |
| Blouse | 96.8 |
| Clothing | 96.8 |
| People | 91 |
| Wood | 87 |
| Person | 84.6 |
| Door | 78.2 |
| Architecture | 76.2 |
| Building | 76.2 |
| Outdoors | 76.2 |
| Shelter | 76.2 |
| Furniture | 72.1 |
| Closet | 57.1 |
| Cupboard | 57.1 |
| Accessories | 56.8 |
| Earring | 56.8 |
| Jewelry | 56.8 |
| Housing | 56.4 |
| Dress | 56.1 |
| Window | 56.1 |
| Art | 56 |
| Painting | 56 |
| Happy | 55.8 |
| Smile | 55.8 |
| Plywood | 55.3 |
| Lady | 55.2 |

Clarifai

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| people | 100 |
| two | 99.6 |
| adult | 99.6 |
| man | 98 |
| group | 96.5 |
| three | 96.2 |
| wear | 95.5 |
| one | 95.2 |
| administration | 94.9 |
| portrait | 94.7 |
| woman | 93.3 |
| facial expression | 92 |
| furniture | 91.2 |
| group together | 90.6 |
| leader | 89.7 |
| four | 88 |
| actress | 87.7 |
| actor | 87.3 |
| medical practitioner | 87 |
| music | 83.3 |

Imagga

created on 2023-10-07

| Tag | Confidence |
| --- | --- |
| portrait | 26.5 |
| people | 24.6 |
| adult | 22.7 |
| sexy | 21.7 |
| person | 21.1 |
| hair | 20.6 |
| pretty | 19.6 |
| attractive | 19.6 |
| dress | 19 |
| fashion | 18.9 |
| man | 18.8 |
| love | 17.4 |
| face | 15.6 |
| model | 15.6 |
| bride | 15.4 |
| male | 15.2 |
| couple | 14.8 |
| world | 14.6 |
| black | 14.6 |
| happy | 14.4 |
| cute | 13.6 |
| sensuality | 13.6 |
| old | 13.2 |
| posing | 12.4 |
| call | 12.1 |
| room | 12.1 |
| wedding | 12 |
| window | 11.5 |
| lady | 11.4 |
| child | 11.3 |
| youth | 11.1 |
| women | 11.1 |
| elegance | 10.9 |
| human | 10.5 |
| brunette | 10.5 |
| looking | 10.4 |
| body | 10.4 |
| groom | 10.4 |
| style | 10.4 |
| home | 10.4 |
| happiness | 10.2 |
| sensual | 10 |
| gorgeous | 10 |
| vintage | 9.9 |
| mother | 9.8 |
| newspaper | 9.6 |
| skin | 9.6 |
| smiling | 9.4 |
| two | 9.3 |
| relaxation | 9.2 |
| house | 9.2 |
| romantic | 8.9 |
| family | 8.9 |
| look | 8.8 |
| sepia | 8.7 |
| sculpture | 8.7 |
| ancient | 8.7 |
| glamor | 8.6 |
| smile | 8.6 |
| wall | 8.6 |
| clothing | 8.5 |
| relationship | 8.4 |
| one | 8.2 |
| make | 8.2 |
| lifestyle | 8 |
| art | 7.9 |
| bridal | 7.8 |
| sitting | 7.7 |
| culture | 7.7 |
| married | 7.7 |
| hand | 7.6 |
| head | 7.6 |
| decoration | 7.4 |
| light | 7.4 |
| makeup | 7.3 |
| history | 7.2 |
| romance | 7.1 |

Google

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| photograph | 96.3 |
| standing | 91.4 |
| black and white | 88.7 |
| snapshot | 81.8 |
| vintage clothing | 73.5 |
| portrait | 71.9 |
| monochrome photography | 71.4 |
| monochrome | 64 |
| picture frame | 63.5 |
| history | 61.4 |
| gentleman | 57.6 |
| artwork | 54 |
| family | 54 |
| stock photography | 53.7 |
| drawing | 50.4 |

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 48-56 |
| Gender | Female, 97.9% |
| Happy | 98.2% |
| Surprised | 6.4% |
| Fear | 6% |
| Sad | 2.2% |
| Angry | 0.8% |
| Disgusted | 0.1% |
| Calm | 0.1% |
| Confused | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 0-6 |
| Gender | Female, 88.2% |
| Sad | 99.7% |
| Surprised | 12.5% |
| Confused | 9.4% |
| Calm | 8.3% |
| Fear | 6.2% |
| Angry | 2.8% |
| Disgusted | 1.1% |
| Happy | 0.2% |

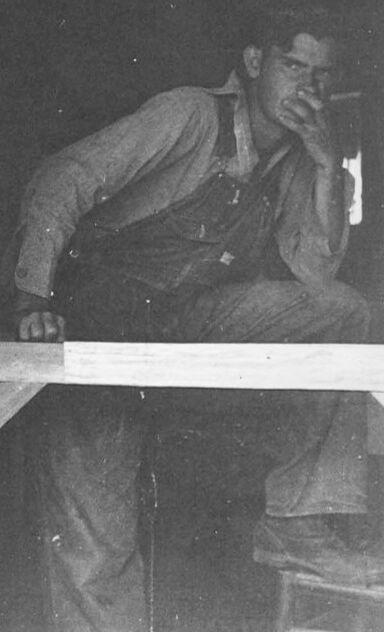

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-36 |
| Gender | Male, 100% |
| Calm | 97.3% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 1.6% |
| Angry | 0.4% |
| Disgusted | 0.1% |
| Happy | 0.1% |

Microsoft Cognitive Services

| Age | 54 |

| Gender | Male |

Microsoft Cognitive Services

| Age | 54 |

| Gender | Male |

Microsoft Cognitive Services

| Age | 5 |

| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Imagga

| Traits | no traits identified |

Imagga

| Traits | no traits identified |

Imagga

| Traits | no traits identified |

Feature analysis

Categories

Imagga

| paintings art | 99.8% |

Captions

Microsoft

created by unknown on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a black and white photo of a person | 88.3% |
| a vintage photo of a person | 88.2% |
| an old black and white photo of a person | 85.8% |

Clarifai

created by general-english-image-caption-blip on 2025-05-15

| a photograph of a woman holding a baby in a doorway | -100% |

Google Gemini

Created by gemini-2.0-flash on 2025-05-09

The black-and-white photograph depicts a mother and child standing near a doorway, with a man smoking a cigarette visible through another doorway.

On the left side of the image, a woman stands near a wooden wall, holding a young child in her arms. The woman is smiling, and the child has blonde hair and is holding a white cloth. The woman is wearing a dress and a white apron.

To the right, a man sits inside another doorway, smoking a cigarette. He is wearing overalls and a long-sleeved shirt. The man appears to be looking off to the side, and his expression is difficult to discern due to the quality of the image.

The overall setting appears to be a modest home or dwelling. The composition of the photograph divides the scene into two distinct areas: the mother and child in the foreground, and the man in the background, smoking.

Created by gemini-2.0-flash-lite on 2025-05-09

Here's a description of the image:

The black and white photograph shows a woman holding a young child, while a man sits in the background.

In the foreground, the woman stands centered in the frame, holding a small child in her arms. The woman wears a simple, sleeveless dress and a white apron. She has a kind smile on her face. The child is lightly clothed and is wrapped in a towel. They appear to be outdoors, with a wooden wall behind them.

In the background, to the right, a man is seated. He appears to be wearing work clothes and has a cigarette in his mouth. The man is positioned within what appears to be a simple frame, possibly a doorway or porch. The lighting in this area is much darker.