Machine Generated Data

Tags

Amazon

created on 2023-10-07

Clarifai

created on 2018-05-10

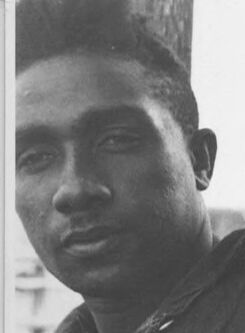

| people | 99.9 |
| adult | 98.9 |
| portrait | 98.4 |
| man | 98.2 |
| monochrome | 97.8 |
| two | 96.9 |
| one | 96.3 |
| wear | 95.8 |
| street | 93.7 |
| coat | 92 |
| outerwear | 91.8 |
| group | 86.7 |
| administration | 84.7 |
| veil | 80.2 |
| outfit | 79.6 |
| three | 79.1 |
| group together | 78.3 |
| leader | 77 |
| black and white | 76.8 |
| woman | 76.1 |

Imagga

created on 2023-10-07

Google

created on 2018-05-10

| photograph | 96 |
| black | 95.3 |
| person | 94.1 |
| black and white | 91.3 |
| photography | 83.8 |
| monochrome photography | 83.8 |
| snapshot | 81.8 |
| standing | 75.4 |
| human | 68.7 |
| monochrome | 66.7 |
| vintage clothing | 60.8 |
| portrait | 51.9 |
| stock photography | 50.3 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

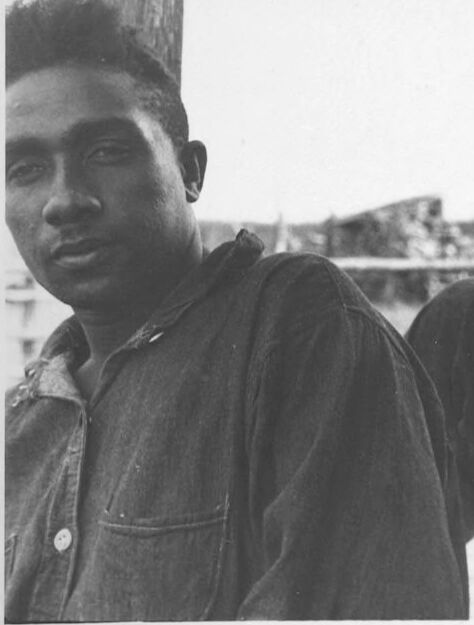

| Age | 24-34 |
| Gender | Male, 100% |
| Calm | 95.7% |
| Surprised | 6.4% |
| Fear | 6% |
| Confused | 2.3% |
| Sad | 2.2% |
| Angry | 0.6% |
| Disgusted | 0.4% |
| Happy | 0.2% |

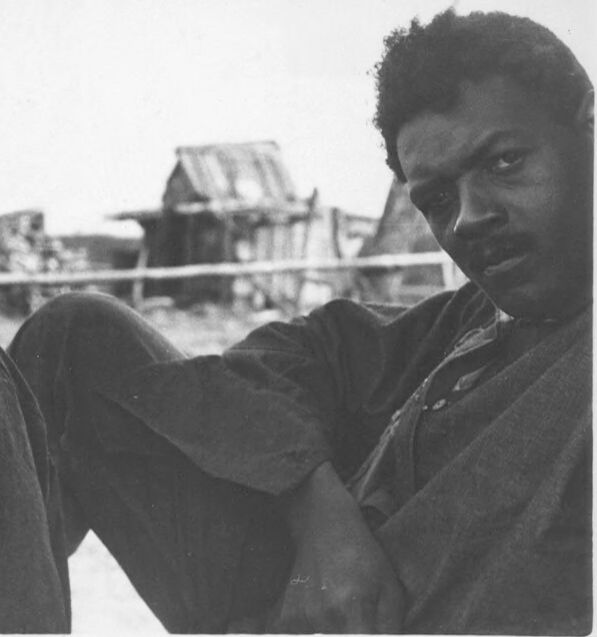

AWS Rekognition

| Age | 25-35 |
| Gender | Male, 99.7% |
| Calm | 98.1% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.4% |
| Confused | 0.2% |
| Angry | 0.2% |
| Happy | 0.1% |
| Disgusted | 0.1% |

Microsoft Cognitive Services

| Age | 30 |
| Gender | Male |

Microsoft Cognitive Services

| Age | 38 |
| Gender | Male |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| paintings art | 99.4% |

Captions

Microsoft

created on 2018-05-10

| a black and white photo of a man | 96.8% |
| an old black and white photo of a man | 95.3% |
| a vintage photo of a man | 95.2% |