Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence |
|---|---|
| Adult | 99.3 |
| Male | 99.3 |
| Man | 99.3 |
| Person | 99.3 |
| Clothing | 93.6 |
| Hat | 93.6 |
| Animal | 93.5 |
| Horse | 93.5 |
| Mammal | 93.5 |
| Outdoors | 92.3 |
| Nature | 88.5 |
| Person | 87.4 |
| Soil | 78.3 |
| Garden | 77.7 |
| Countryside | 75 |
| Face | 71 |
| Head | 71 |
| Transportation | 66.5 |
| Vehicle | 66.5 |
| Gardener | 63.7 |
| Gardening | 63.7 |
| Machine | 63.2 |
| Wheel | 63.2 |
| Rural | 57.9 |
| Colt Horse | 56.2 |
| Sun Hat | 55.7 |
| Farm | 55.5 |
| Wagon | 55.1 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| Tag | Confidence |
|---|---|
| vessel | 69.6 |
| bucket | 63 |
| container | 40.2 |
| tool | 23.2 |
| outdoor | 21.4 |
| outdoors | 21 |
| shovel | 18.9 |
| park | 18.9 |
| people | 16.2 |
| rake | 15.9 |
| old | 13.2 |
| water | 12.7 |
| autumn | 12.3 |
| summer | 12.2 |
| tree | 12 |
| garden | 11.7 |
| leisure | 11.6 |
| man | 11.4 |
| child | 11 |
| cleaner | 10.9 |
| fall | 10.9 |
| male | 10.7 |
| person | 10.4 |
| tub | 10.3 |
| boat | 10.3 |
| outside | 10.3 |
| swing | 9.5 |
| grass | 9.5 |
| work | 9.5 |
| play | 9.5 |
| happy | 9.4 |
| hand tool | 9.4 |
| barrow | 9.3 |
| fun | 9 |
| recreation | 9 |
| activity | 8.9 |
| childhood | 8.9 |
| trees | 8.9 |
| lifestyle | 8.7 |
| spring | 8.6 |
| holiday | 8.6 |
| adult | 8.4 |
| wood | 8.3 |
| countryside | 8.2 |
| vacation | 8.2 |
| landscape | 8.2 |
| active | 8.1 |
| sunset | 8.1 |
| kid | 8 |
| portrait | 7.8 |
| travel | 7.7 |
| gardening | 7.6 |
| relax | 7.6 |
| sky | 7 |
| ||

Google

created on 2018-05-10

| Tag | Confidence |
|---|---|
| photograph | 95.4 |
| black | 95 |
| black and white | 92.3 |
| snapshot | 81.8 |
| monochrome photography | 80 |
| tree | 76.4 |
| monochrome | 71.4 |
| human behavior | 62.7 |
| stock photography | 55 |
| vintage clothing | 53.4 |

Color Analysis

Face analysis


AWS Rekognition

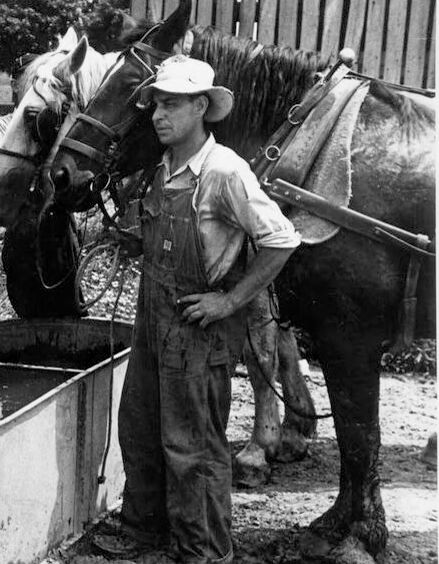

| Attribute | Estimate |
|---|---|
| Age | 39-47 |
| Gender | Male, 99% |
| Calm | 99.8% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.1% |
| Confused | 0% |
| Disgusted | 0% |
| Happy | 0% |

Microsoft Cognitive Services

| Attribute | Estimate |
|---|---|
| Age | 51 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 97% |
| streetview architecture | 1.4% |
| people portraits | 1.2% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a black and white photo of a man | 84.3% |
| an old photo of a man | 84.2% |
| old photo of a man | 84.1% |