Machine Generated Data

Tags

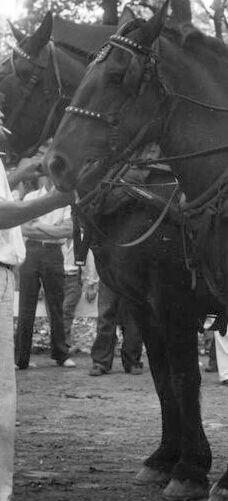

Amazon

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| Adult | 98.8 |
| Male | 98.8 |
| Man | 98.8 |
| Person | 98.8 |
| Adult | 98.5 |
| Male | 98.5 |
| Man | 98.5 |
| Person | 98.5 |
| Person | 98.5 |
| Person | 96.2 |
| Animal | 94 |
| Horse | 94 |
| Mammal | 94 |
| Person | 94 |
| Person | 91.5 |
| Person | 85.6 |
| Clothing | 82.8 |
| Footwear | 82.8 |
| Shoe | 82.8 |
| Person | 81.3 |
| Shoe | 77.9 |
| Person | 76.4 |
| Person | 75.4 |
| Horse | 70.9 |
| Shoe | 70.5 |
| Person | 68.6 |
| Outdoors | 65.9 |
| Person | 62.2 |
| Hat | 59.3 |
| Andalusian Horse | 57.5 |
| Stallion | 56.4 |
| Nature | 55.8 |
| Transportation | 55.8 |
| Vehicle | 55.8 |
| Wagon | 55.8 |
| Colt Horse | 55.5 |
| Person | 55.2 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| horse | 76.7 |
| harness | 67.9 |
| plow | 48.4 |
| horses | 46.8 |
| horse cart | 42.3 |
| animal | 39.5 |
| cart | 36.5 |
| tool | 34.8 |
| carriage | 31.7 |
| riding | 30.2 |
| wagon | 29.7 |
| support | 29.6 |
| farm | 28.5 |
| equestrian | 27.5 |
| stallion | 26.4 |
| brown | 25 |
| animals | 23.2 |
| equine | 22.7 |
| device | 22.6 |
| bridle | 20.6 |
| mammal | 19.8 |
| sport | 19.8 |
| grass | 19.8 |
| rural | 19.4 |
| mane | 18.6 |
| ranch | 18 |
| ride | 17.5 |
| saddle | 16.9 |
| wheeled vehicle | 16.5 |
| head | 16 |
| field | 15.9 |
| horseback | 15.8 |
| mare | 15.7 |
| outdoors | 15.7 |
| cowboy | 15.4 |
| rider | 14.7 |
| competition | 14.6 |
| stable gear | 14.3 |
| race | 13.4 |
| pet | 12.9 |
| jockey | 12.8 |
| gear | 12.3 |
| summer | 10.9 |
| racing | 10.8 |
| fence | 10.6 |
| pasture | 10.5 |
| friend | 10.5 |
| stable | 9.8 |
| vehicle | 9.7 |
| agriculture | 9.7 |
| black | 9.6 |
| man | 9.4 |
| outdoor | 9.2 |
| speed | 9.2 |
| team | 9 |
| meadow | 9 |
| trees | 8.9 |
| western | 8.7 |
| running | 8.6 |
| dirt | 8.6 |
| sports | 8.3 |
| sky | 8.3 |
| fun | 8.2 |
| active | 8.1 |
| farmer | 8 |
| country | 7.9 |
| dressage | 7.9 |
| hay | 7.8 |
| cow | 7.8 |
| equipment | 7.7 |
| culture | 7.7 |
| tail | 7.7 |
| two | 7.6 |
| show | 7.6 |
| tourism | 7.4 |
| action | 7.4 |
| color | 7.2 |
| rein | 7.2 |
| transportation | 7.2 |
| activity | 7.2 |

Google

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| horse | 94.3 |
| black and white | 91.8 |
| horse like mammal | 88.9 |
| horse harness | 88.4 |
| pack animal | 82.1 |
| monochrome photography | 79 |
| monochrome | 67.2 |
| livestock | 60.6 |
| rein | 58.9 |
| stallion | 58.7 |
| horse tack | 55.4 |
| mane | 55 |
| stock photography | 52.7 |
| horse supplies | 51.3 |

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-43 |
| Gender | Male, 99.6% |
| Happy | 88.5% |
| Surprised | 8.9% |
| Fear | 6.2% |
| Angry | 4.5% |
| Sad | 2.4% |
| Confused | 0.4% |
| Calm | 0.3% |
| Disgusted | 0.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 11-19 |
| Gender | Female, 81.4% |
| Sad | 66.6% |
| Calm | 23.3% |
| Surprised | 14.3% |
| Fear | 13.2% |
| Angry | 9.3% |
| Confused | 5.5% |
| Disgusted | 3.6% |
| Happy | 2.8% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-31 |
| Gender | Male, 94.5% |
| Sad | 99.9% |
| Calm | 23.1% |
| Surprised | 6.5% |
| Fear | 6% |
| Angry | 0.7% |
| Happy | 0.6% |
| Confused | 0.6% |
| Disgusted | 0.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-30 |
| Gender | Male, 97.8% |
| Calm | 90.2% |
| Surprised | 6.4% |
| Fear | 6.1% |
| Angry | 5.8% |
| Sad | 2.6% |
| Confused | 1.1% |
| Disgusted | 0.4% |
| Happy | 0.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 6-14 |
| Gender | Male, 88.1% |
| Surprised | 66% |
| Disgusted | 16.8% |
| Fear | 12.5% |
| Sad | 10.5% |
| Angry | 10% |
| Calm | 6% |
| Confused | 3% |
| Happy | 1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 6-16 |
| Gender | Female, 62.7% |
| Calm | 77.5% |
| Surprised | 8.7% |
| Fear | 7.8% |
| Confused | 4.3% |
| Angry | 3.7% |
| Sad | 2.9% |
| Disgusted | 2.7% |
| Happy | 0.9% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 24-34 |
| Gender | Male, 55.4% |
| Calm | 87.9% |
| Surprised | 6.5% |
| Fear | 6% |
| Happy | 3.4% |
| Sad | 3.3% |
| Confused | 2.1% |
| Angry | 1.5% |
| Disgusted | 1.2% |

Feature analysis

Amazon

Adult

Male

Man

Person

Horse

Shoe

Hat

Categories

Imagga

| Category | Confidence |
| --- | --- |
| nature landscape | 93.9% |
| pets animals | 5.4% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a man riding a horse drawn carriage | 95.1% |
| a man on a horse drawn carriage | 95% |
| a man standing next to a horse drawn carriage | 94.9% |

Text analysis

Amazon

FAIR

CO.

UNTON CO. FAIR

UNTON

MARTSVILLE

23-79

0. FAIR

0.

FAIR