Machine Generated Data

Tags

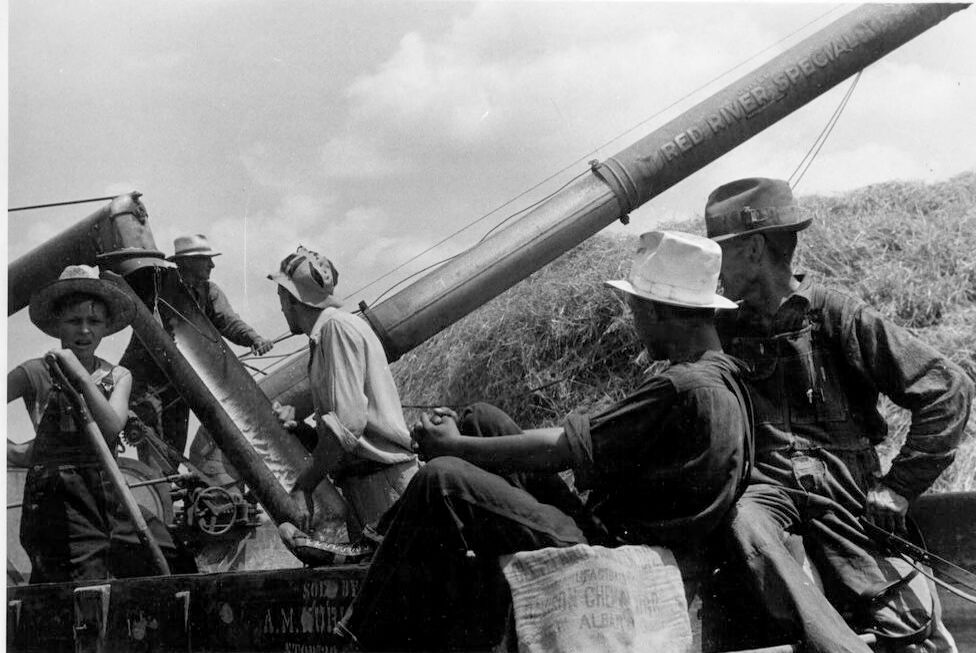

Amazon

created on 2023-10-06

| Adult | 99.1 |
| Male | 99.1 |
| Man | 99.1 |
| Person | 99.1 |
| Adult | 98.7 |
| Male | 98.7 |
| Man | 98.7 |
| Person | 98.7 |
| Adult | 98.4 |
| Male | 98.4 |
| Man | 98.4 |
| Person | 98.4 |
| War | 97.6 |
| Male | 97.5 |
| Person | 97.5 |
| Boy | 97.5 |
| Child | 97.5 |
| Adult | 97.2 |
| Male | 97.2 |
| Man | 97.2 |
| Person | 97.2 |
| Weapon | 95.6 |
| Artillery | 91.2 |
| Clothing | 90.7 |
| Face | 80.5 |
| Head | 80.5 |
| Aircraft | 66.5 |
| Airplane | 66.5 |
| Transportation | 66.5 |
| Vehicle | 66.5 |
| Hat | 56.7 |
| Worker | 55.1 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-06

| cannon | 100 |
| gun | 84 |
| artillery | 81.5 |
| weapon | 66.7 |
| armament | 53.1 |
| field artillery | 51.9 |
| weaponry | 41.3 |
| high-angle gun | 34.3 |
| military | 32.8 |
| war | 31.1 |
| army | 27.3 |
| tank | 25.3 |
| sky | 24.2 |
| battle | 22.5 |
| soldier | 20.5 |
| history | 18.8 |
| camouflage | 18.6 |
| power | 18.5 |
| industry | 17.9 |
| danger | 17.3 |
| vehicle | 16.6 |
| machine | 16.2 |
| defense | 13.6 |
| building | 13.5 |
| male | 13.5 |
| man | 13.4 |
| equipment | 13 |
| construction | 12.8 |
| industrial | 12.7 |
| outdoors | 12.7 |
| long tom | 12.5 |
| city | 12.5 |
| old | 11.8 |
| barrel | 11.8 |
| architecture | 11.7 |
| steel | 11.5 |
| protection | 10.9 |
| armor | 10.8 |
| forces | 10.8 |
| transportation | 10.8 |
| conflict | 10.7 |
| heavy | 10.5 |
| metal | 10.5 |
| historical | 10.3 |
| smoke | 10.2 |
| fighting | 9.8 |
| gunnery | 9.6 |
| wheel | 9.4 |
| monument | 9.3 |
| tourism | 9.1 |
| tower | 9 |
| landscape | 8.9 |
| turret | 8.9 |
| warfare | 8.9 |
| combat | 8.8 |
| armed | 8.8 |
| steam | 8.7 |
| fight | 8.7 |
| protect | 8.7 |
| track | 8.7 |
| mask | 8.6 |
| arms | 8.6 |
| travel | 8.4 |
| environment | 8.2 |
| world | 8 |
| mission | 7.9 |
| crane | 7.8 |
| target | 7.8 |
| victory | 7.8 |
| truck | 7.7 |
| rifle | 7.6 |
| bridge | 7.6 |
| safety | 7.4 |
| historic | 7.3 |
| grass | 7.1 |

Google

created on 2018-05-10

| motor vehicle | 91.7 |
| black and white | 91 |
| monochrome photography | 78.5 |
| vehicle | 65.9 |
| monochrome | 61.7 |
| stock photography | 59.8 |
| militia | 57.8 |
| troop | 53.6 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Age | 7-17 |
| Gender | Male, 78.7% |
| Angry | 48.6% |
| Disgusted | 19% |
| Confused | 13.3% |
| Calm | 10% |
| Surprised | 6.9% |
| Fear | 6.6% |
| Sad | 4.6% |
| Happy | 0.5% |

AWS Rekognition

| Age | 41-49 |
| Gender | Male, 97.9% |
| Sad | 39% |
| Calm | 36.2% |
| Surprised | 17.1% |
| Angry | 9.9% |
| Fear | 6.6% |
| Disgusted | 5.3% |
| Confused | 5.2% |
| Happy | 4.8% |

Microsoft Cognitive Services

| Age | 64 |
| Gender | Female |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

Categories

Imagga

| paintings art | 97.5% |

Captions

Microsoft

created on 2018-05-10

| a vintage photo of a person | 87.9% |
| a vintage photo of a baseball field | 69.7% |
| a black and white photo of a person | 69.6% |

Text analysis

Amazon

RIVER

A.M.

ALB

sole

LIVRED RIVER ESPECIALSU

A.M. with

sole BY

LIVRED

BY

with

ESPECIALSU

CHEWA