Machine Generated Data

Tags

Amazon

created on 2023-10-05

| Tag | Confidence |
|---|---|
| Photography | 99.8 |
| Person | 99 |
| Person | 98.7 |
| Person | 98.3 |
| Adult | 98.3 |
| Male | 98.3 |
| Man | 98.3 |
| Clothing | 98.2 |
| Hat | 98.2 |
| Person | 97.8 |
| Child | 97.8 |
| Female | 97.8 |
| Girl | 97.8 |
| Person | 93.3 |
| Face | 93 |
| Head | 93 |
| Portrait | 93 |
| Skirt | 92 |
| Shorts | 83.4 |
| Person | 72.6 |
| Adult | 72.6 |
| Male | 72.6 |
| Man | 72.6 |
| Urban | 72.1 |
| City | 63.6 |
| Shop | 61.3 |
| Dress | 57.7 |
| Slum | 57.1 |
| Undershirt | 56.1 |
| Shirt | 55.8 |
| Road | 55.4 |
| Street | 55.4 |

Clarifai

created on 2018-05-10

| Tag | Confidence |
|---|---|
| people | 100 |
| adult | 99.3 |
| group | 99.1 |
| monochrome | 98.5 |
| man | 98.3 |
| woman | 97.5 |
| two | 97.3 |
| wear | 97 |
| music | 96.5 |
| transportation system | 96.4 |
| group together | 95.6 |
| vehicle | 95.4 |
| several | 92.1 |
| three | 91.9 |
| child | 90.6 |
| recreation | 90.4 |
| instrument | 88.8 |
| coverage | 86.8 |
| musician | 84.6 |
| actor | 81.7 |

Imagga

created on 2023-10-05

| Tag | Confidence |
|---|---|
| people | 29 |
| person | 24.7 |
| adult | 23.1 |
| man | 21.5 |
| black | 17.5 |
| happy | 16.9 |
| leisure | 16.6 |
| male | 16.4 |
| portrait | 16.2 |
| lady | 14.6 |
| men | 14.6 |
| fashion | 14.3 |
| lifestyle | 13.7 |
| shop | 13.6 |
| love | 12.6 |
| smiling | 12.3 |
| one | 11.9 |
| outdoors | 11.9 |
| attractive | 11.9 |
| city | 11.6 |
| modern | 11.2 |
| pretty | 11.2 |
| sitting | 11.2 |
| women | 11.1 |
| casual | 11 |
| urban | 10.5 |
| looking | 10.4 |
| billboard | 10.2 |
| day | 10.2 |
| world | 10 |
| smile | 10 |
| dress | 9.9 |
| old | 9.7 |
| fun | 9.7 |
| style | 9.6 |
| sexy | 9.6 |
| couple | 9.6 |
| mercantile establishment | 9.5 |
| school | 9.5 |
| happiness | 9.4 |
| youth | 9.4 |
| sport | 9.3 |
| indoor | 9.1 |
| holding | 9.1 |
| human | 9 |
| adults | 8.5 |
| two | 8.5 |
| signboard | 8.3 |
| room | 8.3 |
| vacation | 8.2 |
| fitness | 8.1 |
| seller | 8 |
| water | 8 |
| clothing | 7.9 |
| standing | 7.8 |
| face | 7.8 |
| model | 7.8 |
| performer | 7.7 |
| enjoy | 7.5 |
| structure | 7.5 |
| dance | 7.4 |
| guy | 7.4 |
| classroom | 7.4 |
| light | 7.3 |
| teenager | 7.3 |
| road | 7.2 |
| handsome | 7.1 |
| posing | 7.1 |
| travel | 7 |

Google

created on 2018-05-10

| Tag | Confidence |
|---|---|
| photograph | 95.8 |
| black | 95.4 |
| black and white | 91.8 |
| monochrome photography | 82.8 |
| snapshot | 81.8 |
| standing | 81.6 |
| photography | 80.4 |
| monochrome | 68.2 |
| human behavior | 61.7 |
| vintage clothing | 59.3 |
| product | 52.4 |
| stock photography | 52.3 |
| pattern | 51.8 |

Microsoft

created on 2018-05-10

| Tag | Confidence |
|---|---|
| person | 98.5 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-33 |
| Gender | Female, 99.9% |
| Happy | 99.3% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.2% |
| Disgusted | 0.1% |
| Calm | 0.1% |
| Confused | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-36 |
| Gender | Female, 61.8% |
| Happy | 95.2% |
| Surprised | 6.9% |
| Fear | 6% |
| Sad | 2.3% |
| Calm | 1.5% |
| Angry | 0.9% |
| Disgusted | 0.2% |
| Confused | 0.1% |

Feature analysis

Categories

Imagga

| Category | Score |
|---|---|
| streetview architecture | 53.9% |
| paintings art | 21% |
| people portraits | 15.8% |
| nature landscape | 2.8% |
| pets animals | 2.4% |
| events parties | 2.1% |

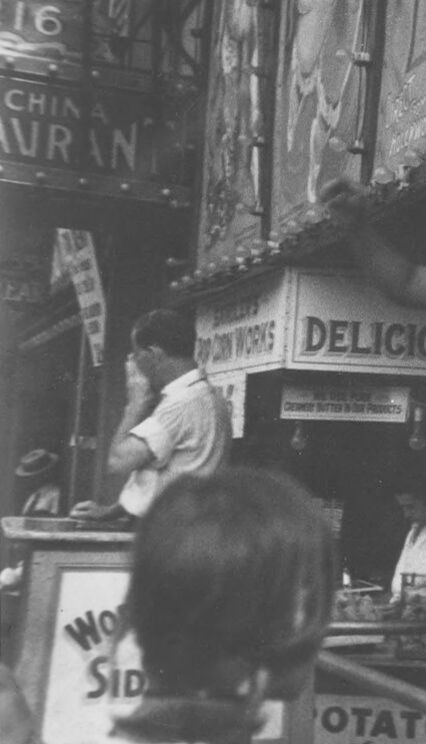

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a man and a woman standing in front of a store | 69.2% |
| a group of people standing in front of a store | 69.1% |
| a person standing in front of a store | 69% |

Text analysis

Amazon

WORKS

CHIN

OTATO

CHIN A

DELICIOU

A

SID

PRODUCTS

Wo

16

LLKI

BOTTER

AVR

HOLLYWOOD

CERVE BOTTER PRODUCTS

THE

300 CORN WORKS

AVR PAN'

PAN'

CORN

WIDE

FRI THE PSS

to

300

CERVE

PSS

FRI

I 6

URAN

DELICi

OTAT

I

6

URAN

DELICi

OTAT