Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence (%) |
| --- | --- |
| Clothing | 99.5 |
| Hat | 99.5 |
| Adult | 99.1 |
| Male | 99.1 |
| Man | 99.1 |
| Person | 99.1 |
| Adult | 98.8 |
| Male | 98.8 |
| Man | 98.8 |
| Person | 98.8 |
| Adult | 98.2 |
| Male | 98.2 |
| Man | 98.2 |
| Person | 98.2 |
| Jeans | 96.5 |
| Pants | 96.5 |
| Coat | 96.5 |
| Person | 95.8 |
| Person | 95.6 |
| Person | 95.3 |
| Person | 94.7 |
| Person | 93.8 |
| Person | 93.7 |
| Person | 93.3 |
| Person | 92.8 |
| Person | 92.7 |
| Jacket | 92.2 |
| Person | 90.6 |
| Face | 89.1 |
| Head | 89.1 |
| Person | 85.1 |
| Adult | 83.3 |
| Male | 83.3 |
| Man | 83.3 |
| Person | 83.3 |
| Cap | 81.6 |
| Railway | 75.8 |
| Transportation | 75.8 |
| Footwear | 68.3 |
| Shoe | 68.3 |
| Person | 64.8 |
| People | 64.5 |
| Terminal | 61.7 |
| Person | 61.1 |
| Train | 57.5 |
| Train Station | 57.5 |
| Vehicle | 57.5 |
| Shoe | 57.1 |
| Accessories | 56.2 |
| Photography | 56.1 |
| Portrait | 56.1 |
| Baseball Cap | 55.7 |
| Helmet | 55.5 |
| Sunglasses | 55.3 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| Tag | Confidence (%) |
| --- | --- |
| helmet | 34.1 |
| war | 27.9 |
| mask | 27.3 |
| protection | 26.4 |
| soldier | 25.4 |
| military | 25.1 |
| man | 24.9 |
| armor | 22.4 |
| warrior | 19.5 |
| male | 19.2 |
| person | 18.5 |
| clothing | 17.6 |
| covering | 17.1 |
| weapon | 17 |
| history | 17 |
| shield | 16.3 |
| protective covering | 15.8 |
| people | 15.6 |
| historical | 14.1 |
| army | 13.6 |
| suit | 13.5 |
| uniform | 12.9 |
| knight | 12.8 |
| statue | 12.7 |
| danger | 12.7 |
| battle | 12.7 |
| city | 12.5 |
| photographer | 12.3 |
| safety | 12 |
| disaster | 11.7 |
| gas | 11.5 |
| old | 11.1 |
| sword | 11.1 |
| attendant | 10.7 |
| protective | 10.7 |
| headdress | 10.5 |
| horse | 10.4 |
| ancient | 10.4 |
| men | 10.3 |
| culture | 10.2 |
| gun | 10.2 |
| equipment | 10.2 |
| vehicle | 10.1 |
| sport | 10 |
| toxic | 9.8 |
| human | 9.7 |
| portrait | 9.7 |
| metal | 9.7 |
| urban | 9.6 |
| medieval | 9.6 |
| pedestrian | 9.4 |
| religion | 9 |
| radiation | 8.8 |
| conflict | 8.8 |
| fight | 8.7 |
| travel | 8.4 |
| stone | 8.4 |
| black | 8.4 |
| traditional | 8.3 |
| breastplate | 8.3 |
| street | 8.3 |
| historic | 8.2 |
| world | 8 |
| football helmet | 7.9 |
| back | 7.9 |
| radioactive | 7.8 |
| adult | 7.8 |
| fear | 7.7 |
| chemical | 7.7 |
| fire | 7.5 |
| outdoors | 7.5 |
| vintage | 7.4 |
| security | 7.3 |
| art | 7.3 |
| costume | 7.3 |
| conveyance | 7.2 |
| day | 7.1 |
| architecture | 7 |

Google

created on 2018-05-11

| Tag | Confidence (%) |
| --- | --- |
| photograph | 95 |
| black and white | 89.1 |
| crew | 83 |
| motor vehicle | 79 |
| monochrome photography | 73.4 |
| militia | 70.7 |
| troop | 67.7 |
| monochrome | 60.8 |
| military officer | 56.3 |
| military person | 51.3 |
| stock photography | 51.2 |

Color Analysis

Face analysis

AWS Rekognition

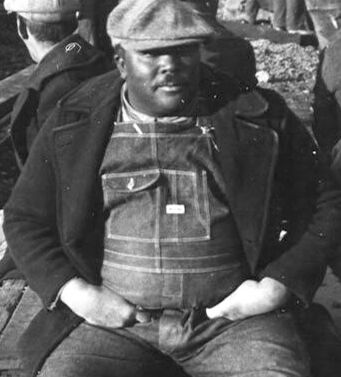

| Attribute | Value |
| --- | --- |
| Age | 22-30 |
| Gender | Male, 100% |
| Sad | 100% |
| Calm | 8.3% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Confused | 0.3% |
| Angry | 0.1% |
| Happy | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 37-45 |
| Gender | Male, 99.9% |
| Calm | 94.5% |
| Surprised | 6.4% |
| Fear | 6.1% |
| Sad | 2.5% |
| Angry | 1.8% |
| Confused | 0.7% |
| Disgusted | 0.5% |
| Happy | 0.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 25-35 |
| Gender | Male, 100% |
| Happy | 95.5% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 3.5% |
| Confused | 0.2% |
| Angry | 0.2% |
| Calm | 0.2% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 25-35 |
| Gender | Male, 91.5% |
| Disgusted | 37.2% |
| Sad | 32.1% |
| Fear | 13.5% |
| Surprised | 12.2% |
| Confused | 11.9% |
| Angry | 2.7% |
| Happy | 2.3% |
| Calm | 2.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 21-29 |
| Gender | Male, 85.7% |
| Calm | 88.1% |
| Disgusted | 8.8% |
| Surprised | 6.6% |
| Fear | 5.9% |
| Sad | 2.4% |
| Confused | 0.6% |
| Angry | 0.5% |
| Happy | 0.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-30 |
| Gender | Male, 86% |
| Calm | 93.5% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 4.4% |
| Confused | 0.4% |
| Happy | 0.4% |
| Disgusted | 0.1% |
| Angry | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-31 |
| Gender | Male, 90.9% |
| Calm | 66.1% |
| Confused | 15.3% |
| Surprised | 8.5% |
| Fear | 8.2% |
| Sad | 3.1% |
| Happy | 3% |
| Angry | 2.6% |
| Disgusted | 1.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 25-35 |
| Gender | Male, 56.8% |
| Calm | 95.4% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.4% |
| Confused | 2% |
| Angry | 0.7% |
| Disgusted | 0.5% |
| Happy | 0.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 24-34 |
| Gender | Male, 87.5% |
| Sad | 99.9% |
| Angry | 15.4% |
| Surprised | 6.4% |
| Fear | 6.3% |
| Calm | 3.5% |
| Disgusted | 0.5% |
| Confused | 0.5% |
| Happy | 0.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 25-35 |
| Gender | Male, 99.5% |
| Calm | 73.5% |
| Sad | 18.3% |
| Surprised | 7.8% |
| Fear | 6% |
| Disgusted | 2.8% |
| Confused | 1.8% |
| Happy | 1.4% |
| Angry | 1.1% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 45 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Imagga

| Traits | no traits identified |

Imagga

| Traits | no traits identified |

Imagga

| Traits | no traits identified |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Adult | 99.1% |
| Adult | 98.8% |
| Adult | 98.2% |
| Adult | 83.3% |
| Male | 99.1% |
| Male | 98.8% |
| Male | 98.2% |
| Male | 83.3% |
| Man | 99.1% |
| Man | 98.8% |
| Man | 98.2% |
| Man | 83.3% |
| Person | 99.1% |
| Person | 98.8% |
| Person | 98.2% |
| Person | 95.8% |
| Person | 95.6% |
| Person | 95.3% |
| Person | 94.7% |
| Person | 93.8% |
| Person | 93.7% |
| Person | 93.3% |
| Person | 92.8% |
| Person | 92.7% |
| Person | 90.6% |
| Person | 85.1% |
| Person | 83.3% |
| Person | 64.8% |
| Person | 61.1% |
| Jeans | 96.5% |
| Jacket | 92.2% |
| Shoe | 68.3% |
| Shoe | 57.1% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 89.3% |
| people portraits | 8.7% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 97.1% |
| a group of people sitting posing for the camera | 97% |
| a group of people posing for the camera | 96.9% |

Clarifai

created by general-english-image-caption-blip on 2025-05-17

| Caption | Confidence |
| --- | --- |
| a photograph of a group of people sitting on a bench in a train station | -100% |