Machine Generated Data

Tags

Amazon

created on 2023-10-05

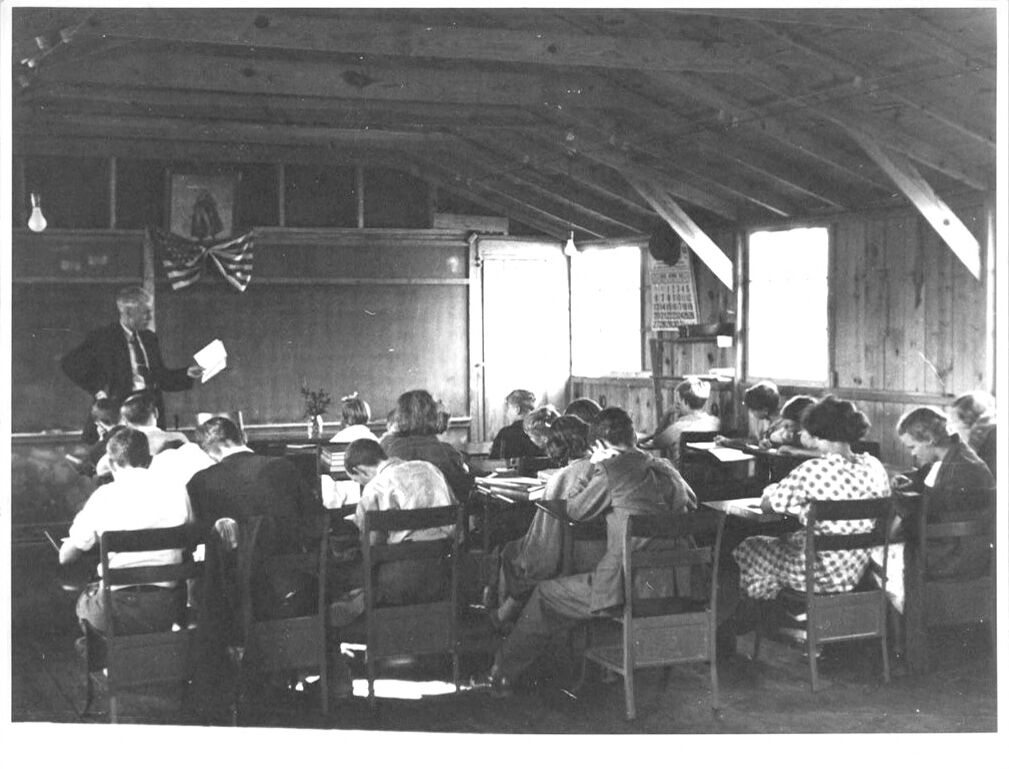

| Tag | Confidence (%) |
| --- | --- |
| Cafeteria | 99.7 |
| Indoors | 99.7 |
| Restaurant | 99.7 |
| Adult | 98.6 |
| Male | 98.6 |
| Man | 98.6 |
| Person | 98.6 |
| Person | 97.6 |
| Adult | 97.3 |
| Male | 97.3 |
| Man | 97.3 |
| Person | 97.3 |
| People | 96.5 |
| Person | 96.4 |
| Adult | 96.2 |
| Male | 96.2 |
| Man | 96.2 |
| Person | 96.2 |
| Adult | 96.1 |
| Male | 96.1 |
| Man | 96.1 |
| Person | 96.1 |
| Architecture | 95.9 |
| Factory | 95.9 |
| Adult | 95.7 |
| Male | 95.7 |
| Man | 95.7 |
| Person | 95.7 |
| Person | 94.6 |
| Classroom | 94.5 |
| Room | 94.5 |
| School | 94.5 |
| Chair | 94.1 |
| Furniture | 94.1 |
| Chair | 93.6 |
| Adult | 93.4 |
| Male | 93.4 |
| Man | 93.4 |
| Person | 93.4 |
| Dining Room | 91 |
| Dining Table | 91 |
| Table | 91 |
| Person | 90.7 |
| Person | 90.2 |
| Person | 88.5 |
| Person | 88.2 |
| Person | 84.6 |
| Person | 81.6 |
| Building | 75.3 |
| Face | 75.1 |
| Head | 75.1 |
| Hospital | 74.5 |
| Person | 69.1 |
| Manufacturing | 56.7 |
| Cafe | 56.6 |
| Crowd | 56.2 |

Clarifai

created on 2018-05-11

| Tag | Confidence (%) |
| --- | --- |
| people | 99.9 |
| adult | 98.7 |
| group | 97.9 |
| vehicle | 97.5 |
| group together | 97.2 |
| man | 96.6 |
| military | 96.4 |
| furniture | 95.5 |
| transportation system | 94.2 |
| war | 93.3 |
| room | 91.7 |
| many | 91.6 |
| soldier | 90.6 |
| woman | 89.2 |
| monochrome | 88.1 |
| sit | 85.1 |
| administration | 84.6 |
| chair | 84.2 |
| uniform | 83.6 |
| crowd | 82.2 |

Imagga

created on 2023-10-05

| Tag | Confidence (%) |
| --- | --- |
| car | 23.8 |
| freight car | 22.3 |
| locomotive | 20.1 |
| wheeled vehicle | 20 |
| transportation | 19.7 |
| vehicle | 19.6 |
| industry | 18.8 |
| steam | 16.5 |
| industrial | 16.3 |
| steel | 16.1 |
| machine | 16.1 |
| factory | 15.8 |
| drum | 15.4 |
| percussion instrument | 15 |
| equipment | 14.9 |
| train | 14.4 |
| house | 14.2 |
| old | 13.9 |
| building | 13.9 |
| transport | 13.7 |
| travel | 13.4 |
| musical instrument | 13.2 |
| railroad | 12.8 |
| power | 12.6 |
| engine | 12.5 |
| garage | 12 |
| device | 11.8 |
| architecture | 11.7 |
| man | 11.4 |
| station | 11.2 |
| railway | 10.8 |
| steam locomotive | 10.6 |
| outdoors | 10.4 |
| work | 10.3 |
| vintage | 9.9 |
| outside | 9.4 |
| smoke | 9.3 |
| structure | 9.2 |
| machinery | 9 |
| construction | 8.5 |
| male | 8.5 |
| black | 8.4 |
| city | 8.3 |
| stage | 8.3 |
| room | 8 |
| warehouse | 7.8 |
| track | 7.7 |
| heavy | 7.6 |
| heat | 7.4 |
| safety | 7.4 |
| conveyance | 7.3 |
| protection | 7.3 |
| metal | 7.2 |
| worker | 7.1 |
| platform | 7.1 |
| rural | 7 |
| indoors | 7 |
| modern | 7 |

Google

created on 2018-05-11

| Tag | Confidence (%) |
| --- | --- |
| black and white | 81.1 |
| history | 60.9 |
| factory | 60.3 |
| monochrome | 57.4 |
| monochrome photography | 55.5 |

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 24-34 |
| Gender | Male, 60% |
| Calm | 85.4% |
| Sad | 7.5% |
| Fear | 6.5% |
| Surprised | 6.3% |
| Disgusted | 1.8% |
| Happy | 1.1% |
| Angry | 0.6% |
| Confused | 0.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 27-37 |
| Gender | Male, 95.7% |
| Sad | 69.3% |
| Calm | 65.1% |
| Surprised | 6.4% |
| Fear | 6.1% |
| Confused | 0.6% |
| Angry | 0.5% |
| Happy | 0.4% |
| Disgusted | 0.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 19-27 |
| Gender | Female, 51.3% |
| Calm | 73.2% |
| Fear | 13.4% |
| Surprised | 6.6% |
| Sad | 4.7% |
| Happy | 2.6% |
| Confused | 1.8% |
| Disgusted | 1.2% |
| Angry | 1.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 16-22 |
| Gender | Male, 77.3% |
| Sad | 99.9% |
| Calm | 11.9% |
| Surprised | 6.6% |
| Fear | 6.6% |
| Angry | 4.4% |
| Happy | 2.1% |
| Confused | 1.5% |
| Disgusted | 1.3% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Adult | 98.6% |
| Adult | 97.3% |
| Adult | 96.2% |
| Adult | 96.1% |
| Adult | 95.7% |
| Adult | 93.4% |
| Male | 98.6% |
| Male | 97.3% |
| Male | 96.2% |
| Male | 96.1% |
| Male | 95.7% |
| Male | 93.4% |
| Man | 98.6% |
| Man | 97.3% |
| Man | 96.2% |
| Man | 96.1% |
| Man | 95.7% |
| Man | 93.4% |
| Person | 98.6% |
| Person | 97.6% |
| Person | 97.3% |
| Person | 96.4% |
| Person | 96.2% |
| Person | 96.1% |
| Person | 95.7% |
| Person | 94.6% |
| Person | 93.4% |
| Person | 90.7% |
| Person | 90.2% |
| Person | 88.5% |
| Person | 88.2% |
| Person | 84.6% |
| Person | 81.6% |
| Person | 69.1% |
| Chair | 94.1% |
| Chair | 93.6% |
| Building | 75.3% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 87.7% |
| people portraits | 3.5% |
| streetview architecture | 3.4% |
| text visuals | 1.1% |
| pets animals | 1% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a group of people in a room | 97.5% |
| a group of people standing in a room | 96.2% |
| a group of people sitting at a table in front of a building | 90.2% |