Machine Generated Data

Tags

Amazon

created on 2023-10-05

| Tag | Confidence (%) |
| --- | --- |
| Clothing | 100 |
| Machine | 100 |
| Spoke | 100 |
| Water | 99.5 |
| Waterfront | 99.5 |
| Adult | 99.4 |
| Male | 99.4 |
| Man | 99.4 |
| Person | 99.4 |
| Adult | 99 |
| Male | 99 |
| Man | 99 |
| Person | 99 |
| Adult | 98.7 |
| Male | 98.7 |
| Man | 98.7 |
| Person | 98.7 |
| Adult | 98.1 |
| Male | 98.1 |
| Man | 98.1 |
| Person | 98.1 |
| Wheel | 96.2 |
| Worker | 95.3 |
| Outdoors | 87.4 |
| Coat | 87.3 |
| Footwear | 72.9 |
| Shoe | 72.9 |
| Construction | 71.9 |
| Bicycle | 71.3 |
| Transportation | 71.3 |
| Vehicle | 71.3 |
| Head | 67.4 |
| Face | 67.3 |
| Hat | 66.3 |
| Shoe | 63.9 |
| Wheel | 62.3 |
| Hat | 58.9 |
| Wood | 57.8 |
| Architecture | 57.8 |
| Building | 57.8 |
| Factory | 57.8 |
| Manufacturing | 57.8 |
| Carpenter | 57.2 |
| Oilfield | 56.6 |
| Nature | 56.4 |
| Axle | 56.3 |
| Weapon | 56.1 |
| Pants | 56.1 |
| Coil | 55.8 |
| Rotor | 55.8 |
| Spiral | 55.8 |
| Cap | 55.7 |
| Pier | 55.3 |
| Tire | 55.2 |
| Hardhat | 55.2 |
| Helmet | 55.2 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-05

| Tag | Confidence (%) |
| --- | --- |
| factory | 73 |
| stretcher | 43.6 |
| plant | 42.2 |
| litter | 35 |
| building complex | 32 |
| conveyance | 26.6 |
| machine | 24.2 |
| structure | 23.2 |
| man | 22.2 |
| industry | 19.6 |
| work | 17.4 |
| equipment | 17.2 |
| construction | 16.2 |
| worker | 15.9 |
| industrial | 15.4 |
| people | 15.1 |
| steel | 14.1 |
| working | 14.1 |
| male | 13.5 |
| old | 13.2 |
| building | 12.7 |
| outdoor | 12.2 |
| sawmill | 11.8 |
| outdoors | 11.2 |
| action | 11.1 |
| power saw | 11 |
| loom | 10.9 |
| tool | 10.7 |
| job | 10.6 |
| person | 10.6 |
| power | 10.1 |
| house | 9.2 |
| city | 9.1 |
| park | 9 |
| activity | 8.9 |
| textile machine | 8.9 |
| metal | 8.8 |
| manual | 8.8 |
| home | 8.8 |
| machinery | 8.8 |
| labor | 8.8 |
| skill | 8.7 |
| day | 8.6 |
| bench | 8.5 |
| adult | 8.4 |
| field | 8.4 |
| summer | 8.4 |
| wood | 8.3 |
| leisure | 8.3 |
| sky | 8.3 |
| power tool | 8.2 |
| occupation | 8.2 |
| landscape | 8.2 |
| farm | 8 |
| water | 8 |
| travel | 7.7 |
| uniform | 7.7 |
| men | 7.7 |
| tropical | 7.7 |
| heavy | 7.6 |
| site | 7.5 |
| iron | 7.5 |
| protection | 7.3 |
| lifestyle | 7.2 |
| active | 7.2 |
| architecture | 7 |

Google

created on 2018-05-11

| Tag | Confidence (%) |
| --- | --- |
| motor vehicle | 94.1 |
| black and white | 85.7 |
| car | 80.9 |
| vehicle | 75.7 |
| monochrome photography | 63.1 |
| monochrome | 58 |

Color Analysis

Face analysis

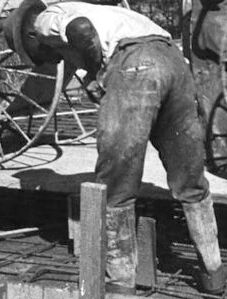

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 39-47 |
| Gender | Male, 100% |
| Calm | 62% |
| Surprised | 10.7% |
| Angry | 8.1% |
| Happy | 6.5% |
| Fear | 6.2% |
| Confused | 5.8% |
| Sad | 4.9% |
| Disgusted | 4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 33-41 |
| Gender | Male, 100% |
| Calm | 98.6% |
| Surprised | 6.5% |
| Fear | 5.9% |
| Sad | 2.2% |
| Disgusted | 0.3% |
| Confused | 0.3% |
| Happy | 0.1% |
| Angry | 0% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 39-47 |
| Gender | Male, 100% |
| Sad | 100% |
| Calm | 9.3% |
| Surprised | 6.5% |
| Fear | 5.9% |
| Confused | 5.6% |
| Happy | 2.6% |
| Angry | 0.5% |
| Disgusted | 0.4% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 49 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Feature analysis

Amazon

Adult

Male

Man

Person

Wheel

Coat

Shoe

Bicycle

Hat

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 88.5% |
| nature landscape | 4.7% |
| streetview architecture | 2% |
| pets animals | 1.7% |
| people portraits | 1.5% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a group of people standing around a bench | 88.5% |
| a group of people standing on top of a bench | 85.1% |
| a group of people sitting on a bench | 85% |