Machine Generated Data

Tags

Amazon

created on 2023-10-06

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| billboard | 29.1 |
| man | 25.5 |
| signboard | 23.2 |
| male | 21.3 |
| structure | 20.9 |
| person | 20.4 |
| business | 20 |
| building | 17.2 |
| office | 16.6 |
| call | 16.6 |
| old | 15.3 |
| suit | 15.2 |
| adult | 14.9 |
| people | 14.5 |
| architecture | 12.7 |
| city | 12.5 |
| businessman | 12.4 |
| window | 12 |
| shop | 11.4 |
| men | 11.2 |
| working | 10.6 |
| one | 10.4 |
| looking | 10.4 |
| professional | 10.3 |
| wall | 10.3 |
| work | 10.2 |
| sky | 10.2 |
| portrait | 9.7 |
| manager | 9.3 |
| house | 9.2 |
| equipment | 9.1 |
| sign | 9 |
| success | 8.8 |
| urban | 8.7 |
| standing | 8.7 |
| ancient | 8.6 |
| corporate | 8.6 |
| barbershop | 8.5 |
| casual | 8.5 |
| black | 8.4 |
| mercantile establishment | 8.3 |
| street | 8.3 |
| confident | 8.2 |
| guy | 8 |
| job | 8 |
| lifestyle | 7.9 |
| outside | 7.7 |
| executive | 7.6 |
| happy | 7.5 |
| outdoors | 7.5 |
| town | 7.4 |
| worker | 7.3 |
| metal | 7.2 |
| aged | 7.2 |
| home | 7.2 |
| face | 7.1 |

Google

created on 2018-05-11

| photograph | 95.8 |
| black and white | 87 |
| snapshot | 81.8 |
| photography | 79.8 |
| standing | 74.2 |
| vintage clothing | 69.9 |
| monochrome photography | 68.8 |
| monochrome | 65.6 |
| house | 63.5 |
| history | 59.9 |
| window | 56 |

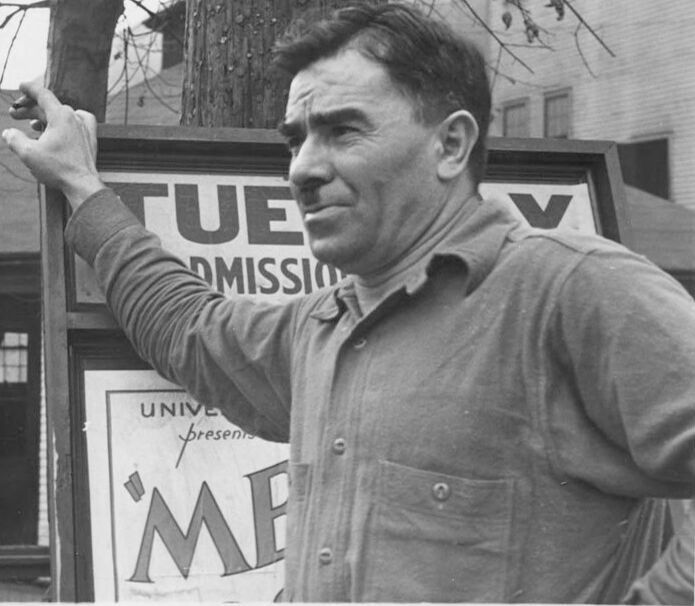

Color Analysis

Face analysis

AWS Rekognition

| Age | 49-57 |
| Gender | Male, 100% |
| Angry | 38.7% |
| Happy | 38.6% |
| Calm | 9.7% |
| Disgusted | 7.3% |
| Surprised | 6.9% |
| Fear | 6.2% |
| Sad | 2.9% |
| Confused | 1.4% |

Microsoft Cognitive Services

| Age | 39 |
| Gender | Male |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Imagga

| Traits | no traits identified |

Feature analysis

Categories

Imagga

| paintings art | 96% |
| streetview architecture | 3.2% |

Captions

Microsoft

created by unknown on 2018-05-11

| a man standing in front of a window | 89.6% |
| a man standing in front of a building | 89.5% |
| a man that is standing in front of a window | 86.9% |

Text analysis

Amazon

UNIVE

ME

V

UE

MISSIO

presents

UE

DMISSI

UNI

Fesen

ME
