Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| Clothing | 100 |
| Shirt | 100 |
| Adult | 99.3 |
| Male | 99.3 |
| Man | 99.3 |
| Person | 99.3 |
| Person | 99.1 |
| Child | 99.1 |
| Female | 99.1 |
| Girl | 99.1 |
| Face | 92.3 |
| Head | 92.3 |
| Furniture | 91.7 |
| Table | 91.7 |
| Photography | 89.6 |
| Portrait | 89.6 |
| Indoors | 81.5 |
| Restaurant | 81.5 |
| Hat | 68.1 |
| Accessories | 68 |
| Bag | 68 |
| Handbag | 68 |
| Sun Hat | 57.3 |
| Brick | 57 |
| Cafeteria | 56.9 |
| Drum | 56.8 |
| Musical Instrument | 56.8 |
| Percussion | 56.8 |
| Blouse | 56.7 |
| Dress Shirt | 56.6 |
| Cap | 56 |
| Architecture | 55.3 |
| Building | 55.3 |
| Dining Room | 55.3 |
| Dining Table | 55.3 |
| Room | 55.3 |
| Smoke | 55.2 |
| Body Part | 55.1 |
| Finger | 55.1 |
| Hand | 55.1 |
| Baseball Cap | 55 |

Clarifai

created on 2018-05-11

| Tag | Confidence |
| --- | --- |
| people | 100 |
| adult | 99.4 |
| man | 97.2 |
| group | 96.7 |
| woman | 96.5 |
| sit | 95 |
| furniture | 92.4 |
| monochrome | 91.5 |
| child | 88.9 |
| two | 88.1 |
| group together | 88.1 |
| wear | 86.7 |
| portrait | 85.1 |
| recreation | 84.5 |
| room | 84.1 |
| administration | 83.5 |
| concentration | 83.2 |
| education | 83 |
| facial expression | 79.4 |
| several | 79.3 |

Imagga

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| man | 36.4 |
| person | 34.6 |
| male | 29.1 |
| people | 26.8 |
| adult | 25.3 |
| sitting | 19.8 |
| seller | 19.6 |
| couple | 17.4 |
| businessman | 16.8 |
| business | 15.8 |
| clothing | 15.8 |
| happy | 15.7 |
| office | 15.6 |
| smiling | 15.2 |
| casual | 14.4 |
| looking | 14.4 |
| working | 14.1 |
| indoors | 14.1 |
| men | 13.7 |
| portrait | 13.6 |
| home | 13.6 |
| work | 12.7 |
| happiness | 12.5 |
| professional | 12.4 |
| table | 12.1 |
| room | 11.8 |
| teacher | 11.6 |
| lifestyle | 11.6 |
| cheerful | 11.4 |
| restaurant | 11.3 |
| smile | 10.7 |
| computer | 10.5 |
| attractive | 10.5 |
| senior | 10.3 |
| laptop | 10.3 |
| day | 10.2 |
| white | 10 |
| team | 9.9 |
| 40s | 9.7 |
| job | 9.7 |
| 30s | 9.6 |
| adults | 9.5 |
| color | 9.5 |
| clothes | 9.4 |
| teamwork | 9.3 |
| horizontal | 9.2 |
| 20s | 9.2 |
| food | 9.1 |
| old | 9.1 |
| handsome | 8.9 |
| group | 8.9 |
| patient | 8.9 |
| together | 8.8 |
| mid adult | 8.7 |
| education | 8.7 |
| dinner | 8.4 |
| black | 8.4 |
| mature | 8.4 |
| drink | 8.4 |
| indoor | 8.2 |
| one | 8.2 |
| waiter | 8.2 |
| love | 7.9 |
| face | 7.8 |
| chef | 7.8 |
| worker | 7.8 |
| newspaper | 7.7 |
| serious | 7.6 |
| chair | 7.6 |
| holding | 7.4 |
| interior | 7.1 |

Google

created on 2018-05-11

| Tag | Confidence |
| --- | --- |
| photograph | 96.1 |
| black | 95 |
| black and white | 89.5 |
| sitting | 84.2 |
| vintage clothing | 82.1 |
| snapshot | 81.8 |
| photography | 79.2 |
| human behavior | 78.1 |
| monochrome photography | 73.8 |
| monochrome | 68.7 |
| design | 67.2 |
| gentleman | 62.2 |
| stock photography | 51.7 |
| pattern | 50 |

Color Analysis

Face analysis

Amazon

Microsoft

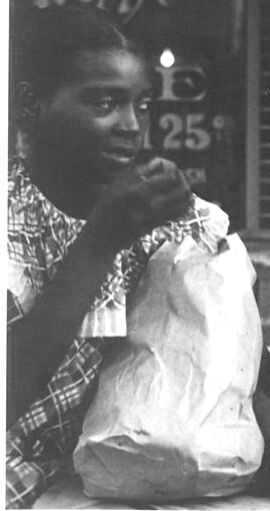

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 18-26 |
| Gender | Female, 77.3% |
| Fear | 36.7% |
| Surprised | 36.4% |
| Calm | 10.6% |
| Confused | 10.1% |
| Disgusted | 8.3% |
| Happy | 5.3% |
| Sad | 4.9% |
| Angry | 1.5% |

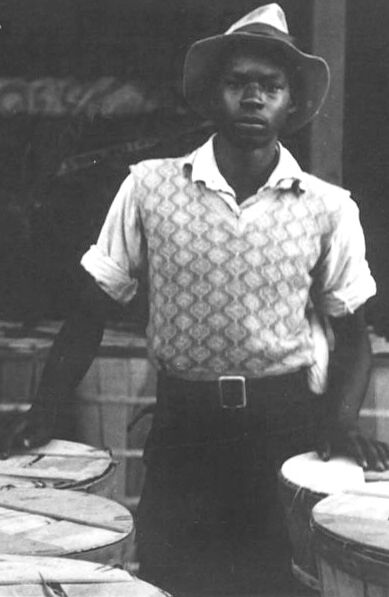

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 24-34 |
| Gender | Male, 99.9% |
| Calm | 72.9% |
| Fear | 13.6% |
| Sad | 8.1% |
| Surprised | 7% |
| Disgusted | 0.9% |
| Confused | 0.6% |
| Angry | 0.4% |
| Happy | 0.3% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 46 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 33 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 58.6% |
| food drinks | 22.7% |
| paintings art | 6.9% |
| interior objects | 6.4% |
| pets animals | 1.7% |
| events parties | 1.6% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a man and a woman sitting on a table | 76.4% |
| a man and a woman sitting at a table | 76.3% |
| a man and woman sitting at a table | 76.2% |

Text analysis

Amazon

25

mays