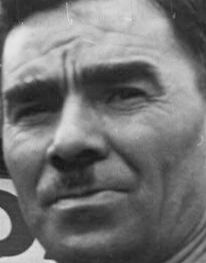

Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence (%) |
|---|---|
| Photography | 100 |
| Face | 100 |
| Head | 100 |
| Portrait | 100 |
| Body Part | 99.8 |
| Finger | 99.8 |
| Hand | 99.8 |
| Person | 99.7 |
| Adult | 99.7 |
| Male | 99.7 |
| Man | 99.7 |
| Firearm | 91 |
| Weapon | 91 |
| Text | 72 |
| Machine | 63.6 |
| Wheel | 63.6 |
| Gun | 61 |
| Handgun | 61 |
| Advertisement | 57.9 |
| Poster | 57.9 |
| Clothing | 57.4 |
| Shirt | 57.4 |
| Electronics | 56.3 |
| Phone | 56.3 |
| Coat | 56.2 |
| License Plate | 56.1 |
| Transportation | 56.1 |
| Vehicle | 56.1 |
| Photographer | 55.7 |
| Brick | 55.7 |
| Rifle | 55.6 |
| Sticker | 55.5 |
| Jacket | 55.1 |
| Sign | 55.1 |
| Symbol | 55.1 |

Clarifai

created on 2018-05-11

| Tag | Confidence (%) |
|---|---|
| people | 99.8 |
| administration | 98.6 |
| one | 98.1 |
| adult | 97.9 |
| portrait | 97.8 |
| man | 96 |
| leader | 95.6 |
| war | 88.6 |
| military | 85.4 |
| monochrome | 85.2 |
| group | 83.9 |
| offense | 81.9 |
| election | 81.6 |
| two | 80.5 |
| group together | 79.4 |
| wear | 79.4 |
| street | 77.8 |
| candidate | 76.7 |
| baseball | 76.5 |
| politician | 76.1 |

Imagga

created on 2023-10-06

| Tag | Confidence (%) |
|---|---|
| man | 50.4 |
| male | 42.6 |
| person | 32.7 |
| portrait | 27.8 |
| washboard | 27.7 |
| adult | 25.2 |
| people | 24.5 |
| device | 23.3 |
| face | 21.3 |
| military uniform | 21 |
| uniform | 20.9 |
| clothing | 20.8 |
| business | 18.2 |
| guy | 17.9 |
| businessman | 17.7 |
| casual | 16.9 |
| black | 16.8 |
| old | 16.7 |
| men | 16.3 |
| happy | 16.3 |
| senior | 15.9 |
| work | 15.7 |
| handsome | 15.2 |
| expression | 14.5 |
| looking | 14.4 |
| glasses | 13.9 |
| smile | 13.5 |
| working | 13.3 |
| office | 13.2 |
| smiling | 13 |
| alone | 12.8 |
| one | 12.7 |
| worker | 12.4 |
| serious | 12.4 |
| mature | 12.1 |
| home | 12 |
| professional | 11.9 |
| job | 11.5 |
| elderly | 11.5 |
| beard | 11.4 |
| shop | 11.3 |
| equipment | 10 |
| covering | 9.8 |
| look | 9.6 |
| construction | 9.4 |
| laptop | 9.2 |
| hand | 9.2 |
| billboard | 9.2 |
| suit | 9.2 |
| occupation | 9.2 |
| old-timer | 9.1 |
| consumer goods | 9.1 |
| standing | 8.7 |
| outside | 8.6 |
| jacket | 8.5 |
| mercantile establishment | 8.2 |
| shirt | 8.2 |
| building | 8.2 |
| aged | 8.1 |
| gray | 8.1 |
| grandfather | 8 |
| boy | 8 |
| hair | 7.9 |
| signboard | 7.8 |
| sitting | 7.7 |
| attractive | 7.7 |
| retirement | 7.7 |
| outdoor | 7.6 |
| age | 7.6 |
| head | 7.6 |
| hat | 7.6 |
| manager | 7.4 |
| closeup | 7.4 |
| safety | 7.4 |
| computer | 7.2 |
| modern | 7 |

Google

created on 2018-05-11

| Tag | Confidence (%) |
|---|---|
| photograph | 95.6 |
| black and white | 90.6 |
| snapshot | 81.8 |
| photography | 80.7 |
| monochrome photography | 78.8 |
| poster | 71.2 |
| monochrome | 67.7 |
| human behavior | 63.4 |
| history | 54.3 |
| font | 52.4 |
| vintage clothing | 51.4 |

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 48-54 |
| Gender | Male, 100% |
| Calm | 97.2% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.3% |
| Happy | 1.1% |
| Angry | 0.7% |
| Confused | 0.4% |
| Disgusted | 0.1% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 48 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Likely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.7% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
|---|---|
| a man holding a sign | 84.1% |
| a man holding a sign in front of a building | 77.5% |
| a man standing in front of a sign | 77.4% |

Text analysis

Amazon

presents

MEE

UESD

UNIVERS

OF

SION

UES

SSION

UNIVER

resents