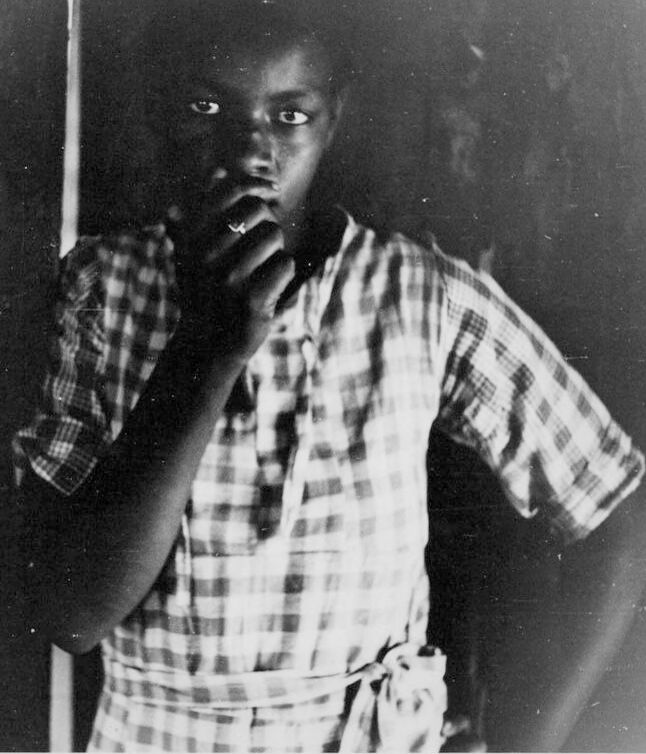

Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| Photography | 100 |
| Face | 100 |
| Head | 100 |
| Portrait | 100 |
| Clothing | 100 |
| Shirt | 100 |
| Person | 99.6 |
| Adult | 99.6 |
| Male | 99.6 |
| Man | 99.6 |
| Electrical Device | 97.3 |
| Microphone | 97.3 |
| Body Part | 95.1 |
| Finger | 95.1 |
| Hand | 95.1 |
| Smoke | 90.7 |
| Astronomy | 55.8 |
| Outer Space | 55.8 |
| Blouse | 55.6 |
| Smoking | 55.6 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| pay-phone | 100 |
| telephone | 89 |
| electronic equipment | 67.2 |
| equipment | 39.8 |
| man | 34.2 |
| male | 27.6 |
| people | 24 |
| portrait | 23.9 |
| person | 23.5 |
| black | 22.4 |
| adult | 21.3 |
| call | 19 |
| face | 18.5 |
| one | 17.9 |
| expression | 17.9 |
| guy | 16.6 |
| attractive | 15.4 |
| human | 15 |
| eyes | 14.6 |
| looking | 14.4 |
| model | 14 |
| hair | 13.5 |
| serious | 13.3 |
| suit | 12.9 |
| businessman | 12.3 |
| business | 12.1 |
| hand | 11.4 |
| hands | 11.3 |
| sexy | 11.2 |
| handsome | 10.7 |
| fashion | 10.5 |
| look | 10.5 |
| body | 10.4 |
| men | 10.3 |
| dark | 10 |
| lifestyle | 9.4 |
| hope | 8.7 |
| work | 8.6 |
| emotion | 8.3 |
| alone | 8.2 |
| style | 8.2 |
| posing | 8 |
| smile | 7.8 |
| modern | 7.7 |
| pretty | 7.7 |
| casual | 7.6 |
| professional | 7.6 |
| tie | 7.6 |
| head | 7.6 |
| strong | 7.5 |
| computer | 7.2 |
| fitness | 7.2 |
| macho | 7.1 |
| love | 7.1 |

Google

created on 2018-05-11

| Tag | Confidence |
| --- | --- |
| white | 95.8 |
| photograph | 95.7 |
| black | 95.6 |
| black and white | 91.5 |
| photography | 82.3 |
| standing | 82.3 |
| snapshot | 81.8 |
| monochrome photography | 80.5 |
| human | 70 |
| monochrome | 63 |
| facial hair | 54.8 |
| vintage clothing | 52.8 |
| portrait | 52.6 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 18-26 |
| Gender | Male, 55.1% |
| Fear | 97.9% |
| Surprised | 6.3% |
| Sad | 2.2% |
| Calm | 1.1% |
| Angry | 0.1% |
| Confused | 0% |
| Disgusted | 0% |
| Happy | 0% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| cars vehicles | 95.8% |
| paintings art | 2.7% |