Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 54-64 |
| Gender | Male, 92% |
| Calm | 99.5% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 0.1% |
| Angry | 0.1% |
| Happy | 0% |
| Disgusted | 0% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 99.1% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 93.6% |
| people portraits | 6.3% |

Captions

Microsoft

Created by unknown on 2018-05-11

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 91.6% |
| a group of people posing for the camera | 91.5% |
| a group of people posing for a picture | 91.4% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-03

| Caption | Confidence |
|---|---|
| a photograph of a woman holding a baby and two children | -100% |

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-29

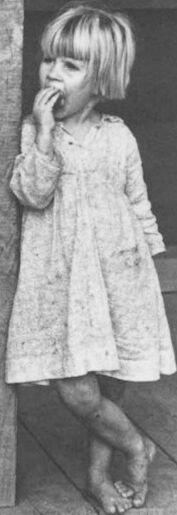

The image is a black-and-white photograph of a woman and four children standing on a porch.

The woman is wearing a long, light-colored dress with a white apron over it. She has her left arm raised and is holding a child in her right arm. The child she is holding is wearing a light-colored shirt and has its left hand in its mouth. The child is barefoot and appears to be around 2 years old.

To the left of the woman is a boy who is around 5 years old. He is wearing a light-colored shirt and overalls that are covered in dirt. His hands are in his pockets, and he is barefoot.

In front of the woman is a boy who is around 4 years old. He is wearing a light-colored shirt and overalls that are covered in dirt. He is barefoot and has his left hand in his mouth.

To the right of the woman is a girl who is around 5 years old. She is wearing a light-colored dress that is covered in dirt. She is barefoot and has her left hand in her mouth.

The background of the image is a wooden porch with a wooden floor and walls. The porch has a roof, but it is not visible in the image. The overall atmosphere of the image suggests that the family is poor and struggling to make ends meet. The children are all barefoot and their clothing is dirty, indicating that they may not have access to clean clothes or shoes. The woman's apron and the children's dirty clothes also suggest that they may be working or helping with chores.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-29

The image depicts a black-and-white photograph of a woman standing on the porch of a wooden house, surrounded by four children. The woman is dressed in a long dress and apron, with her right arm resting on a wooden post. She holds a baby in her left arm.

To her left stands a young boy wearing overalls, while another boy sits on the floor in front of her, also dressed in overalls. On the right side of the image, a girl is visible, wearing a dress.

The background of the image features the interior of the house, which appears to be made of wood. The overall atmosphere of the photograph suggests that it was taken during a time of hardship or poverty, as evidenced by the worn clothing and simple surroundings.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-01


Overall Impression:

The image is a black-and-white photograph depicting a group of children and a woman, seemingly taken during a time of hardship. The setting appears to be a simple wooden structure, likely a porch or the entrance to a house. The expressions on the subjects' faces suggest a sense of struggle, and the image has an overall feeling of bleakness.

Key Elements:

- The Woman: Stands at the center, holding a young child. She wears a simple dress and an apron-like garment. She has a stern expression.

- The Children: Several children are present. One is being held by the woman. Another stands beside her, in a worn-out jumpsuit. Another is leaning against a wooden structure. The youngest is sitting on the floor with his hand on his mouth. They all look very tired and dirty.

- The Setting: The photo is taken on a wooden porch or in a doorway. The wooden planks are weathered. The stark backdrop further emphasizes the poverty.

- Atmosphere/Mood: The mood is somber and serious. The image likely conveys a sense of poverty, hardship, and perhaps resilience.

Possible Context (based on the visual clues):

The image may be from the Great Depression era, or a similar time of economic hardship. The clothing, the setting, and the general look of the subjects support this. The photographer likely aimed to capture a slice of life during a difficult period.


Created by gemini-2.0-flash on 2025-05-01

Here's a description of the image:

This is a black and white photograph featuring a woman and four children standing on a wooden porch. The woman is the central figure, standing with one hand resting on a wooden door frame. She is wearing a simple dress and has a large white apron tied around her waist. In her arms, she holds a young child dressed in a light-colored dress.

To the woman's left, a young boy stands with his hands in his pockets. He is dressed in overalls that appear to be worn and possibly dirty. To the right of the woman, a young girl leans against the door frame, appearing to be eating something. She is wearing a simple dress and is barefoot. In front of the woman, another young boy is sitting on the wooden porch, with his elbows resting on his knees and his face in his hands. He is also wearing overalls and a light-colored undershirt.

The setting appears to be a simple, possibly rural home. The wooden porch and the weathered appearance of the clothing suggest a scene of poverty or hardship. The children's expressions range from thoughtful to forlorn, adding to the somber mood of the photograph.