Machine Generated Data

Face analysis

Amazon

Imagga

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 15-25 |
| Gender | Male, 50.4% |
| Happy | 49.5% |
| Disgusted | 49.7% |
| Surprised | 49.5% |
| Calm | 49.5% |
| Angry | 49.5% |
| Sad | 50.2% |
| Confused | 49.5% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Person | 99.6% |

Categories

Imagga

Created on 2018-03-23

| Category | Confidence |
| --- | --- |
| streetview architecture | 86.8% |
| paintings art | 8.4% |
| interior objects | 3.4% |
| pets animals | 0.4% |
| nature landscape | 0.4% |
| food drinks | 0.2% |
| people portraits | 0.2% |
| events parties | 0.1% |
| text visuals | 0.1% |
| cars vehicles | 0.1% |

Captions

Microsoft

Created by unknown on 2018-03-23

| Caption | Confidence |
| --- | --- |
| a group of people sitting on a bench | 62.9% |
| a group of people sitting at a bench | 62.5% |
| a group of people that are sitting on a bench | 49.8% |

Salesforce

Created by general-english-image-caption-blip-2 on 2025-06-28

a group of children looking at a poster for a movie

Created by general-english-image-caption-blip on 2025-05-11

a photograph of a group of children standing in front of a poster board

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-10

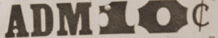

This historical black-and-white photograph depicts a group of four boys, dressed in worn clothing typical of the early 20th century, intently examining a poster board. The board advertises a local event, prominently displayed as “TUESDAYS ADM 10¢,” indicating admission for ten cents on Tuesdays. The poster features a grid-like layout of small images, seemingly depicting scenes from a movie or theatrical production, with working-class figures and community gatherings, suggesting the boys are viewing advertisements for entertainment relevant to their community.

The setting is outdoors, with stairs and a wooden structure in the foreground on the left. In the background, a small rural town is visible, with modest houses, telephone poles, and faint hills marking the landscape. The atmosphere conveys a sense of the era's working-class lifestyle and a simple yet hopeful moment as the children gaze at the display, likely imagining the excitement of the advertised event.

Created by gpt-4o-2024-08-06 on 2025-06-10

The image depicts a historical scene with four boys closely examining a bulletin board or a poster display. The board features various black-and-white movie stills and promotional photographs arranged in a grid. At the top, there's a sign that reads "TUESDAYS ADM 10₵," indicating that admission to a movie show costs ten cents on Tuesdays. The boys are standing in front of a wooden structure, possibly stairs or a building, while the background reveals a rural setting with hills and some buildings, suggesting they might be near a mining town or an industrial area. All the boys appear attentive and engrossed in the details of the images. The photo is in sepia tone, adding to the vintage atmosphere and possibly dating back to the early or mid-20th century, reflecting a time when cinema was an affordable entertainment option.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-24

This is a black and white historical photograph showing several children looking at photos displayed outside what appears to be a movie theater or entertainment venue. There's a sign visible that reads "TUESDAYS ADM 10¢" (10 cents admission). Multiple photographs are posted on a board, and the children are viewing them with interest. The setting appears to be from several decades ago, possibly the 1930s or 1940s, with wooden steps and a lattice-work pattern visible in the background. The scene captures a simple moment of entertainment and curiosity from a bygone era when 10-cent admission was standard and looking at posted photographs was a source of public interest.

Created by claude-3-haiku-20240307 on 2024-12-31

The image shows a group of people standing in front of a wooden structure, examining a poster advertising "Tuesdays Adm 10c". The poster displays several black and white photographs depicting scenes of people gathered in social settings. The background appears to be a small town or rural area, with buildings and structures visible in the distance. The people in the image seem to be examining the poster, suggesting it is advertising some kind of event or entertainment taking place on Tuesdays for a cost of 10 cents.

Created by claude-3-opus-20240229 on 2024-12-31

The black and white image shows a sign that says "TUESDAYS ADM 10¢" with six small photos underneath it. The sign appears to be attached to a wooden lattice structure. In the foreground, three young boys are looking at the sign and photos, with their backs turned to the camera. The background shows what looks like a small town or neighborhood.

Created by claude-3-5-sonnet-20241022 on 2024-12-31

This is a black and white historical photograph showing several young people looking at a movie theater display board. The sign at the top reads "TUESDAYS ADM 10¢" (admission 10 cents), and there are several promotional photographs arranged in a grid below it. The viewers appear to be dressed in casual clothing typical of what might be the 1930s or 1940s, with one wearing overalls. The setting appears rural, with what looks like houses visible in the background on a hillside. The building they're standing next to has wooden siding and lattice work visible at the top of the frame. This image captures a slice of small-town American entertainment history, when going to the movies was an affordable and popular pastime.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-12

The image depicts a group of men gathered around a sign, likely in the early 20th century. The sign, which is the central focus of the image, reads "TUESDAYS ADM 10¢" in bold letters at the top. Below this, there are six smaller images arranged in two rows of three, showcasing various scenes of people interacting with each other. The sign appears to be made of wood or metal and has a rustic, vintage look.

In the foreground, four men are standing in front of the sign, their backs turned towards the camera. They are all dressed in casual attire, with one man wearing a dark jacket and the others sporting lighter-colored shirts and overalls. The men seem to be engaged in conversation, possibly discussing the content of the sign or the events it is advertising.

To the left of the sign, a set of wooden stairs leads up to a platform or landing, which is partially obscured by the sign. In the background, a small town or village can be seen, with several buildings and houses scattered across the landscape. A dirt road or path winds its way through the town, and a few trees or hills rise up in the distance.

Overall, the image suggests that the men are attending some kind of event or gathering, possibly a social or community function, and are taking a moment to admire the sign and its contents. The vintage aesthetic of the sign and the clothing worn by the men adds to the nostalgic feel of the image, evoking a sense of a bygone era.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-12

The image is a black-and-white photograph of four boys gathered around a sign advertising movie showtimes. The sign, situated on the left side of the image, features a prominent display of "TUESDAYS ADM 10¢" at the top, with six smaller images of movie scenes below it. The boys are positioned in front of the sign, facing away from the camera and towards the sign.

In the background, a set of stairs leads up to a building on the left side of the image, while a dirt road and a few houses are visible on the right side. The overall atmosphere of the image suggests that it was taken during the early 20th century, likely in the United States. The presence of the sign and the boys' interest in it imply that they are eagerly anticipating the start of a movie or are discussing their favorite films. The image provides a glimpse into the lives of young people during this time period and highlights the importance of entertainment and community in their daily lives.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-09

The image shows a black-and-white photograph of three boys standing on a staircase, looking at a movie poster. The movie poster has the title "TUESDAYS" and the admission price of "ADMI0¢". The boys are standing close to each other, with the boy on the left looking at the poster from the side. The staircase has a wooden railing, and there are houses and a mountain in the distance. The image has a vintage look, with a slightly blurry effect.

Created by amazon.nova-pro-v1:0 on 2025-06-09

The black and white photo shows three boys standing in front of a poster. The poster has a title, "TUESDAYS ADM 10¢," and several pictures of people. The boys are looking at the poster, and the boy in the middle is looking at the boy on the left. Behind them, there is a staircase with a wooden railing, and a few houses are located behind the staircase.

Google Gemini

Created by gemini-2.0-flash on 2025-05-26

Here is a description of the image:

In a vintage black and white photograph, four boys huddle together, their backs to the camera, engrossed in looking at a poster. The poster, clearly marked with the words "TUESDAYS ADM 10¢" at the top, displays a collection of smaller photographs. The scenes depicted in these smaller photographs appear to showcase people in various social settings, possibly stills from a movie or event.

The boys themselves are dressed in what appears to be period clothing. One is wearing a darker jacket, while the other three are clad in lighter colored shirts, one with suspenders.

The setting seems to be a small town or rural area. In the background, buildings, power lines, and a hillside can be seen, suggesting a modest community. The poster is positioned beside a set of outdoor stairs, adding to the impression of a public or communal space. The overall atmosphere of the photograph evokes a sense of nostalgia, capturing a moment of simple entertainment in a bygone era.

Created by gemini-2.0-flash-lite on 2025-05-26

Here's a description of the image:

Overall Scene:

The photograph captures a nostalgic, almost cinematic scene. It's in black and white, giving it a vintage feel. The primary focus is on four young boys gazing intently at a poster advertising a movie. The background reveals a small town or village setting, suggesting a simpler time.

Main Subject:

- The Boys: Four boys are positioned in the foreground, seen from the back. They're captivated by the movie poster. Their posture and closeness to the poster emphasize their interest.

- The Movie Poster: A large, rectangular sign displaying the words "TUESDAYS ADM. 10¢" is prominently displayed. Beneath the text are a series of small, square images, likely stills from the movie.

Background and Setting:

- The Staircase: To the left of the boys, there's a flight of stairs leading up, partially obscured by a latticework wooden banister.

- The Town: The background reveals a town or village nestled in a valley, with a glimpse of a distant hill or mountain. There are buildings, likely homes or businesses, and other elements that indicate a small community. There is a railroad track visible.

Mood and Impression:

The photograph evokes a sense of nostalgia, childhood wonder, and the excitement of entertainment. The details suggest that this image may have been taken in the early to mid-20th century. It suggests simplicity and the importance of community.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The image depicts a scene from what appears to be an early 20th-century setting. Four boys are standing on a set of wooden stairs, intently looking at a large signboard in front of them. The signboard has a prominent advertisement at the top that reads "TUESDAYS ADM 10¢," indicating a special admission price of 10 cents on Tuesdays. Below the text, there are six photographs displayed on the signboard, showing groups of people, likely scenes from movies or events.

The boys are dressed in clothing typical of the early 20th century, including overalls and suspenders. They appear to be engrossed in the images on the signboard, possibly indicating their interest in the advertised event or movies.

The background shows a rural or small-town setting with a few buildings, a road, and some fencing. The environment suggests a simpler time, with a hilly or mountainous landscape visible in the distance. The overall atmosphere of the image conveys a sense of curiosity and community engagement.