Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 39-47 years |
| Gender | Male, 99.9% |
| Calm | 63.1% |
| Angry | 15.7% |
| Disgusted | 9.3% |
| Surprised | 7.2% |
| Fear | 6.4% |
| Confused | 3.4% |
| Sad | 3.3% |
| Happy | 2.2% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Adult | 99.3% |

Categories

Imagga

Created on 2023-10-05

| Category | Confidence |
|---|---|
| streetview architecture | 86.6% |
| nature landscape | 6.7% |
| paintings art | 5.6% |

Captions

Microsoft

Created by unknown on 2018-05-11

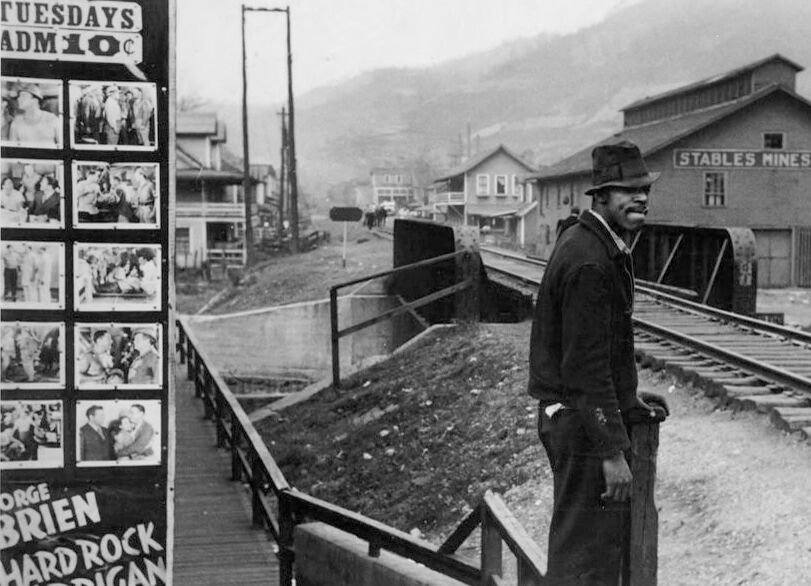

| Caption | Confidence |
|---|---|
| a man standing in front of a building | 89.6% |
| a man standing next to a building | 87.7% |
| a black and white photo of a man | 79.2% |

Salesforce

Created by general-english-image-caption-blip-2 on 2025-07-07

a man standing on a bridge with a sign that says rock river

Created by general-english-image-caption-blip on 2025-05-04

a photograph of a man standing on a train track with a suitcase

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-10

The image depicts a small industrial-town setting. A wooden walkway juxtaposes with railroad tracks that extend toward a building marked "Stables Mines." The surrounding area features residential houses, hills in the distance, and an unpaved construction area. On the left side of the image is a movie poster advertising "Hard Rock Harrigan" starring George O’Brien, showing various scenes from the film and highlighting an admission fee of 10 cents for Tuesday viewings. Signs and structures suggest the intersection of workplace and leisure environments in a mid-20th-century American town.

Created by gpt-4o-2024-08-06 on 2025-06-10

The image is a black and white photograph depicting a small industrial town scene. In the foreground, a man is standing near a wooden pathway that crosses over a set of railroad tracks. The tracks lead towards a building in the background labeled "STABLES MINES," suggesting the presence of a mining facility. The town appears to be situated in a hilly or mountainous area, as indicated by the sloping terrain and hills in the distant background. To the left, there is a signpost or billboard featuring movie posters that advertise a film called "Hard Rock Harrigan" starring George O'Brien, with an admission price of 10 cents on Tuesdays. The overall atmosphere of the image suggests a mid-20th century setting, possibly during the Great Depression era, with an industrial or working-class backdrop.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-14

The image appears to be a black and white photograph depicting a man standing on a train platform or railway track. The man is wearing a hat and coat, and in the background there are buildings and signs, including one that says "Stables Mines". The image has a vintage or historical feel to it.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-14

This is a black and white historical photograph showing a scene from what appears to be a mining town. In the background, there's a building labeled "STABLES MINES" and railroad tracks. On the left side of the image, there's a movie poster or advertisement board showing "TUESDAYS ADM 10¢" and what appears to be promotional stills from a film called "Hard Rock Harrigan" with George O'Brien. A person wearing work clothes and a hat is standing near the railroad tracks, appearing to be a worker or resident of the mining town. The setting suggests this is from the early-to-mid 20th century, likely a coal or mineral mining community.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-12

The image depicts a black-and-white photograph of a man standing on a platform next to a railroad track, with a sign advertising a movie and a building in the background.

- The man is wearing a dark jacket, pants, and a hat, and is leaning against a wooden railing that separates the platform from the railroad tracks.

- To the left of the man, there is a sign with several small pictures of people and text that reads "TUESDAYS ADM 10¢" at the top. Below this, there are more pictures and text that reads "ORGE BRIEN HARD ROCK HARRIGAN".

- In the background, there is a large building with a sign that reads "STABLES MINES" and a railroad track running through it.

- The overall atmosphere of the image suggests that it was taken in the early 20th century, possibly in a rural or industrial area.

The image provides a glimpse into the daily life of people in the early 20th century, highlighting the importance of transportation and entertainment in their lives.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-12

The image is a black-and-white photograph of a man standing on a platform, with a railroad track and a building in the background. The man is wearing a dark jacket, pants, and a hat, and is leaning against a railing. To his left is a sign with movie posters and advertisements.

In the background, there is a railroad track with a train car on it, and a building with a sign that reads "STABLES MINES 21st". There are also some houses and trees visible in the distance. The overall atmosphere of the image suggests a rural or small-town setting, possibly during the early 20th century.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-09

The image is a black-and-white photograph depicting a man standing on a platform by a railway track. He is dressed in a hat and a long coat, giving him a somewhat formal appearance. The man is looking to the left, possibly observing the surroundings or waiting for something. Behind him, there is a wooden bridge with a fence, leading to a signboard on the left side. The signboard has the words "Tuesdays" and "Adm 10c" written on it, suggesting it is a ticket booth or information board.

To the right of the man, there is a building with the sign "Stables Mines 79" above the entrance. This building appears to be a part of the mining operation, possibly a stable or a related facility. The railway track extends beyond the man, leading into the distance. The setting is a rural or industrial area, with a backdrop of mountains and a clear sky.

The overall composition of the image conveys a sense of historical context, likely from the early to mid-20th century, given the style of the clothing and the presence of a mining operation. The man's posture and the setting suggest a moment of pause or contemplation, adding a human element to the industrial landscape.

Created by amazon.nova-pro-v1:0 on 2025-06-09

The image is a black and white photo of a man standing on a concrete platform, possibly a bridge, with a wooden fence on the left side. The man is wearing a hat and a long-sleeve shirt, with his right hand on the fence. In front of him is a train track with a sign on the right side. Behind him is a building with the words "Stables Mines" on it.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-04

Here is a description of the image:

The image is a black and white photograph depicting a man standing on a bridge or walkway near a railway track. The man is the central focus of the image, wearing a dark hat and a jacket with a collared shirt underneath. He appears to be leaning on a wooden post, gazing slightly to his right.

Behind him are train tracks running parallel to the walkway, and in the distance, a large building with the words "STABLES MINES" on it can be seen. The background reveals a mountain range. To the left of the image is a movie poster advertising a film, "Hard Rock Harrigan" starring George O'Brien. The poster also indicates the movie is being shown on Tuesdays with admission at 10 cents. Overall, the image captures a moment in time, likely from the early to mid-20th century, in a mining town or similar industrial setting.

Created by gemini-2.0-flash on 2025-05-04

Here is a description of the image:

This is a black and white photograph showing a man standing next to train tracks in front of a building labeled "Stables Mines." The photograph seems to have been taken in a small mining town. In the foreground, on the left side of the image, there is a wooden walkway or bridge. A movie poster with the words "ORGE BRIEN HARD ROCK HARRIGAN" and other text is positioned beside the walkway.

To the right of the walkway, there's a slope with train tracks running along it. A man stands on the slope, next to the tracks. He is wearing a dark hat, a collared shirt, a dark jacket, and dark pants. He has a somber expression and is looking off to the right of the frame. Behind the man, the "Stables Mines" building is visible, along with other buildings and what appears to be a mountainous background. The overall feel of the image is industrial and somewhat bleak.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white photograph depicting a scene from what appears to be a mining town. In the foreground, there is a man standing next to a railcar filled with what looks like coal or ore. The man is wearing a hat, jacket, and overalls, which suggests he might be a miner or worker.

To the left of the man, there is a large poster advertising a movie showing on Tuesdays for an admission fee of 10 cents. The poster features images of scenes from the movie and prominently displays the names of the actors: George Brien, Hard Rock, and Harrigan.

In the background, there are several buildings, including a prominent structure labeled "Stables Mines Co." This suggests that the location is associated with mining operations. The buildings appear to be residential and industrial, typical of a mining town.

The setting is outdoors, with rail tracks visible and a hilly or mountainous landscape in the distance. The overall scene conveys a sense of historical context, likely from the early to mid-20th century, capturing the daily life and environment of a mining community.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-05

The image is a black and white photograph depicting a man standing on a wooden bridge overlooking a railway track. The man is dressed in dark clothing and a hat, and he is looking off to the side. In the background, there is a building with the sign "STABLES MINES" and another sign that mentions "HARD ROCK." The setting appears to be an industrial area, likely near a mine or a railway station. To the left of the image, there is a movie poster with the text "TUESDAYS ADM 10¢," indicating a movie being advertised, and the names "BRIEN, HARD ROCK, HARRIGAN" are also visible, suggesting actors or performers. The overall atmosphere of the photo suggests a historical or period setting, possibly from the early to mid-20th century.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-05

The image is a black and white photograph depicting a scene from a small town or mining community. In the foreground, a man wearing a hat and overalls is standing on a wooden walkway near railroad tracks. He appears to be leaning on a wooden post. Behind him, there is a sign that reads "STABLES MINES 79" on a building, indicating that this is likely a mining town. The background shows several buildings, including houses and possibly other structures related to the mining industry. There is also a hill or mountain in the distance.

On the left side of the image, there is a vintage-style advertisement with the text "TUESDAYS ADM 10¢" and "HARRIGAN HARD ROCK O'BRIEN." The advertisement features several small photographs of people, suggesting it is promoting a show or event, possibly a film or theatrical performance. The overall scene gives a sense of a historical or early 20th-century setting.