Machine Generated Data

Face analysis

Amazon

AWS Rekognition

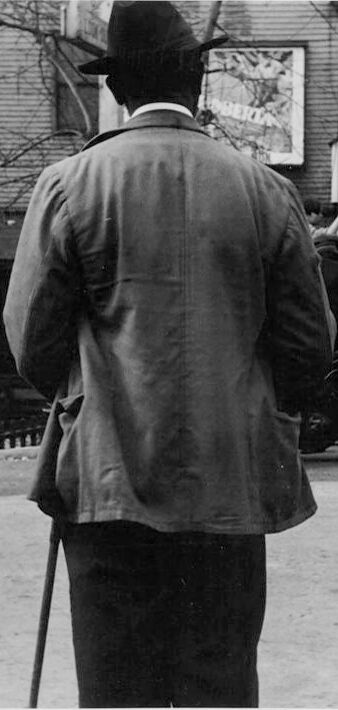

| Attribute | Estimate |
|---|---|
| Age | 31-41 |
| Gender | Male, 77.2% |
| Calm | 78.2% |
| Surprised | 9.8% |
| Fear | 6.3% |
| Sad | 5.5% |
| Angry | 2.9% |
| Disgusted | 2.3% |
| Happy | 1.9% |
| Confused | 1.2% |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Adult | 99.7% |

Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 85.1% |
| nature landscape | 6.4% |
| paintings art | 6.1% |
| people portraits | 1.7% |

Captions

Microsoft

Created by unknown on 2018-05-11

| Caption | Confidence |
|---|---|
| a man standing in front of a building | 85.5% |
| a man standing on a sidewalk | 84.2% |
| a man that is standing in front of a building | 82.7% |

Clarifai

created by general-english-image-caption-blip on 2025-05-03

| Caption | Confidence |
|---|---|
| a photograph of a man in a suit and hat standing in front of a building | -100% |

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-25

The image is a black-and-white photograph of a man standing on the street, facing away from the camera. He is wearing a suit jacket, trousers, and a hat, and has a cane in his right hand. The man appears to be African American.

In the background, there are several buildings, including a theater with a sign that reads "Hard Rock Harrigan" and "George O'Brien." There are also people walking around and a motorcycle parked on the street. The overall atmosphere of the image suggests a busy urban scene, possibly during the daytime.

The image may have been taken in the early 20th century, given the style of clothing and the presence of a motorcycle. It is difficult to determine the exact location or date of the image without more context.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-25

The image depicts a man standing on the street, facing away from the camera, with his back to the viewer. He is dressed in a suit and hat, and is holding a cane in his right hand. In the background, there is a building with a sign that reads "Hard Rock Harrigan" and another sign that says "O'Brien". A motorcycle is parked in front of the building, and several people are standing around. The overall atmosphere of the image suggests that it was taken in the early 20th century, possibly in the 1920s or 1930s. The style of clothing and the type of vehicle suggest a bygone era.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-24

The image is a black-and-white photograph depicting a street scene. In the foreground, there is a man standing with his back to the camera, wearing a hat and holding a cane. He appears to be observing the activities around him. Behind him, there is a motorcycle parked on the side of the road. To the right, a few people are standing near a building with a sign that reads "Hard Rock Harrigan." On the left side of the image, there is a tree and a building with a sign that reads "MAR THEATRE."

Created by amazon.nova-pro-v1:0 on 2025-05-24

A black and white photo of a man standing in front of a building with a sign that reads "Hard Rock Harrigan." The man is wearing a hat and holding a cane. Behind him, there is a motorcycle parked in front of the building. On the sidewalk, there are two men standing, one of whom is wearing a hat. In the distance, there is a mountain with trees on top.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-04-29

Here's a description of the image:

Overall Impression:

The image is a black and white photograph, likely taken in the early to mid-20th century. The scene appears to be a street in a small town. The overall mood is somewhat somber, with a focus on the figures and the architectural elements of the surroundings.

Main Subject & Composition:

- The primary subject is a man standing on the street, viewed from the back. He is wearing a hat and a jacket, and he is holding a cane. The photograph is clearly focused on the man's presence.

- The perspective is from a mid-ground, with the subject slightly off-center. The composition leads the viewer's eye from the man towards a theater and other figures, creating a sense of depth.

Background & Details:

- A movie theater, "MAR THEATRE," with a sign for "Hard Rock Harrigan" is prominently displayed.

- There are several other people visible in the background. One man in the foreground is facing the theater.

- A motorcycle is parked near the theater.

- The street is made of asphalt or concrete.

- The architecture of the buildings is of a vintage style and the town appears to be situated in a valley.

Atmosphere & Interpretation:

- The overall mood is of an older time. The image evokes a feeling of nostalgia and perhaps hints at the social dynamics of the era in which it was taken.

- Details like the old-style theater and the man's attire contribute to the image's historic and cultural feel.

Created by gemini-2.0-flash on 2025-04-29

The black-and-white photograph, taken from behind a man in a hat, captures a street scene with a vintage aesthetic. The man, dressed in a jacket and dark pants, has a cane in his right hand and faces away from the camera, obscuring his features. He is wearing a hat.

In the background, a movie theatre stands with its marquee displaying the names "HARRIGAN" and "OBRIEN". Next to the man, there is a motorcycle, and further down the street, another man in a hat and suit stands, leaning slightly, with other figures visible in the background. A building to the left of the man is visible, alongside a branch. A mountain range is visible in the background.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-25

The image is a black-and-white photograph depicting a street scene from an earlier era, likely the early to mid-20th century. The focal point of the image is a man standing with his back to the camera, wearing a suit jacket, trousers, and a hat, and holding a walking stick. He appears to be observing the surroundings.

In the background, there is a building with a sign that reads "Mar Theatre." The theater's marquee advertises the movie "Hard Rock Harrigan" starring George O'Brien. To the right of the theater, there is a signboard displaying various advertisements.

A few other people are visible in the scene. One person is standing near the theater entrance, while another is walking on the sidewalk. There is also a motorcycle parked on the street. The setting appears to be in a small town or rural area, with hills or mountains visible in the distance. The overall atmosphere suggests a quiet, possibly early morning or late afternoon moment in a bygone era.