Machine-Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 18-26 |
| Gender | Female, 99% |
| Sad | 95.8% |
| Angry | 39.4% |
| Surprised | 6.6% |
| Fear | 6.1% |
| Calm | 5.1% |
| Confused | 3.5% |
| Disgusted | 1.7% |
| Happy | 0.4% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 99.3% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 64.6% |
| people portraits | 31.1% |
| streetview architecture | 2.7% |

Captions

Microsoft

Created by unknown on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 90.3% |
| a group of people posing for the camera | 90.2% |
| a group of people posing for a picture | 90.1% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-19

| a photograph of a group of people standing around a doorway | -100% | |

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-24

The image depicts a black-and-white photograph of a group of children standing in front of a wooden building. The children are dressed in casual attire, with some wearing hats and others sporting overalls. The building's exterior features horizontal wooden planks, and a message is written on it in white chalk: "No Negroes on this hall."

The overall atmosphere of the image suggests that it was taken during a time when racial segregation was prevalent, as indicated by the discriminatory message on the building. The children's expressions and body language convey a sense of curiosity and possibly even concern or unease, given the context of the message. The image provides a poignant glimpse into a historical period marked by racial tensions and segregation.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-24

The image depicts a black-and-white photograph of a group of children standing in front of a wooden building, with a man standing on the porch in the background.

The children are dressed in casual clothing, with some wearing hats and others not. They appear to be gathered around a wooden post or beam, possibly for a photo opportunity. The boy standing on the porch is wearing overalls and a white shirt, and he appears to be looking at the camera.

The wooden building behind the children has a sign written on it in white chalk that reads "No Allaregate on this side." The sign is partially illegible, but it seems to be warning people not to enter or cross over a certain area.

Overall, the image suggests that the children are attending school or participating in some kind of educational activity, and the sign on the building may be related to school rules or safety guidelines.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-24

The image is a black-and-white photograph of a group of young children. The children are standing in front of a wooden house. The house is made of planks of wood, and there is a door on the right. The children are looking in different directions, and some of them are touching the house. There is a boy standing inside the house, and he is looking out of the door.

Created by amazon.nova-lite-v1:0 on 2025-05-24

The image is a black-and-white photograph featuring a group of children and an adult standing in front of a wooden building. The children are arranged in a line, with some looking directly at the camera and others looking away. The adult, who appears to be a woman, is standing in the doorway of the building and is reaching out to touch the shoulder of a child. The building has a wooden exterior and is somewhat worn, with visible marks and scratches on the surface. The image has a nostalgic and historical feel, suggesting a time period when photography was not as advanced as it is today.

Google Gemini

Created by gemini-2.0-flash on 2025-05-21

The black and white photograph shows a group of African American children standing in front of a wooden house with the words "No More write on this house" written on the wall. The children are dressed in various outfits. One boy is wearing overalls and standing in the doorway. An adult's arm appears to be motioning him to be quiet. The scene appears to be set in a rural or impoverished area. The overall tone of the photograph is somber, with the children looking serious and contemplative. The message on the wall suggests a lack of respect or care for the property.

Created by gemini-2.0-flash-lite on 2025-05-21

Here's a description of the image:

Overall Impression:

The image is a black and white photograph featuring a group of African-American children. It appears to be taken outdoors, possibly in a rural setting. The photograph has a vintage feel, suggesting it may have been taken some time ago.

Composition:

- The photo is centered around the children.

- They are positioned in front of a weathered wooden building.

- There is a door/entrance on the right side of the image.

- The background includes wooden siding and possibly an interior space.

Details:

- Children: The children are the focal point. Their expressions range from serious to curious. They are dressed in simple clothing, and their ages appear to vary.

- Building: The building is made of wooden planks. The phrase "No More Write on this house" is written in chalk or a similar material on the wall.

- Doorway: In the doorway, a boy wearing overalls is visible, leaning on a chair as an adult's hand reaches down toward him.

Possible Themes:

- Community/Social Setting: The image likely captures a moment in the children's lives, possibly a casual gathering.

- History/Culture: The photograph could offer insights into the historical experiences of African-American communities.

- Childhood: The image is a poignant glimpse into the lives of these children.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-24

The image is a black-and-white photograph featuring a group of individuals, likely a family, standing outside a wooden structure. The structure appears to be a simple, rustic building with horizontal wooden planks. There is some writing on the wall, but it is not entirely clear.

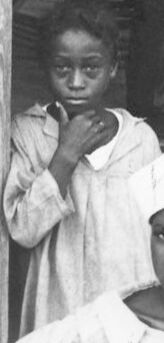

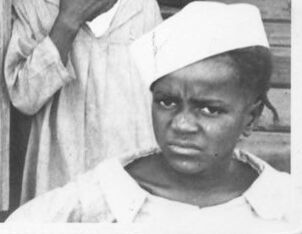

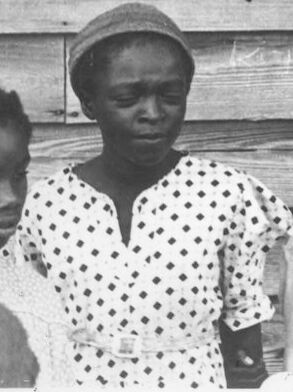

In the foreground, there are five children. The child on the far left is partially obscured. The next child, wearing a polka-dotted shirt and a headscarf, looks directly at the camera. The child in the center, wearing a white shirt, also looks at the camera. To the right, a young girl in a light-colored dress stands with her hand on her chin, and next to her is another child wearing a white headscarf and dress.

In the background, standing on the porch of the wooden structure, is a young boy wearing overalls and a white shirt. He is leaning against the doorframe, looking down at the group below.

The overall scene suggests a rural setting, possibly from an earlier time period, given the style of clothing and the construction of the building. The expressions on the faces of the individuals vary, with some looking directly at the camera and others appearing more contemplative.