Machine Generated Data

Face analysis

AWS Rekognition

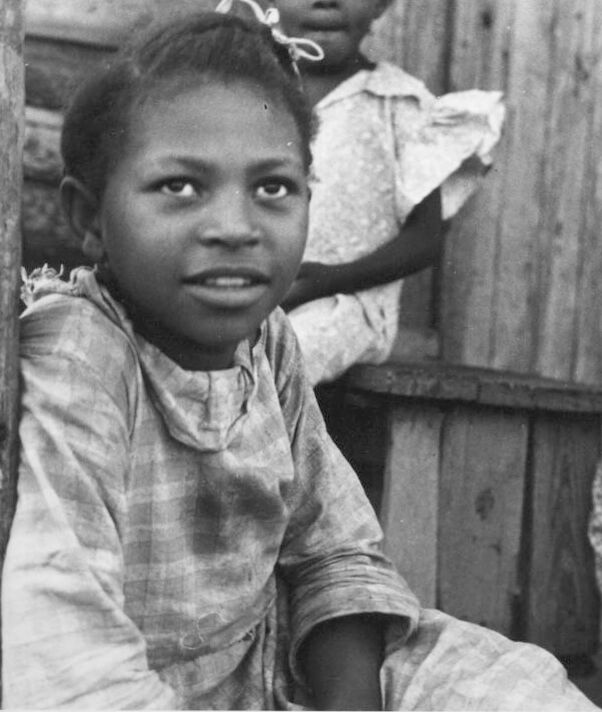

| Attribute | Value |
| --- | --- |
| Age | 13-21 |
| Gender | Female, 99.9% |
| Calm | 98.5% |
| Surprised | 6.3% |
| Fear | 6% |
| Sad | 2.2% |
| Angry | 0.4% |
| Happy | 0.3% |
| Disgusted | 0.1% |
| Confused | 0.1% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.1% |

Categories

Imagga

created on 2023-10-05

| Category | Confidence |
| --- | --- |
| people portraits | 94.7% |
| paintings art | 2.9% |
| pets animals | 2.4% |

Captions

Microsoft

created by unknown on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a man and a woman sitting on a wooden surface | 67.8% |
| a group of people sitting in front of a window | 67.7% |
| a person sitting on a wooden surface | 67.6% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip-2 on 2025-07-06

a black and white photo of a young girl sitting on a bench

Created by general-english-image-caption-blip on 2025-05-04

a photograph of a group of children sitting on a porch

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-10

The image depicts a group of individuals seated outdoors near wooden structures. Two of the people are wearing patterned clothing, including plaid and floral fabric. The scene appears to reflect a casual or everyday environment, with visible details of wooden textures in the background. The focal point includes a vertical wooden post and clothing details.

Created by gpt-4o-2024-08-06 on 2025-06-10

The image depicts a group of children sitting in front of what appears to be a wooden structure or building. The children are wearing long-sleeved dresses and shirts that seem typical of an earlier era, suggesting that the photograph might be historical. The setting and attire indicate a rural or modest setting. The photo captures a candid, everyday moment, likely taken in black and white with a focus on documenting life or conditions during the time it was taken.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-13

The image shows two young African children, a girl and a boy, standing in front of a wooden structure. The girl is looking directly at the camera with a serious expression on her face. She is wearing a light-colored dress. The boy is standing behind her, also looking at the camera. The background appears to be a rural setting, with wooden structures and a simple environment. The image has a black and white, documentary-style aesthetic, suggesting it was likely taken in the past.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-13

This is a black and white historical photograph showing several young people near what appears to be a wooden structure or building. The image appears to be from an earlier era, possibly the early-to-mid 20th century. In the foreground, there's a youth wearing what looks like a light-colored, somewhat worn shirt or blouse. Two other young people can be seen in the background. The photograph has a documentary-style quality typical of social photography from this period, capturing what seems to be an everyday moment in their lives. The wooden structure behind them appears weathered, and the overall composition suggests this may have been taken in a rural or working-class setting.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-12

The image is a black-and-white photograph of three young African American girls sitting outside a wooden building. The girl in the foreground is leaning against a wooden post, wearing a long-sleeved dress with a checkered pattern. She has short hair and a white ribbon tied in her hair. Behind her, another girl stands, holding a piece of fabric, and wearing a similar dress. To the right, a third girl sits, wearing a checkered shirt and a white headband. The background features a wooden building with vertical planks, suggesting a rural or impoverished setting. The overall atmosphere of the image is one of simplicity and hardship, with the girls' clothing and surroundings indicating a lack of resources.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-12

The image is a black-and-white photograph of three young black girls, likely taken in the early 20th century. The girl in the foreground is positioned on the left side of the image, with her body facing the camera and her head turned slightly to her right. She has dark skin and hair pulled back into a ponytail with a white ribbon. She is wearing a long-sleeved dress with a plaid pattern.

Behind her, to the right, is another girl who is also facing the camera. She has dark skin and hair pulled back into a ponytail with a white ribbon. She is wearing a long-sleeved dress with a white pattern.

In the background, there is a third girl who is partially visible. She is standing behind the first two girls and is facing the camera. She has dark skin and hair pulled back into a ponytail with a white ribbon. She is wearing a long-sleeved dress with a white pattern.

The background of the image appears to be a wooden structure, possibly a house or a fence. The overall atmosphere of the image suggests that it was taken in a rural or impoverished area, given the simple clothing and surroundings of the girls.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-06

The image is a black-and-white photograph featuring three young girls, possibly siblings, sitting outside a wooden structure. The girl in the center, who appears to be the youngest, is sitting on a wooden post and has a serious expression. She is wearing a checkered shirt and has her hair tied back. The girl on her left, who is slightly older, is sitting on a wooden bench and is looking directly at the camera. She is wearing a white dress with a ribbon in her hair. The girl on the right, who is the oldest, is sitting on the ground and is looking down. She is wearing a white shirt and has her hair tied back.

Created by amazon.nova-pro-v1:0 on 2025-06-06

The black-and-white image features three young girls, probably siblings, sitting on the wooden steps of a house. The girl on the left is sitting on a wooden post and looking directly at the camera. She has a serious expression on her face. Behind her, another girl is standing on the wooden steps, looking to the left. On the right, another girl is sitting, looking to the right.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-03

Overall Impression:

The image is a black and white photograph that appears to be from the mid-20th century, possibly documenting everyday life. It features a group of young children, likely in a rural or modest setting.

Details:

Main Subject: The main focus is a young girl, positioned in the foreground. She is looking directly at the camera with a slight smile. She is wearing a light-colored, patterned dress. She has her hair pulled up with a ribbon.

Other Children: Behind the main subject, there is another young girl sitting. Another child is in the right corner. They seem to be interacting.

Setting: The children are positioned near a wooden structure, which could be a house or a porch. The worn wooden planks and the simple clothing suggest a setting with a degree of poverty.

Mood: The overall mood of the photograph is one of innocence, with a touch of hardship.

Created by gemini-2.0-flash on 2025-05-03

The black and white photo shows three African-American children near a wood-framed building. The first child, a girl, is leaning against a wooden post and is wearing a patterned shirt. In the background, another child, a girl, is sitting on a wooden platform. In the lower right corner of the frame, is the body of a third child, presumably seated. The children's poses and expressions are relaxed.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white photograph depicting three young children. The central focus is on a young girl who is looking directly at the camera. She has a decorative bow in her hair and is wearing a dress with a checkered pattern. She appears to be leaning against a wooden structure, possibly a fence or a wall made of wooden planks.

In the background, two other children are visible. One child, who is partially obscured, is standing behind the girl and appears to be holding onto the wooden structure. The other child is sitting to the right of the central girl and is also looking towards the camera, though their face is partially hidden.

The setting appears to be outdoors, and the overall mood of the image suggests a candid moment captured in a rural or modest community. The children's expressions and the simplicity of their clothing and surroundings evoke a sense of everyday life in a bygone era.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-11

The image appears to be a black and white photograph, likely from a historical period, depicting a group of children. The foreground shows a young girl with a serious expression, looking directly at the camera. She has her hair bound back and is wearing a light-colored, possibly plaid, shirt. Behind her, another child is partially visible, standing with one arm resting on a wooden structure, possibly a porch or a fence. To the right, another child is seated, facing away from the camera, and appears to be wearing a patterned shirt. The setting seems to be rustic, with wooden planks forming the background and structure of the scene. The overall mood of the image suggests a candid moment captured in a simple, unembellished environment.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-11

This black-and-white photograph depicts a group of children sitting and standing near a wooden structure. The main subject is a young girl in the foreground, wearing a light-colored, slightly worn shirt. She is looking directly at the camera with a neutral expression. Behind her, another girl is standing with her arm resting on the wooden structure. To the right, another girl is sitting and appears to be looking off to the side. The wooden structure in the background looks weathered, suggesting an outdoor, possibly rural setting. The overall mood of the image seems candid and reflective of a moment in the children's daily lives.