Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence (%) |
|---|---|
| Architecture | 100 |
| Building | 100 |
| Outdoors | 100 |
| Shelter | 100 |
| Countryside | 100 |
| Hut | 100 |
| Nature | 100 |
| Rural | 100 |
| Face | 100 |
| Head | 100 |
| Photography | 100 |
| Portrait | 100 |
| Person | 99.6 |
| Adult | 99.6 |
| Male | 99.6 |
| Man | 99.6 |
| Person | 99.5 |
| Child | 99.5 |
| Female | 99.5 |
| Girl | 99.5 |
| Shack | 98.7 |
| Housing | 94.6 |
| House | 84.2 |
| Cabin | 76.4 |
| Smoke | 57.9 |
| Log Cabin | 56.1 |
| Blouse | 55.8 |
| Clothing | 55.8 |
| Happy | 55.7 |
| Smile | 55.7 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| Tag | Confidence (%) |
|---|---|
| man | 46.4 |
| male | 37.6 |
| person | 36.7 |
| senior | 32.8 |
| adult | 27.8 |
| people | 27.3 |
| portrait | 25.9 |
| old | 24.4 |
| face | 24.2 |
| looking | 22.4 |
| mature | 22.3 |
| businessman | 22.1 |
| grandfather | 21.2 |
| elderly | 20.1 |
| business | 17.6 |
| office | 17.4 |
| lifestyle | 17.3 |
| hair | 16.6 |
| couple | 16.6 |
| world | 16.1 |
| human | 15.8 |
| serious | 15.3 |
| glasses | 14.8 |
| professional | 14.8 |
| handsome | 14.3 |
| happy | 13.8 |
| gray | 13.5 |
| head | 13.4 |
| hand | 12.9 |
| sitting | 12.9 |
| expression | 12.8 |
| casual | 12.7 |
| device | 12.3 |
| look | 12.3 |
| shirt | 12.1 |
| corporate | 12 |
| alone | 11.9 |
| smiling | 11.6 |
| indoors | 11.4 |
| men | 11.2 |
| love | 11.1 |
| suit | 11 |
| smile | 10.7 |
| retired | 10.7 |
| retirement | 10.6 |
| worker | 10.5 |
| one | 10.5 |
| thinking | 10.4 |
| businesspeople | 10.4 |
| black | 10.2 |
| work | 10.2 |
| happiness | 10.2 |
| husband | 9.6 |
| talking | 9.5 |
| executive | 9.5 |
| manager | 9.3 |
| phone | 9.2 |
| cheerful | 8.9 |
| depression | 8.8 |
| older | 8.7 |
| day | 8.6 |
| desk | 8.5 |
| holding | 8.3 |
| aged | 8.1 |
| lady | 8.1 |
| home | 8 |
| job | 8 |
| worried | 7.8 |
| middle aged | 7.8 |
| pretty | 7.7 |
| aging | 7.7 |
| facial | 7.7 |
| telephone | 7.6 |
| wife | 7.6 |
| confident | 7.3 |
| shop | 7.3 |
| beard | 7.2 |
| spectator | 7.2 |
| working | 7.1 |
| model | 7 |

Google

created on 2018-05-11

| Tag | Confidence (%) |
|---|---|
| photograph | 96.5 |
| black | 95.4 |
| black and white | 92.6 |
| standing | 90.2 |
| monochrome photography | 87 |
| vintage clothing | 83.5 |
| photography | 83.2 |
| snapshot | 81.8 |
| monochrome | 70.7 |
| history | 59.7 |
| portrait | 56.6 |
| stock photography | 53.6 |
| girl | 50.6 |

Microsoft

created on 2018-05-11

| Tag | Confidence (%) |
|---|---|
| person | 99.3 |
| man | 94.6 |
| window | 93.3 |
| restaurant | 17.4 |

Color Analysis

Face analysis

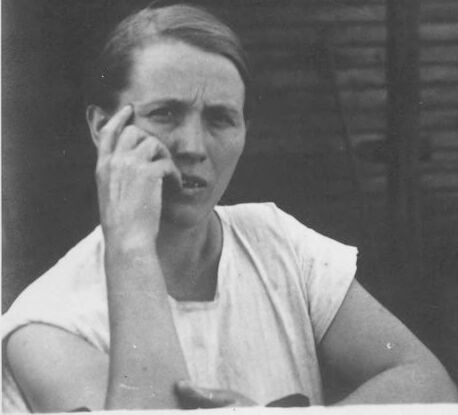


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 31-41 |
| Gender | Male, 50.3% |
| Confused | 74.6% |
| Sad | 37.2% |
| Surprised | 6.4% |
| Fear | 6% |
| Angry | 0.6% |
| Calm | 0.6% |
| Disgusted | 0.3% |
| Happy | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 34-42 |
| Gender | Female, 99.6% |
| Sad | 100% |
| Surprised | 6.5% |
| Fear | 6.1% |
| Confused | 5.3% |
| Calm | 5.1% |
| Angry | 1.4% |
| Disgusted | 1.1% |
| Happy | 0.2% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 46 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 32 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Imagga

| Traits | no traits identified |

Imagga

| Traits | no traits identified |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 44.9% |
| interior objects | 24.4% |
| beaches seaside | 11.1% |
| streetview architecture | 7.1% |
| paintings art | 3.6% |
| food drinks | 2.4% |
| cars vehicles | 2.2% |
| pets animals | 2.2% |
| nature landscape | 1.1% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
|---|---|
| a man sitting in front of a window | 93.1% |
| a man sitting in front of a window posing for the camera | 90.4% |
| a man is sitting in front of a window | 88.7% |