Machine Generated Data

Tags

Amazon

created on 2023-10-05

| Tag | Confidence (%) |
| --- | --- |
| Adult | 99.1 |
| Male | 99.1 |
| Man | 99.1 |
| Person | 99.1 |
| Adult | 98.8 |
| Male | 98.8 |
| Man | 98.8 |
| Person | 98.8 |
| Adult | 96 |
| Male | 96 |
| Man | 96 |
| Person | 96 |
| Machine | 95.6 |
| Wheel | 95.6 |
| Wheel | 94.7 |
| Clothing | 87.7 |
| Coat | 87.7 |
| Car | 84 |
| Transportation | 84 |
| Vehicle | 84 |
| Face | 80.5 |
| Head | 80.5 |

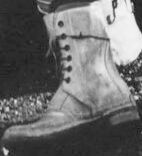

| Tag | Confidence (%) |
| --- | --- |
| Footwear | 75.5 |
| Shoe | 75.5 |
| Hat | 62.3 |
| Shoe | 60.3 |
| People | 57.1 |
| Boot | 56.6 |
| Hunting | 56.3 |
| Photography | 56 |
| Spoke | 55.1 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-05

| Tag | Confidence (%) |
| --- | --- |
| rugby ball | 100 |
| ball | 100 |
| game equipment | 69.5 |
| equipment | 49.8 |
| sport | 33.4 |
| football | 28.8 |
| game | 28.5 |
| man | 27.5 |
| grass | 26.9 |
| field | 26.8 |
| male | 25.6 |
| competition | 23.8 |
| people | 23.4 |
| play | 22.4 |
| soccer | 22.1 |
| player | 21.7 |
| team | 21.5 |
| person | 21.3 |
| kick | 19.5 |
| athlete | 17.9 |
| outside | 17.1 |
| active | 16.4 |
| adult | 15.6 |
| sports | 14.8 |
| men | 14.6 |
| playing | 14.6 |
| exercise | 13.6 |
| goal | 13.4 |
| outdoors | 13.4 |
| soccer ball | 13 |
| foot | 12.4 |
| outdoor | 12.2 |
| action | 12 |
| fun | 12 |
| happy | 11.9 |
| players | 11.8 |
| fitness | 11.7 |
| recreation | 11.6 |
| activity | 11.6 |
| leisure | 11.6 |
| boy | 11.3 |
| wheelchair | 11.2 |
| child | 11.1 |
| practice | 10.6 |
| run | 10.6 |
| running | 10.5 |
| planner | 10.5 |
| black | 10.2 |
| training | 10.2 |
| lifestyle | 10.1 |
| holding | 9.9 |
| park | 9.9 |
| attractive | 9.8 |
| chair | 9.6 |
| league | 8.8 |
| professional | 8.7 |
| helmet | 8.4 |
| summer | 8.4 |
| children | 8.2 |
| sitting | 7.7 |
| balls | 7.7 |
| youth | 7.7 |
| athletic | 7.7 |
| leg | 7.6 |
| health | 7.6 |
| healthy | 7.6 |
| clothing | 7.5 |
| teamwork | 7.4 |
| teen | 7.3 |
| teenager | 7.3 |
| smiling | 7.2 |
| body | 7.2 |
| smile | 7.1 |
| women | 7.1 |
| love | 7.1 |

| ||

Google

created on 2018-05-11

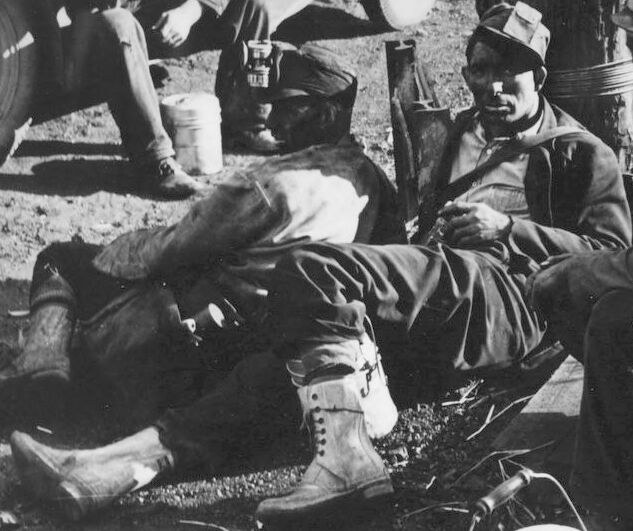

| Tag | Confidence (%) |
| --- | --- |
| soldier | 86 |
| black and white | 85.9 |
| troop | 81.8 |
| motor vehicle | 80.7 |
| army | 77.1 |
| militia | 73.2 |
| military | 70.4 |
| crew | 68.8 |
| military organization | 62.9 |
| monochrome photography | 60.3 |
| vehicle | 59.7 |
| military person | 57.3 |
| monochrome | 55.1 |
| military officer | 55 |

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 24-34 |
| Gender | Female, 58.9% |
| Calm | 99.2% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.3% |
| Confused | 0.1% |
| Disgusted | 0% |
| Angry | 0% |
| Happy | 0% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-31 |
| Gender | Male, 86.3% |
| Calm | 99.4% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 0.1% |
| Disgusted | 0.1% |
| Angry | 0.1% |
| Happy | 0% |

Imagga

| Attribute | Value |
| --- | --- |
| Traits | no traits identified |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 95.2% |
| people portraits | 3.6% |

Captions

Microsoft

created by unknown on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 80.1% |
| a group of people posing for the camera | 80% |
| a group of people posing for a picture | 79.9% |

Clarifai

created by general-english-image-caption-blip on 2025-05-18

| Caption | Confidence |
| --- | --- |
| a photograph of a group of men sitting on the ground | -100% |