Machine Generated Data

Tags

Amazon

created on 2023-10-06

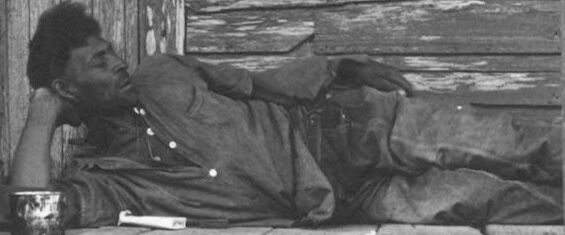

| Tag | Confidence (%) |
|---|---|
| Adult | 99.2 |
| Male | 99.2 |
| Man | 99.2 |
| Person | 99.2 |
| Male | 99 |
| Person | 99 |
| Boy | 99 |
| Child | 99 |
| Adult | 98.4 |
| Male | 98.4 |
| Man | 98.4 |
| Person | 98.4 |
| Wood | 98.4 |
| Face | 93.4 |
| Head | 93.4 |
| Clothing | 91.1 |
| Coat | 91.1 |
| Outdoors | 85.8 |
| Bench | 84.3 |
| Furniture | 84.3 |
| People | 76.2 |
| Architecture | 75.3 |
| Building | 75.3 |
| Shelter | 75.3 |
| Nature | 71.5 |
| Art | 71.1 |
| Painting | 71.1 |
| Hat | 68.8 |
| Photography | 66.4 |
| Portrait | 66.4 |
| Housing | 60.3 |
| Countryside | 58 |
| Hut | 58 |
| Rural | 58 |
| Footwear | 57.4 |
| Shoe | 57.4 |
| Bus Stop | 55.5 |
| Shack | 55.5 |
| Couch | 55.4 |
| Slum | 55.3 |
| Cabin | 55 |
| House | 55 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| Tag | Confidence (%) |
|---|---|
| stretcher | 45.2 |
| litter | 36.3 |
| conveyance | 28.5 |
| adult | 25.2 |
| happy | 25.1 |
| kin | 23.8 |
| man | 22.9 |
| male | 22.2 |
| person | 21.4 |
| people | 20.6 |
| sitting | 20.6 |
| lifestyle | 18.8 |
| attractive | 17.5 |
| sofa | 17.4 |
| couple | 17.4 |
| couch | 17.4 |
| smile | 17.1 |
| portrait | 16.2 |
| home | 15.1 |
| love | 15 |
| happiness | 14.9 |
| casual | 13.6 |
| old | 13.2 |
| child | 12.9 |
| smiling | 11.6 |
| family | 11.6 |
| pretty | 11.2 |
| men | 11.2 |
| laptop | 11 |
| relaxing | 10.9 |
| relaxation | 10.9 |
| cadaver | 10.8 |
| cheerful | 10.6 |
| indoors | 10.5 |
| indoor | 10 |
| house | 10 |
| joy | 10 |
| leisure | 10 |
| fashion | 9.8 |
| fun | 9.7 |
| bench | 9.7 |
| together | 9.6 |
| computer | 9.6 |
| boy | 9.6 |
| hair | 9.5 |
| model | 9.3 |
| face | 9.2 |
| mother | 9.1 |
| interior | 8.8 |
| women | 8.7 |
| cute | 8.6 |
| jeans | 8.6 |
| sit | 8.5 |
| two | 8.5 |
| relax | 8.4 |
| black | 8.4 |
| alone | 8.2 |
| group | 8.1 |
| sexy | 8 |
| work | 8 |
| room | 7.9 |
| lonely | 7.7 |
| resting | 7.6 |
| enjoy | 7.5 |
| relaxed | 7.5 |
| human | 7.5 |
| senior | 7.5 |
| outdoors | 7.5 |
| rest | 7.4 |
| technology | 7.4 |
| lady | 7.3 |
| art | 7.2 |
| looking | 7.2 |
| building | 7.1 |
| handsome | 7.1 |
| working | 7.1 |
| day | 7.1 |
| father | 7 |

Google

created on 2018-05-11

| Tag | Confidence (%) |
|---|---|
| photograph | 95.7 |
| black | 95.3 |
| black and white | 92.4 |
| monochrome photography | 83 |
| snapshot | 81.8 |
| photography | 80.5 |
| monochrome | 69.8 |
| history | 60.8 |
| visual arts | 59.2 |
| stock photography | 55.7 |
| vintage clothing | 54.7 |
| still life photography | 52.9 |

Color Analysis

Face analysis

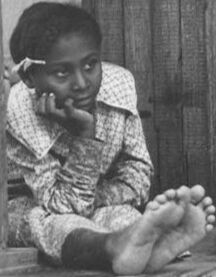


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 28-38 |
| Gender | Female, 100% |
| Calm | 93.3% |
| Surprised | 6.4% |
| Fear | 6% |
| Sad | 4.6% |
| Confused | 0.4% |
| Happy | 0.2% |
| Angry | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 34-42 |
| Gender | Male, 99.7% |
| Calm | 81.7% |
| Confused | 13.7% |
| Surprised | 6.5% |
| Fear | 5.9% |
| Sad | 3.2% |
| Happy | 0.4% |
| Disgusted | 0.4% |
| Angry | 0.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 36-44 |
| Gender | Male, 99.4% |
| Calm | 94.4% |
| Surprised | 6.6% |
| Fear | 5.9% |
| Sad | 2.7% |
| Happy | 1.5% |
| Confused | 1.1% |
| Disgusted | 0.3% |
| Angry | 0.3% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 32 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 41 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 61.1% |
| paintings art | 18.9% |
| beaches seaside | 8.6% |
| nature landscape | 4.9% |
| cars vehicles | 3.7% |
| interior objects | 1.2% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
|---|---|
| a man sitting on a bench | 84.1% |
| a man sitting on a wooden bench | 79.8% |
| a man sleeping on a bench | 64.9% |