Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-28 |
| Gender | Female, 100% |
| Calm | 94.5% |
| Surprised | 6.4% |
| Fear | 6% |
| Sad | 3.5% |
| Happy | 0.5% |
| Confused | 0.3% |
| Angry | 0.3% |
| Disgusted | 0.1% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Adult | 99.5% |

Categories

Imagga

created on 2023-10-06

| Category | Confidence |
| --- | --- |
| paintings art | 98.4% |

Captions

Microsoft

created by unknown on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a group of people sitting on a bench | 95.2% |
| a group of people sitting on a bench looking at the camera | 92.5% |
| a group of people sitting on a bench next to a window | 92.1% |

Salesforce

Created by general-english-image-caption-blip-2 on 2025-07-04

three men sitting on a bench outside of a shack

Created by general-english-image-caption-blip on 2025-05-05

a photograph of a group of people sitting on a bench

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-14

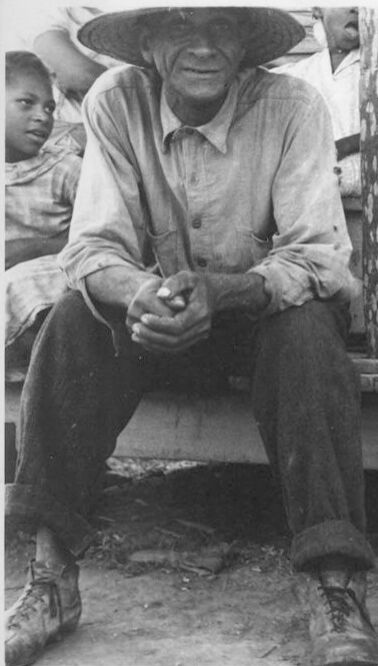

This black-and-white photograph depicts a group of individuals sitting on a wooden porch in a rural setting. One person is wearing a wide-brimmed hat, while the others are dressed in plain, functional clothing typical of laborers or farm workers. The wooden planks and weathered texture of the porch and building suggest modest conditions. A tin cup is placed near one of the seated individuals, adding to the impression of simplicity. The atmosphere reflects a quiet, candid moment of daily life.

Created by gpt-4o-2024-08-06 on 2025-06-14

The image is a black and white photograph depicting a group of people sitting on a wooden porch. The person on the left is wearing a broad-brimmed hat and sitting with their hands clasped. In the center, there is a child in patterned clothing, sitting and leaning against a wooden wall. On the right, a person is lying down on the porch with their arm resting on their knee, and a metal cup is placed next to them on the wooden planks. The backdrop consists of weathered wood planks, suggesting a rustic or rural setting. The clothing of the individuals appears to be modest and practical. The overall scene gives a sense of a moment of rest or pause in an outdoor setting.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-14

The image appears to depict a group of people sitting on a wooden structure, likely a porch or bench. The individuals appear to be older, with weathered and worn expressions on their faces. They are dressed in simple, utilitarian clothing. The setting appears to be a rural or impoverished area, with a wooden structure and sparse surroundings visible in the background. The overall tone of the image is one of hardship and difficult living conditions.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-14

The black and white image depicts a group of four African American individuals sitting on the steps of a wooden structure, likely a cabin or shack. There are two older men wearing hats seated on either side, and a young boy in the center looking directly at the camera. The men have weathered, worn faces suggesting they have lived difficult lives. The setting appears rural and impoverished based on the rudimentary building and the tattered clothing the subjects are wearing. The image provides a glimpse into the harsh realities and living conditions faced by many African Americans, especially in more rural areas, during what was likely the early-to-mid 20th century timeframe of the photograph.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-14

This is a black and white historical photograph that appears to be from the early-to-mid 20th century. The image shows several people sitting on what appears to be a wooden porch or platform. The central figure is wearing light-colored work clothes and a hat. The photograph has a documentary-style quality typical of Depression-era photography, with the weathered wood and simple clothing suggesting a rural, working-class setting. The composition captures what seems to be a candid moment of daily life, with the subjects appearing relaxed and natural in their environment.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-31

The image is a black-and-white photograph of a group of people sitting on a wooden bench. The man on the left wears a wide-brimmed hat, a light-colored shirt, dark pants, and boots. He holds an object in his hands. Next to him is a woman with her head resting on her hand, wearing a patterned dress. On the far right lies a man in a light-colored shirt, with his head turned towards the camera.

In the background, there are other people and a wooden structure, possibly a building or a fence. The overall atmosphere suggests a relaxed gathering or social event, possibly in a rural or outdoor setting.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-31

The image depicts a group of people sitting on a wooden bench, with a man in the foreground wearing a wide-brimmed hat and a light-colored shirt, and a woman in the middle wearing a checkered dress. The man on the right is reclining on the bench, and there are other people in the background. The image appears to be a black-and-white photograph taken in a rural or outdoor setting, possibly during the early 20th century.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-30

The black-and-white photo shows a group of people sitting on a wooden bench. The man on the left is wearing a hat and a long-sleeve shirt. He is holding a cigarette in his hand. The man on the right is lying down on the bench, while the boy in the middle is sitting and holding his chin. Behind them, there is a wooden wall with a door and a child sitting on the ground.

Created by amazon.nova-lite-v1:0 on 2025-05-30

The black-and-white image depicts a family sitting on a wooden porch. An elderly man is seated on the left, wearing a wide-brimmed hat and a long-sleeved shirt. He appears to be looking towards the camera, while his hands are clasped together. To his right, a young boy is sitting, leaning against a wooden post, and resting his chin on his hand. Next to him is another young boy lying down, also looking towards the camera. Behind them, there is a wooden structure, possibly a house, with a person standing near the entrance.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-05

Certainly! Here's a description of the image:

Overall Impression:

The photograph is a black and white image depicting a group of people sitting on a porch. It has a vintage feel and likely captures a moment in time in a rural setting.

Details:

Setting: The scene appears to be outside, possibly in front of a wooden house or building. The ground is dirt or unpaved.

People: The main subjects of the photo are three people sitting on a wooden porch.

- First Person: An older man in a hat is seated, looking directly at the camera. He's wearing a button-down shirt and dark pants, with his hands clasped in his lap.

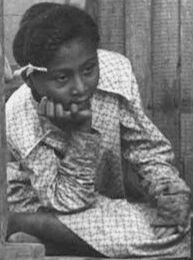

- Second Person: A young person, sitting on the porch with their hand on their chin.

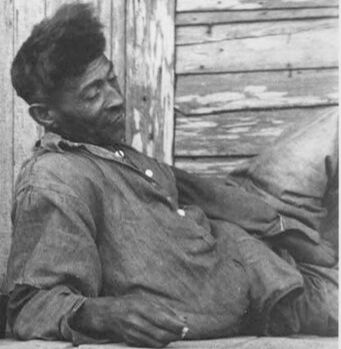

- Third Person: An older man, lying on the porch.

Mood: The photo evokes a sense of stillness and possibly hardship. The subjects' expressions and the simple setting suggest a life of working or living in poverty.

Let me know if you would like a more detailed description or if there is anything specific you would like me to focus on!

Created by gemini-2.0-flash on 2025-05-05

The image is a black and white photograph of a group of people sitting on a porch. The composition is centered around the individuals, with a backdrop of a wooden structure. The quality of the photograph is grainy, which adds to its historical feel.

In the foreground, on the left, is a man wearing a hat, a long-sleeved shirt, and pants. He is sitting with his hands clasped. Next to him is a child with their head resting on their hands, giving the impression of being contemplative. Further to the right, another man is reclined on the porch with a cup nearby. The men are wearing plain attire.

The background is a weathered wooden wall. The overall atmosphere of the photograph conveys a sense of hardship and resilience.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white photograph depicting a group of people sitting on a wooden bench or platform. The scene appears to be set in a rural area, possibly in the early to mid-20th century. The people in the photograph are dressed in simple, work-oriented clothing, suggesting they might be farmers or laborers.

From left to right:

- The first person, a man, is sitting with his legs crossed and hands clasped. He is wearing a wide-brimmed hat, a long-sleeved shirt, and trousers. His facial expression appears to be relaxed.

- The second person, a young girl, is seated in the middle. She is wearing a patterned dress and appears to be holding something in her hands, possibly a piece of food or an object. She looks directly at the camera with a neutral expression.

- The third person, another man, is leaning back against the wall with his legs stretched out. He is wearing a long-sleeved shirt and trousers, and his posture suggests he is relaxed or possibly tired.

In the background, there are wooden walls and another child partially visible, indicating the setting might be outside a rustic building or shack. The overall atmosphere of the photograph conveys a sense of simplicity and hard work, reflecting the daily life of people in a rural community during that time period.