Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 43-51 |
| Gender | Female, 100% |
| Calm | 48.1% |
| Happy | 43.5% |
| Surprised | 6.6% |
| Fear | 6.1% |
| Sad | 3.4% |
| Disgusted | 2.4% |
| Angry | 0.8% |
| Confused | 0.5% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Adult | 99.5% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 96.1% |
| streetview architecture | 2.4% |
| people portraits | 1.1% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a man and a woman standing in front of a building | 88.1% |
| a man and a woman standing next to a building | 88% |
| a man and woman standing next to a building | 85% |

Clarifai

created by general-english-image-caption-blip on 2025-05-04

| Caption | Confidence |
| --- | --- |
| a photograph of a man and a woman standing on a porch | -100% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-04

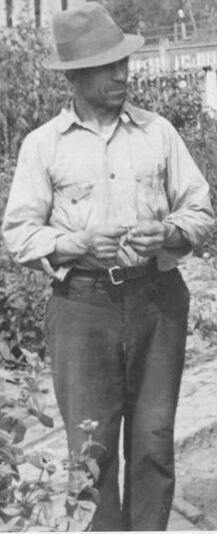

This is a black and white photograph capturing a family scene, likely from the early to mid-20th century.

Main Subjects:

- Man: A man wearing a hat, a light-colored button-down shirt, and dark trousers stands in the foreground. He appears to be holding something in his hands.

- Woman and Child: A woman and a young girl are standing on the porch of a house. The woman is wearing a light-colored dress, and the little girl is also wearing a light-colored dress and socks.

Setting and Composition:

- The scene takes place outdoors, likely in a rural or suburban setting.

- The photograph is framed with the man in the foreground and the woman and child on the porch behind him.

- The man appears to be in a garden, and there is a path behind him.

- The house has a simple architectural style.

- There are utility poles and lines visible in the background.

- A sign is hanging on the porch.

- The composition suggests a snapshot of everyday life.

Overall Impression:

The image has a vintage quality, suggesting a sense of time and place. The scene has a familial intimacy.

Created by gemini-2.0-flash on 2025-05-04

Overall Impression:

The image is a black and white photograph featuring a family in an outdoor setting. It seems to be from an earlier era, judging by the clothing and overall style.

Detailed Description:

Main Figures: A man stands in the foreground on the left side, wearing a hat, button-down shirt, and pants. He appears to be holding something in his hands. On the right, a woman and a young girl are standing on the steps of a house. The girl is wearing a dress, and the woman stands behind her.

Setting: The scene seems to be a yard or garden area. There are plants and what appears to be a garden path. In the background, there's a house with a white picket fence. The house where the woman and the young girl are standing is a simple wooden one with a porch.

Additional Details: There's a bag hanging near the doorway with writing on it. Also, some objects like buckets and a stool are on the porch.

Overall Mood:

The photograph has a simple, everyday feel. It captures a moment in the lives of this family, and the setting evokes a sense of rural or small-town life.