Machine Generated Data

Face analysis

AWS Rekognition

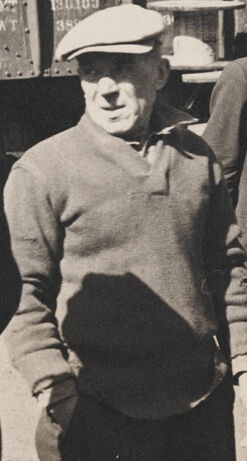

| Attribute | Value |
|---|---|
| Age | 27-44 |
| Gender | Female, 51.9% |
| Calm | 53% |
| Sad | 45.7% |
| Confused | 45.2% |
| Happy | 45.2% |
| Disgusted | 45.2% |
| Angry | 45.3% |
| Surprised | 45.3% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 99.5% |

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 83.6% |
| streetview architecture | 7.7% |
| paintings art | 7.4% |
| nature landscape | 0.8% |
| events parties | 0.3% |
| pets animals | 0.1% |

Captions

Microsoft

Created by unknown on 2018-03-23

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 96.4% |
| a group of people posing for the camera | 96.3% |
| a group of people standing outside of a building | 96.2% |

Clarifai

created by general-english-image-caption-blip on 2025-05-11

| Caption | Confidence |
|---|---|
| a photograph of a group of men standing around a train | -100% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-30

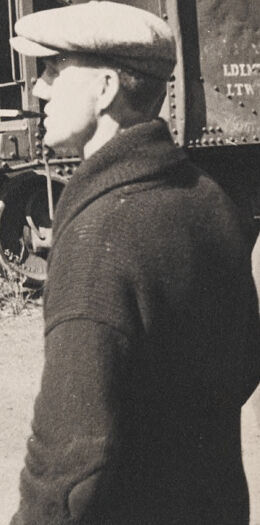

The image appears to depict a group of people standing in front of a train car or shipping container. The container is marked with the initials "P.M.K.Y.", which likely stands for the railroad or shipping company that owns the container. The men in the image are wearing hats and coats, suggesting this is a scene from an earlier time period. The background shows some trees, indicating an outdoor setting. Overall, the image captures a slice of everyday life and transportation from a past era.

Created by claude-3-opus-20240229 on 2024-12-30

The black and white photograph shows a group of men standing in front of an old train car labeled "P.M.K.N.Y." The men are dressed in early 20th century clothing including flat caps, suit jackets, and overcoats. They appear to be workers or employees associated with the train, which looks to be used for transporting goods or materials based on its rusty, industrial appearance. The background shows a dirt or gravel area with some trees, suggesting this was taken at a railyard or depot.

Created by claude-3-5-sonnet-20241022 on 2024-12-30

This is a vintage black and white photograph showing several men standing in front of a railway freight car. The men are dressed in typical early 20th century working class attire, wearing flat caps, sweaters, and suits. Behind them is a large freight car with "P.M.K." lettering visible on its side. The image appears to be taken at a railway yard or loading area, and has the characteristic look of photographs from the 1920s or 1930s. The freight car appears to be constructed of riveted metal panels, typical of railroad equipment from that era.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-26

The image is a black-and-white photograph of four men standing in front of a train car. The men are dressed in casual attire, with three wearing hats and one holding a bottle. They appear to be standing on a dirt road or path, with trees visible in the background.

The train car behind them has the letters "P.M.K." and "Y." painted on it, along with some other illegible text. The overall atmosphere of the image suggests that it was taken during the early 20th century, possibly in the 1920s or 1930s.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-26

The image is a black-and-white photograph of four men standing in front of a train car.

The men are all wearing hats, with the man on the left wearing a flat cap, the man in the middle wearing a newsboy cap, the man in the middle right wearing a fedora, and the man on the far right wearing a baseball cap. The man on the left is wearing a dark jacket and sweater, the man in the middle is wearing a light-colored sweater, the man in the middle right is wearing a dark suit and tie, and the man on the far right is wearing a light-colored shirt and dark pants.

In the background, there is a train car with the letters "P.M.K." visible on the side. The train car appears to be old and rusty, with a large wheel visible on the left side. The ground around the train car is dirt or gravel, and there are trees visible in the distance.

The overall atmosphere of the image suggests that it was taken in the early 20th century, possibly during the 1920s or 1930s. The men's clothing and hairstyles are consistent with this time period, and the style of the train car and the background suggest a rural or small-town setting.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-26

The black-and-white image shows a group of men standing in front of a train car. The train car is black and has the words "P.M.K.Y" on it. The men are wearing hats, and some are wearing coats. The man in the middle is wearing a hat and a sweater. The man on the right is wearing a suit and tie. The man on the left is wearing a hat and a coat.

Created by amazon.nova-lite-v1:0 on 2025-05-26

A black and white photo shows a group of men standing in front of a freight train. They are wearing hats, long-sleeved shirts, and pants. The train has the letters "P.M.K.Y." on its side. The man in the middle is wearing a watch, and the man on the right is holding a cup. The ground is covered in dirt and gravel. There are trees in the background.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-04-30

The black and white photo depicts a group of men standing near a train car. The men are dressed in the style of the mid-20th century, wearing hats, sweaters, suits, and overcoats. The train car is a large, metal boxcar with visible markings including "P.M.K.Y." and the number "626". The background shows some trees and a hint of the sky, indicating the photo was taken outdoors. The overall impression is one of a moment frozen in time from a bygone era.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-28

The image is a black-and-white photograph depicting a group of men standing near a railroad car. The railroad car has the initials "P. M. K. Y." and the number "620" painted on its side. The men are dressed in early 20th-century attire, including flat caps, suits, and overcoats. One man in the center is holding a pipe in his mouth, while another man on the right appears to be holding a piece of paper or a document. The setting appears to be outdoors, possibly at a rail yard or a similar industrial location, as there are other railroad cars and equipment visible in the background. The overall atmosphere suggests a historical context, likely related to railroad work or transportation during that era.