Machine Generated Data

Tags

Amazon

created on 2023-10-07

| Indoors | 99.6 | |

|

| ||

| Restaurant | 99.6 | |

|

| ||

| Adult | 97.9 | |

|

| ||

| Male | 97.9 | |

|

| ||

| Man | 97.9 | |

|

| ||

| Person | 97.9 | |

|

| ||

| Architecture | 97.1 | |

|

| ||

| Dining Room | 97.1 | |

|

| ||

| Dining Table | 97.1 | |

|

| ||

| Furniture | 97.1 | |

|

| ||

| Room | 97.1 | |

|

| ||

| Table | 97.1 | |

|

| ||

| Adult | 96.1 | |

|

| ||

| Male | 96.1 | |

|

| ||

| Man | 96.1 | |

|

| ||

| Person | 96.1 | |

|

| ||

| Person | 96 | |

|

| ||

| Cafeteria | 95.7 | |

|

| ||

| Person | 95.6 | |

|

| ||

| Person | 95.2 | |

|

| ||

| Person | 94.4 | |

|

| ||

| Adult | 92.7 | |

|

| ||

| Male | 92.7 | |

|

| ||

| Man | 92.7 | |

|

| ||

| Person | 92.7 | |

|

| ||

| Adult | 91.5 | |

|

| ||

| Male | 91.5 | |

|

| ||

| Man | 91.5 | |

|

| ||

| Person | 91.5 | |

|

| ||

| People | 89.6 | |

|

| ||

| Factory | 87.9 | |

|

| ||

| Building | 86.5 | |

|

| ||

| Person | 86.3 | |

|

| ||

| Hospital | 84.3 | |

|

| ||

| Head | 74.7 | |

|

| ||

| Face | 67.9 | |

|

| ||

| Barbershop | 66.5 | |

|

| ||

| Person | 63.8 | |

|

| ||

| Adult | 63.5 | |

|

| ||

| Male | 63.5 | |

|

| ||

| Man | 63.5 | |

|

| ||

| Person | 63.5 | |

|

| ||

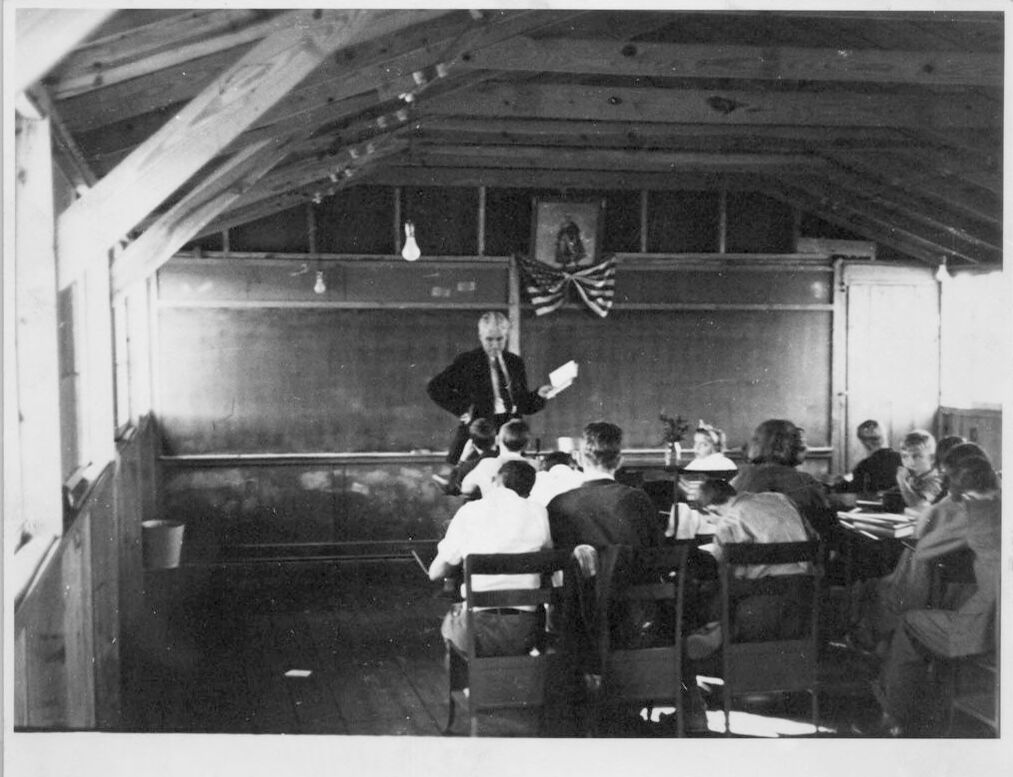

| Classroom | 56.9 | |

|

| ||

| School | 56.9 | |

|

| ||

| Accessories | 56.6 | |

|

| ||

| Bag | 56.6 | |

|

| ||

| Handbag | 56.6 | |

|

| ||

| Cafe | 56.6 | |

|

| ||

| Clinic | 56.5 | |

|

| ||

| Operating Theatre | 56.5 | |

|

| ||

| Flag | 56.3 | |

|

| ||

| American Flag | 55.4 | |

|

| ||

| Chair | 55.2 | |

|

| ||

| Manufacturing | 55.2 | |

|

| ||

| Electrical Device | 55.2 | |

|

| ||

| Microphone | 55.2 | |

|

| ||

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-07

| Tag | Confidence (%) |
| --- | --- |
| car | 59.1 |
| model t | 45.5 |
| motor vehicle | 28.7 |
| hut | 28.1 |
| shelter | 22.9 |
| wheeled vehicle | 22.6 |
| structure | 22.1 |
| vehicle | 19.6 |
| industry | 17.9 |
| passenger car | 17.2 |
| man | 16.1 |
| industrial | 15.4 |
| building | 14.8 |
| transportation | 14.3 |
| old | 13.9 |
| television camera | 13.8 |
| work | 13.3 |
| architecture | 13.3 |
| steel | 12.4 |
| business | 12.1 |
| people | 11.7 |
| factory | 11.7 |
| automobile | 11.5 |
| travel | 11.2 |
| television equipment | 11 |
| equipment | 10.8 |
| modern | 10.5 |
| adult | 10.3 |
| drive | 9.4 |
| power | 9.2 |
| male | 9.2 |
| city | 9.1 |
| vintage | 9.1 |
| metal | 8.8 |
| interior | 8.8 |
| engine | 8.6 |
| construction | 8.5 |
| electronic equipment | 8.3 |
| device | 8.2 |
| occupation | 8.2 |
| worker | 8 |
| room | 8 |
| job | 8 |
| urban | 7.9 |
| war | 7.7 |
| window | 7.6 |
| outdoors | 7.5 |
| chair | 7.4 |
| smoke | 7.4 |
| transport | 7.3 |
| antique | 7 |

Google

created on 2018-05-11

| Tag | Confidence (%) |
| --- | --- |
| photograph | 94.8 |
| black and white | 84 |
| snapshot | 81.8 |
| monochrome photography | 62.9 |
| monochrome | 61.9 |
| vehicle | 55 |
| vintage clothing | 50.1 |

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 48-56 |
| Gender | Male, 99.7% |
| Sad | 99.3% |
| Surprised | 29.3% |
| Confused | 8.1% |
| Fear | 6.6% |
| Calm | 5.2% |
| Angry | 1.8% |
| Disgusted | 0.4% |
| Happy | 0.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-28 |
| Gender | Female, 98.5% |
| Calm | 72.5% |
| Sad | 16.1% |
| Fear | 8.2% |
| Surprised | 7.6% |
| Angry | 1.2% |
| Confused | 1.2% |
| Disgusted | 1.1% |
| Happy | 1.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 1-7 |
| Gender | Female, 90.8% |
| Calm | 75.9% |
| Fear | 7.3% |
| Surprised | 7% |
| Sad | 7% |
| Happy | 5.5% |
| Confused | 2.4% |
| Disgusted | 1.3% |
| Angry | 1.3% |

Feature analysis

Amazon

Adult

Male

Man

Person

Building

Handbag

Chair

| Feature | Confidence |
| --- | --- |
| Adult | 97.9% |
| Adult | 96.1% |
| Adult | 92.7% |
| Adult | 91.5% |
| Adult | 63.5% |
| Male | 97.9% |
| Male | 96.1% |
| Male | 92.7% |
| Male | 91.5% |
| Male | 63.5% |
| Man | 97.9% |
| Man | 96.1% |
| Man | 92.7% |
| Man | 91.5% |
| Man | 63.5% |
| Person | 97.9% |
| Person | 96.1% |
| Person | 96% |
| Person | 95.6% |
| Person | 95.2% |
| Person | 94.4% |
| Person | 92.7% |
| Person | 91.5% |
| Person | 86.3% |
| Person | 63.8% |
| Person | 63.5% |
| Building | 86.5% |
| Handbag | 56.6% |
| Chair | 55.2% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 81.9% |
| cars vehicles | 5.7% |
| streetview architecture | 5% |
| food drinks | 4.4% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a group of people standing in a room | 91.5% |
| a group of people standing in front of a building | 81.6% |
| a group of people in a room | 81.5% |