Machine Generated Data

Tags

Amazon

created on 2023-10-06

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| Tag | Confidence |
|---|---|
| building | 25.6 |
| architecture | 23.5 |
| door | 20.1 |
| old | 18.8 |
| window | 17.7 |
| wall | 17.6 |
| structure | 16.3 |
| city | 15.8 |
| shop | 14.4 |
| house | 14.2 |
| barbershop | 13.9 |
| travel | 12.7 |
| ancient | 12.1 |
| device | 12 |
| glass | 11.7 |
| tourism | 11.5 |
| mercantile establishment | 11.5 |
| urban | 11.4 |
| support | 11 |
| history | 10.7 |
| construction | 10.3 |
| stone | 10.1 |
| bridge | 10.1 |
| landmark | 9.9 |
| vintage | 9.9 |
| sky | 9.6 |
| buildings | 9.4 |
| light | 9.4 |
| jamb | 9.3 |
| historic | 9.2 |
| black | 9.1 |
| outside | 8.6 |
| wood | 8.3 |
| street | 8.3 |
| park | 8.2 |
| outdoors | 8.2 |
| office | 8.2 |
| brick | 8.2 |
| home | 8 |
| upright | 7.7 |
| windows | 7.7 |
| grunge | 7.7 |
| place of business | 7.7 |
| roof | 7.6 |
| man | 7.4 |
| exterior | 7.4 |
| gate | 7.4 |
| garage | 7.3 |
| industrial | 7.3 |
| aged | 7.2 |
| dirty | 7.2 |
| structural member | 7.1 |
| adult | 7.1 |
| night | 7.1 |
| male | 7.1 |

Google

created on 2018-05-11

| Tag | Confidence |
|---|---|
| black and white | 82.6 |
| history | 66.8 |
| monochrome photography | 60.3 |
| vintage clothing | 56.8 |
| house | 56.7 |
| shack | 52.6 |
| monochrome | 51.4 |


Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 40-48 |
| Gender | Male, 99.9% |
| Calm | 93.3% |
| Surprised | 6.5% |
| Fear | 6% |
| Sad | 3.1% |
| Confused | 2.2% |
| Angry | 0.5% |
| Happy | 0.3% |
| Disgusted | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 36-44 |
| Gender | Male, 100% |
| Sad | 99.9% |
| Calm | 18.8% |
| Surprised | 6.5% |
| Fear | 6% |
| Confused | 3.5% |
| Disgusted | 0.5% |
| Angry | 0.2% |
| Happy | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-33 |
| Gender | Male, 99.9% |
| Calm | 90% |
| Surprised | 10.3% |
| Fear | 5.9% |
| Sad | 2.8% |
| Confused | 0.6% |
| Happy | 0.4% |
| Disgusted | 0.4% |
| Angry | 0.3% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 40 |
| Gender | Male |


Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 99.6% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
|---|---|
| a black and white photo of a person | 92.9% |
| an old black and white photo of a person | 91.6% |
| black and white photo of a person | 90.9% |

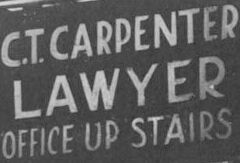

Text analysis

Amazon

STAIRS

OFFICE

OFFICE UP STAIRS

LAWYER

UP

C.T.CARPENTER

C.T. CARPENTER

LAWYER

OFFICE UP STAIRS

LAWYER

OFFICE

UP

STAIRS

C.T.

CARPENTER