Machine Generated Data

Tags

Amazon

created on 2023-10-06

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| Tag | Score |
|---|---|
| newspaper | 37.6 |
| product | 28.2 |
| creation | 22 |
| person | 21.7 |
| man | 21.6 |
| male | 18.4 |
| negative | 18.3 |
| people | 17.8 |
| portrait | 16.2 |
| old | 16 |
| film | 13.1 |
| adult | 13 |
| black | 12.7 |
| city | 12.5 |
| urban | 11.4 |
| dress | 10.8 |
| men | 10.3 |
| women | 10.3 |
| world | 10 |
| vintage | 9.9 |
| fashion | 9.8 |
| art | 9.8 |
| musical instrument | 9.6 |
| comedian | 9.6 |
| building | 9.6 |
| ancient | 9.5 |
| wall | 9.4 |
| photographic paper | 9.4 |
| performer | 9.3 |
| dirty | 9 |
| one | 9 |
| businessman | 8.8 |
| mask | 8.8 |
| cold | 8.6 |
| sculpture | 8.6 |
| room | 8.6 |
| face | 8.5 |
| grunge | 8.5 |
| business | 8.5 |
| street | 8.3 |
| silhouette | 8.3 |
| human | 8.2 |
| religion | 8.1 |
| history | 8 |
| decoration | 8 |
| working | 8 |
| work | 7.8 |
| stone | 7.8 |
| statue | 7.7 |
| violin | 7.7 |
| culture | 7.7 |
| house | 7.5 |
| happy | 7.5 |
| style | 7.4 |
| alone | 7.3 |
| looking | 7.2 |
| stringed instrument | 7.1 |
| architecture | 7 |

Google

created on 2018-05-11

| Tag | Score |
|---|---|
| photograph | 96.1 |
| black and white | 91.7 |
| snapshot | 81.8 |
| photography | 81 |
| monochrome photography | 80.3 |
| vintage clothing | 64.9 |
| monochrome | 64.1 |
| history | 53.7 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

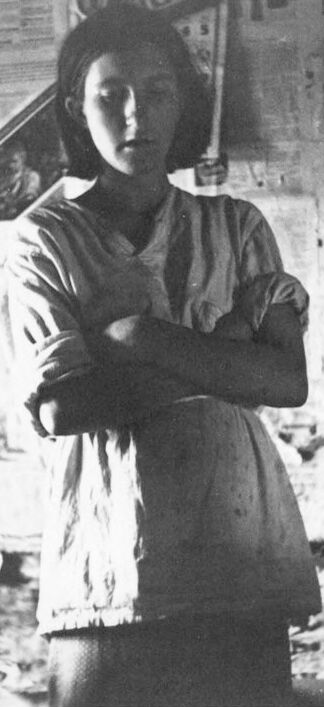

| Attribute | Value |
|---|---|
| Age | 21-29 |
| Gender | Female, 87.5% |
| Calm | 88.8% |
| Fear | 9.4% |
| Surprised | 6.8% |
| Sad | 2.5% |
| Happy | 0.6% |
| Angry | 0.4% |
| Disgusted | 0.3% |
| Confused | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-31 |
| Gender | Female, 97.2% |
| Sad | 100% |
| Calm | 7.6% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Happy | 0.4% |
| Confused | 0.3% |
| Angry | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 12-20 |
| Gender | Male, 94.8% |
| Calm | 90.2% |
| Surprised | 6.4% |
| Fear | 6.1% |
| Sad | 3.7% |
| Disgusted | 3.3% |
| Happy | 0.9% |
| Angry | 0.4% |
| Confused | 0.4% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 46 |
| Gender | Male |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.6% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
|---|---|
| a person standing in front of a store | 68.2% |
| a person standing in front of a building | 68.1% |
| a person standing in front of a store | 57.2% |