Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-52 |
| Gender | Male, 95.3% |
| Sad | 30.7% |
| Disgusted | 5.2% |
| Confused | 1.9% |
| Surprised | 2.8% |
| Calm | 42.9% |
| Angry | 15.3% |
| Happy | 1.2% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Shoe | 100% |

Categories

Imagga

Created on 2018-03-23

| Category | Confidence |
| --- | --- |
| streetview architecture | 74.6% |
| paintings art | 10.8% |
| interior objects | 4.4% |
| pets animals | 4.2% |
| nature landscape | 3.9% |
| beaches seaside | 0.7% |
| cars vehicles | 0.5% |
| food drinks | 0.3% |
| text visuals | 0.2% |
| events parties | 0.2% |
| people portraits | 0.2% |
| sunrises sunsets | 0.1% |

Captions

Microsoft

Created by unknown on 2018-03-23

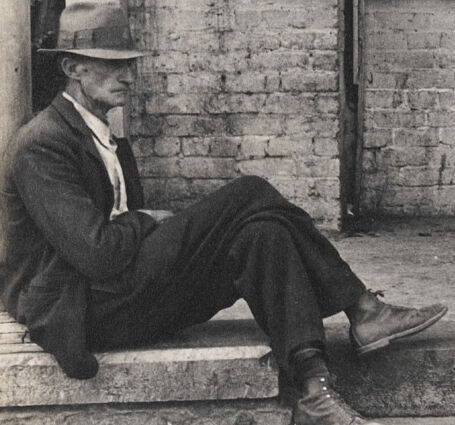

| Caption | Confidence |
| --- | --- |
| a man sitting in front of a building | 83.9% |
| a man sitting on a bench in front of a building | 69.3% |
| a man standing in front of a building | 69.2% |

Salesforce

Created by general-english-image-caption-blip-2 on 2025-06-27

a man and a woman sitting on steps outside of a building

Created by general-english-image-caption-blip on 2025-05-11

a photograph of a man sitting on a step up to a door

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-08

The image appears to depict an outdoor scene featuring two individuals positioned near a brick building. One person is seated on a concrete ledge, dressed in a suit and hat, while the other stands in a doorway wearing overalls and a long-sleeved shirt. The building has a rustic and weathered appearance, with posters or papers affixed to the wall near the doorway. The dark entryway behind the standing figure suggests the interior is unlit or minimally illuminated.

Created by gpt-4o-2024-08-06 on 2025-06-08

The image depicts a scene in front of a brick building, which appears to be an entrance or a porch area. On the left, a person is sitting on the edge of a concrete step, wearing a suit with a tie, a hat, and boots. Their posture is relaxed, with legs crossed at the ankle. On the right, another person is standing in a shadowy doorway with hands in pockets, wearing overalls and boots. The wall behind them features several pieces of paper or posters affixed to the bricks. The setting has a vintage or historical feel, suggested by the clothing style and overall composition.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-25

This is a black and white photograph that appears to be from an earlier era, possibly the early-to-mid 20th century. The image shows two workers at what appears to be a warehouse or industrial building. One worker is sitting on what looks like a concrete step or ledge, wearing a hat and casual work clothes. The other worker is standing in a doorway, wearing overalls and a cardigan sweater. The building has a brick wall with some papers or posters tacked to its wooden siding. The composition creates an interesting contrast between the relaxed posture of the seated figure and the upright stance of the person in the doorway. The photograph has a documentary quality typical of Depression-era photography.

Created by claude-3-haiku-20240307 on 2024-12-31

The image shows two men in a dilapidated urban setting, likely from an earlier time period. One man is seated on a step, appearing to be in a pensive or contemplative pose. The other man is standing in a doorway, wearing overalls and looking directly at the camera. The background is dominated by old brick walls, suggesting this is set in a run-down or impoverished area. The overall mood of the image is somber and reflective.

Created by claude-3-opus-20240229 on 2024-12-31

The black and white image shows two men standing in the doorway of a brick building. One man is sitting on the steps wearing a suit and hat, while the other man is standing in the doorway wearing overalls and an apron. There are posters or signs visible on the brick wall next to the doorway. The building appears old and weathered, suggesting this photograph was taken some time ago, likely in the early to mid 20th century based on the clothing and setting.

Created by claude-3-5-sonnet-20241022 on 2024-12-31

This is a black and white photograph that appears to be from an earlier era, possibly the early-to-mid 20th century. The image shows two workers at what appears to be a warehouse or industrial building. One worker is reclining on some steps in the foreground, wearing a hat and work clothes. The other worker is standing in a doorway, wearing overalls and a cardigan sweater. There are some posters or papers posted on the wooden wall between them. The building has a brick exterior and appears quite industrial in nature. The composition creates an interesting contrast between the relaxed posture of the seated worker and the upright stance of the worker in the doorway.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-04

The image depicts a black-and-white photograph of two men standing outside a building. The man on the left is sitting on a step, wearing a suit and hat, while the man on the right is standing in the doorway, dressed in overalls and a hat. The background features a brick wall with a wooden door and a sign on the wall, suggesting a rural or industrial setting. The overall atmosphere of the image appears to be one of quiet contemplation, with the two men engaged in their own thoughts as they sit or stand outside the building.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-04

This image is a black-and-white photograph of two men standing outside a building. The man on the left is sitting on the steps, wearing a suit and hat, while the man on the right is standing in the doorway, dressed in overalls and a hat. The building appears to be made of brick or stone, with a wooden doorframe and a sign above the door. The overall atmosphere of the image suggests a rural or small-town setting, possibly during the early 20th century.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-03

The image depicts a monochromatic photograph of two men sitting on the steps of a building. The man on the left is sitting on the steps, wearing a hat, a suit, and leather shoes. He has a contemplative expression and is looking to the right. The man standing in the doorway behind him is wearing a hat, a long-sleeve shirt, and overalls. He is holding a cigarette in his right hand and appears to be looking at the man sitting on the steps.

Created by amazon.nova-pro-v1:0 on 2025-06-03

The black-and-white photo shows two men, one sitting on the steps of the building and the other standing at the entrance. The man sitting on the steps is wearing a hat, a suit, and leather shoes. He is looking to his left. The man standing at the entrance is wearing a hat, a shirt, and denim overalls. He is holding a cigarette in his right hand. There are posters on the wall.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-30

Here's a description of the image:

The image is a black and white photograph depicting a scene of two men in a rustic, perhaps rural, setting.

Composition: The composition is divided, with one man seated on a stone step in the foreground and the other man standing in the doorway of a dark, simple building in the background. The lighting suggests a bright day, but the overall tone is somber.

Foreground: A man, likely older, is seated on a stone step. He is wearing a suit, a hat, and boots. He leans against the side of the building, his posture relaxed. His face is lined, and he seems to be deep in thought.

Background: A man stands in the doorway of a simple building. He is wearing overalls and a hat. He is holding a cigarette or similar object. There are a few notices or posters tacked to the wooden side of the building, adding a touch of detail and a hint of the location's purpose.

Atmosphere: The image has a sense of stillness, perhaps reflecting a moment of rest or reflection. It evokes a feeling of the past, both in its style and the subject matter. The overall aesthetic is reminiscent of documentary photography from the early 20th century.

Created by gemini-2.0-flash on 2025-05-30

The black and white photograph shows two men in front of a simple building. On the left, a man wearing a fedora, jacket, and dark pants is sitting on the building's steps. His legs are extended, and he looks off to the side with a contemplative expression.

In the background, through a doorway, stands another man. He is wearing overalls over a long-sleeved shirt and a small hat. He has his hands in his pockets and appears to be watching something outside the frame.

The building is constructed of brick on one side and rough wooden planks on another. Notices are tacked on the wooden section. The ground is paved and appears slightly worn. The overall impression is one of rural simplicity and perhaps economic hardship.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The image is a black-and-white photograph depicting two men outside a building. The building appears to be constructed of brick and wood, and the scene suggests a rustic or rural setting.

On the left, an older man is seated on the edge of a raised platform or porch. He is wearing a suit and a hat, with one leg crossed over the other. He seems to be relaxed, possibly taking a break or waiting for someone.

On the right, a younger man stands in the doorway of the building. He is dressed in overalls and a hat, holding a pipe in his hand. He appears to be looking directly at the camera with a stern expression.

The building has several posters or notices attached to the wall beside the door, indicating that it might be a place of business or a community gathering spot. The overall atmosphere of the photograph suggests a moment captured in time, possibly from the early to mid-20th century, reflecting the daily life and attire of the era.