Machine Generated Data

Face analysis

AWS Rekognition

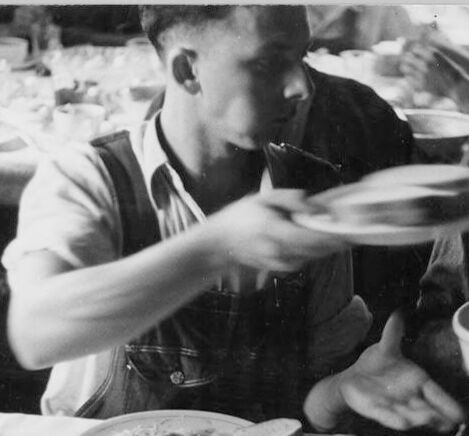

| Attribute | Value |
| --- | --- |
| Age | 43-51 |
| Gender | Male, 99.7% |
| Calm | 78.2% |
| Sad | 25.6% |
| Surprised | 6.4% |
| Fear | 6% |
| Confused | 1.3% |
| Angry | 0.3% |
| Happy | 0.3% |
| Disgusted | 0.2% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Adult | 99% |

Categories

Imagga

Created on 2023-10-06

| Category | Confidence |
| --- | --- |
| food drinks | 98.5% |

Captions

Microsoft

Created by unknown on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a group of people sitting at a table eating food | 75.7% |
| a group of people sitting at a table with food | 75.6% |
| a group of people sitting around a table eating food | 74.8% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-06

a photograph of a man and woman are sitting at a table

Created by general-english-image-caption-blip-2 on 2025-07-06

two men are eating at a table with plates and bowls

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-12

The image shows a community-style meal gathering, where individuals seated at a table are sharing food. Various dishes are placed on the table, including bowls, plates, and cups filled with different types of food such as rice, soup, and what appears to be stew or casserole. Utensils are laid out, and the atmosphere suggests a casual, communal dining experience. The clothing of the people, including overalls and button-up shirts, hints at a likely rural or working-class setting.

Created by gpt-4o-2024-08-06 on 2025-06-12

The image depicts a mealtime scene around a table where multiple people are gathered, likely eating a meal together. The individuals are passing dishes to one another, indicating a communal dining experience. The table is set with several items, including plates with food, bowls, and a cup. The food appears to be a mix of different dishes, with visible items like rice and possibly a stew. The setting is casual, with the individuals wearing informal attire such as plaid shirts and overalls. The atmosphere suggests a shared meal, typical in a family or community setting.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-15

The image shows a group of people eating a meal together at what appears to be a cafeteria or dining hall. The scene is captured in black and white, giving it a gritty, documentary-style feel. The people in the image are focused on their food, with one person in the foreground serving something from a bowl onto a plate. The table is cluttered with various dishes and utensils, suggesting a simple, hearty meal. The overall atmosphere conveys a sense of community and shared experience around the act of eating.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-15

This is a black and white photograph that appears to be from an earlier era, showing people seated at a table during a meal. The image captures the motion of someone serving or passing food, with bowls and plates spread across the table. The diners appear to be wearing what looks like work clothes or overalls. The setting has a casual, possibly industrial or working-class atmosphere, suggesting it might be a workers' dining hall or cafeteria. There's a sense of movement in the image, particularly with the blurred motion of hands serving food, which gives the photograph a dynamic, candid quality.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-04

The image is a black-and-white photograph of two men sitting at a table, eating from large bowls.

The man on the left is wearing a white shirt and denim overalls. He is holding a plate in his left hand and a spoon in his right hand, with a large bowl of food in front of him. The man on the right is wearing a dark-colored shirt and denim overalls. He is holding a large bowl of food in his left hand and a spoon in his right hand. There are several other plates and bowls of food on the table in front of them.

In the background, there are other people sitting at tables, but they are not clearly visible. The overall atmosphere of the image suggests a casual, informal meal or gathering.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-04

The image is a black-and-white photograph of two men sitting at a table, engaged in eating. The man on the left is wearing overalls and a white shirt, while the man on the right is also wearing overalls but with a dark-colored shirt.

The table is set with various dishes, including plates, bowls, cups, and utensils. The men are focused on their meal, with the man on the left holding a plate and the man on the right holding a bowl.

In the background, there are other people sitting at tables, although they are not clearly visible due to the focus being on the two main subjects. The overall atmosphere suggests a casual dining setting, possibly in a communal or social context.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-03

The image is a black-and-white photograph of a dining scene, possibly from a historical context. It depicts several individuals seated around a table, engaged in eating. The focus is on two men in the foreground. One man, wearing a shirt and suspenders, is holding a plate with food, and another man, also in suspenders, holds a bowl of food. The table is laden with various dishes, including bowls, plates, and cups, suggesting a meal in progress. The atmosphere appears casual and communal, with other people visible in the background, also eating.

Created by amazon.nova-pro-v1:0 on 2025-06-03

The black-and-white image features two men sitting at a dining table with various dishes on it. The man on the left is holding a plate with a fork and a knife. He is looking at the man on the right, who is holding a bowl with a spoon. The man on the right is looking at the man on the left. Behind them, there are other people sitting at the dining table.

Google Gemini

Created by gemini-2.0-flash on 2025-05-07

The black-and-white photograph depicts two men in denim overalls at a table laden with food.

One man, on the left, is in the process of passing a stack of plates. He is wearing overalls over a light-colored shirt, and his head is slightly turned as he concentrates on the task at hand. His left hand is outstretched, guiding the plates.

The other man, to the right, is holding a large bowl filled with food and a spoon. He is wearing a denim shirt, and his gaze is directed downward towards the bowl.

The table is covered with a white tablecloth and is cluttered with various plates, bowls, cups, glasses, and cutlery. The food on the table includes what appears to be stew, bread, beans, coffee and other assorted dishes. The setting suggests a communal or family meal, with the focus on sharing and abundance.

The image is well-lit, but the subjects have deep shadows. The texture is rough, with visible grain.

Created by gemini-2.0-flash-lite on 2025-05-07

Certainly! Here's a description of the image:

Overall Impression:

The photograph is a black and white, snapshot-style image, likely from a past era (judging by the clothing and the film grain). It depicts a group of people eating a meal. The setting is informal, possibly a communal dining area.

Key Elements:

- People: Two men are in the foreground. One on the left is holding a plate of food. The other man on the right is holding a bowl. The other people are on the background.

- Table: A white tablecloth covers the table. It is laden with plates of food, bowls, cups, and various serving dishes.

- Food: There's a variety of food visible: plates of what appear to be cooked meats or vegetables, bowls of what looks like soup or stew, and perhaps bread or other sides.

- Atmosphere: The image suggests a moment of communal sharing, possibly a meal shared among workers or a group of people.

Overall, the photograph provides a glimpse into a past time period, showcasing a simple meal shared among people.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

This black-and-white photograph depicts a group of people seated at a table, enjoying a meal together. The scene appears to be from an earlier era, possibly mid-20th century, based on the clothing styles and the overall ambiance.

In the foreground, there are two men prominently featured. The man on the left is wearing a light-colored shirt and overalls, and he is in the process of serving himself food from a large bowl. The man on the right, wearing a dark jacket, is looking towards the camera with a cigarette in his mouth.

The table is laden with various dishes and utensils, including plates of food, bowls, cups, and spoons. The meal seems to be hearty, with a variety of foods visible on the plates and in the bowls. The background shows other diners, suggesting that this might be a communal dining setting, such as a mess hall or a family gathering.

The overall mood of the image is casual and communal, capturing a moment of shared sustenance and camaraderie.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-11

This black-and-white photograph depicts a group of individuals seated around a table, enjoying a meal together. The setting appears to be informal, possibly a communal dining area or a large dining hall where people share food. The table is set with various dishes, bowls, and cups, suggesting a meal shared among friends or colleagues.

In the foreground, one person, dressed in a short-sleeved shirt and suspenders, is holding a plate and appears to be passing food to another person who is holding a bowl. The person receiving the food is wearing a long-sleeved shirt and seems to be reaching out to accept the dish. The scene conveys a sense of camaraderie and mutual sharing.

The background shows other individuals also engaged in eating, with some standing and others seated. The overall atmosphere suggests a communal and relaxed dining environment.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-11

This black-and-white photograph captures a scene of men gathered around a table, seemingly eating a meal. The table is covered with various dishes, including bowls with spoons, plates with food, and cups. One man in the foreground, wearing a light-colored shirt and a dark vest, is passing a plate to another man seated across the table. The man receiving the plate is wearing a dark jacket and appears to be holding a bowl with a spoon. The background is slightly blurred but shows other people and more food on the table, suggesting a communal dining setting. The overall atmosphere appears to be casual and focused on the act of eating. The photograph has a vintage look, likely from the mid-20th century.