Machine Generated Data

Tags

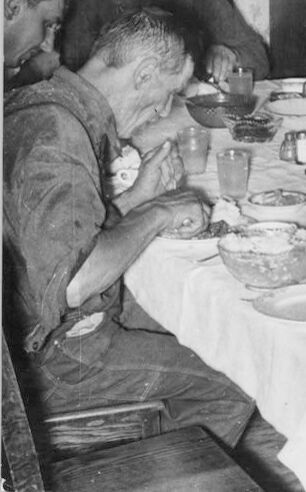

Amazon

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| Architecture | 100 |
| Building | 100 |
| Dining Room | 100 |
| Dining Table | 100 |
| Furniture | 100 |
| Indoors | 100 |
| Room | 100 |
| Table | 100 |
| Restaurant | 100 |
| Cafeteria | 99.8 |
| Food | 99.4 |
| Meal | 99.4 |
| Adult | 98.9 |
| Male | 98.9 |
| Man | 98.9 |
| Person | 98.9 |
| Adult | 98.8 |
| Male | 98.8 |
| Man | 98.8 |
| Person | 98.8 |
| Adult | 98.4 |
| Male | 98.4 |
| Man | 98.4 |
| Person | 98.4 |
| Adult | 98 |
| Male | 98 |
| Man | 98 |
| Person | 98 |
| Person | 97.4 |
| Adult | 97.1 |
| Male | 97.1 |
| Man | 97.1 |
| Person | 97.1 |
| Person | 95.4 |
| Food Court | 92.7 |
| Face | 88.2 |
| Head | 88.2 |
| Dinner | 79.9 |
| Person | 79.8 |
| Dish | 65.7 |
| People | 56.1 |
| Cafe | 55.1 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

Google

created on 2018-05-11

| Tag | Confidence |
| --- | --- |
| photograph | 95.2 |
| black and white | 89.1 |
| snapshot | 81.8 |
| monochrome photography | 75.1 |
| monochrome | 69 |
| human behavior | 67.9 |
| vintage clothing | 54.2 |

Microsoft

created on 2018-05-11

| Tag | Confidence |
| --- | --- |
| person | 99.9 |
| table | 97.5 |
| sitting | 97.3 |
| group | 91.7 |
| people | 82.3 |
| restaurant | 38.9 |
| meal | 33.5 |
| family | 30.5 |
| dining table | 8.8 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-28 |
| Gender | Male, 99.9% |
| Calm | 100% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.1% |
| Angry | 0% |
| Confused | 0% |
| Disgusted | 0% |
| Happy | 0% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 31-41 |
| Gender | Male, 99.8% |
| Calm | 56% |
| Confused | 22.9% |
| Sad | 11.7% |
| Surprised | 7.9% |
| Fear | 6.3% |
| Disgusted | 1.9% |
| Angry | 1.7% |
| Happy | 0.9% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-36 |
| Gender | Male, 97.9% |
| Calm | 81.2% |
| Sad | 9.5% |
| Surprised | 6.9% |
| Fear | 6.5% |
| Confused | 2.9% |
| Happy | 1.1% |
| Disgusted | 0.6% |
| Angry | 0.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-33 |
| Gender | Male, 100% |
| Calm | 91.2% |
| Surprised | 7.7% |
| Fear | 6% |
| Confused | 3.9% |
| Sad | 2.3% |
| Angry | 0.6% |
| Disgusted | 0.5% |
| Happy | 0.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 21-29 |
| Gender | Male, 59.8% |
| Calm | 77.4% |
| Angry | 13.8% |
| Surprised | 7.3% |
| Fear | 7.2% |
| Sad | 2.3% |
| Happy | 2.1% |
| Confused | 0.6% |
| Disgusted | 0.4% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 44 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 26 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 79.1% |
| interior objects | 10.2% |
| events parties | 4.2% |
| paintings art | 2.4% |
| pets animals | 1.4% |
| food drinks | 1.4% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a group of people sitting at a table | 98.8% |
| a group of people sitting around a table | 98.7% |
| a group of people sitting at a table in a restaurant | 98.3% |