Machine Generated Data

Tags

Amazon

created on 2023-10-05

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-05

| Tag | Score |
| --- | --- |
| old | 20.2 |
| shopping cart | 17.1 |
| wheeled vehicle | 17.1 |
| building | 16.8 |
| device | 15.3 |
| handcart | 14.2 |
| dirty | 12.6 |
| machine | 12.4 |
| container | 12 |
| equipment | 11.9 |
| architecture | 11.7 |
| cell | 11.3 |
| wall | 11.1 |
| industry | 11.1 |
| city | 10.8 |
| work | 10.5 |
| outdoors | 10.4 |
| gas pump | 10.2 |
| forklift | 10.1 |
| locker | 10.1 |
| window | 10.1 |
| industrial | 10 |
| door | 9.9 |
| pay-phone | 9.9 |
| urban | 9.6 |
| construction | 9.4 |
| light | 9.4 |
| water | 9.3 |
| pump | 9.3 |
| street | 9.2 |
| house | 9.2 |
| outdoor | 9.2 |
| vehicle | 9.1 |
| vintage | 9.1 |
| worker | 8.9 |
| metal | 8.8 |
| factory | 8.7 |
| grunge | 8.5 |
| musical instrument | 8.5 |
| black | 8.4 |
| fastener | 8.3 |
| safety | 8.3 |
| warehouse | 8.2 |
| tool | 8 |
| chair | 8 |
| telephone | 7.8 |
| scene | 7.8 |
| empty | 7.7 |
| outside | 7.7 |
| restraint | 7.2 |
| history | 7.2 |
| steel | 7.1 |
| working | 7.1 |
| travel | 7 |

Google

created on 2018-05-11

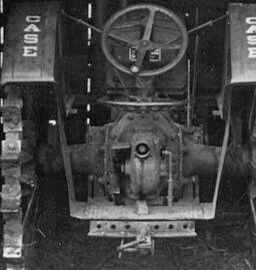

| Tag | Score |
| --- | --- |
| black and white | 88.6 |
| monochrome photography | 69.5 |
| shed | 65.8 |
| monochrome | 65.4 |
| shack | 59.4 |

Color Analysis

Feature analysis

Categories

Imagga

| Category | Score |
| --- | --- |
| interior objects | 79.3% |
| streetview architecture | 13.9% |
| cars vehicles | 5.7% |

Captions

Microsoft

created on 2018-05-11

| Caption | Score |
| --- | --- |
| a black sign with white text on a wooden door | 66% |
| a black sign with white text on a wooden surface | 62.9% |
| a black and white photo of a wooden door | 62.8% |

Text analysis

Amazon

CASE