Machine-Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 6-12 |
| Gender | Male, 99.8% |
| Disgusted | 47.4% |
| Angry | 32.9% |
| Sad | 13.8% |
| Surprised | 6.5% |
| Fear | 6.3% |
| Confused | 2.4% |
| Calm | 1.6% |
| Happy | 0.4% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Adult | 99.1% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 92.4% |
| streetview architecture | 3% |
| beaches seaside | 1.7% |
| nature landscape | 1.5% |

Captions

Microsoft

Created by unknown on 2018-05-11

| Caption | Confidence |
|---|---|
| a vintage photo of a man | 91.3% |
| a black and white photo of a man | 85.5% |
| an old photo of a man | 85.4% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-05

| Caption | Confidence |
|---|---|
| a photograph of a group of men in uniforms and hats are sitting on a truck | -100% |

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-31

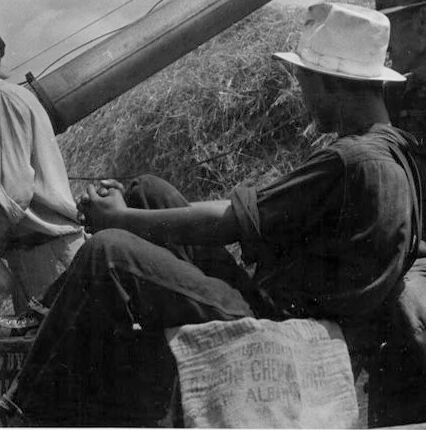

The black-and-white photo shows a group of men on a farm. They are wearing hats and uniforms. Some of them are sitting on a machine, while others are standing. One of them is holding a tool. The machine has the words "Red River Special" written on it. The machine is on top of what seems to be a truck. There is a sack of grain on the truck.

Created by amazon.nova-lite-v1:0 on 2025-05-31

The image is a black-and-white photograph of a group of men working on a farm. They are all wearing hats and work clothes, and they are sitting on a tractor. The tractor has a long, metal arm that is attached to it. The men are looking in different directions, and one of them is holding a piece of paper. The tractor is surrounded by hay, and there is a wooden box in front of it.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-06

Certainly! Here's a description of the image:

Overview:

The image is a black and white photograph showing a group of men, presumably working on a farm. They appear to be harvesting or processing crops. The scene suggests a time in history, likely the early to mid-20th century, judging by the clothing and equipment.

Key Elements and Details:

- People: There are several men in the image, of varying ages. Many are wearing work clothes, including overalls, shirts, and hats (straw hats).

- Machinery: A large piece of agricultural equipment is visible, featuring a long pipe that appears to be labeled "Red River Special." The apparatus is likely used for moving or processing the crop.

- Setting: The background suggests a rural, agricultural setting, with a haystack. The cloudy sky hints at the weather conditions during the work.

- Activity: The men are engaged in some activity related to the crop. They are moving around the equipment.

General Impression:

The photograph captures the hard work and dedication of farmers in a historical context. The composition and subject matter give a sense of rural life and agricultural labor.

Created by gemini-2.0-flash on 2025-05-06

Here's a description of the image:

This black and white photograph captures a group of farmworkers in the midst of harvest. The scene is dominated by a "Red River Special" piece of agricultural equipment.

There are five people visible in the frame. One appears to be a young boy, wearing a straw hat and overalls, holding a long tool. Another farmworker is seen feeding crops into the machine. To the right of them, another person with a hat tends to crops, wearing a loose-fitting, button-down shirt. In the foreground, two men wearing hats and work clothes are seated on what appears to be a filled sack, observing the work. The sky above is cloudy. The work is physical and in the field. The equipment suggests a period earlier in the 20th century.