Machine Generated Data

Tags

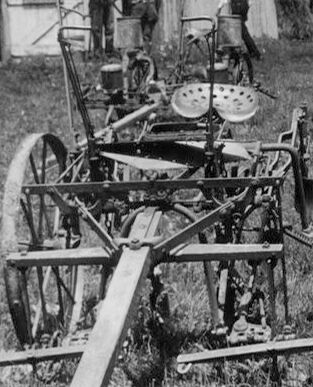

Amazon

created on 2023-10-06

| Tag | Confidence |
|---|---|
| Clothing | 99.9 |
| Hat | 99.9 |
| Outdoors | 99.8 |
| Nature | 99.7 |
| Countryside | 99.4 |
| Machine | 99.4 |
| Spoke | 99.4 |
| Adult | 99 |
| Male | 99 |
| Man | 99 |
| Person | 99 |
| Adult | 99 |
| Male | 99 |
| Man | 99 |
| Person | 99 |
| Person | 98.4 |
| Rural | 97.7 |
| Person | 97.2 |
| Adult | 96.8 |
| Male | 96.8 |
| Man | 96.8 |
| Person | 96.8 |
| Person | 96.6 |
| Person | 96 |
| Wheel | 95.2 |
| Wheel | 94.7 |
| Person | 94.2 |
| Farm | 93.6 |
| Person | 93.2 |
| Person | 91 |
| Person | 87.3 |
| Helmet | 83.8 |
| Car | 78.2 |
| Transportation | 78.2 |
| Vehicle | 78.2 |
| Wheel | 74.2 |
| Wheel | 73.5 |
| Person | 67.8 |
| Bicycle | 65.8 |
| Bicycle | 64.1 |
| Wheel | 62.4 |
| Wheel | 62.3 |
| Farm Plow | 61.7 |
| People | 56.9 |
| Architecture | 56.5 |
| Building | 56.5 |
| Factory | 56.5 |
| Sun Hat | 56.1 |
| Agriculture | 55.8 |
| Field | 55.8 |
| Manufacturing | 55.8 |
| Garden | 55.4 |
| Gardener | 55.4 |
| Gardening | 55.4 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| Tag | Confidence |
|---|---|
| stretcher | 17.1 |
| old | 16 |
| man | 14.8 |
| litter | 13.8 |
| outdoor | 13 |
| conveyance | 12.9 |
| people | 12.8 |
| brass | 11.8 |
| history | 11.6 |
| military | 11.6 |
| vintage | 11.6 |
| wind instrument | 11.3 |
| travel | 11.3 |
| adult | 11 |
| uniform | 11 |
| danger | 10.9 |
| city | 10.8 |
| male | 10.6 |
| weapon | 9.9 |
| outdoors | 9.7 |
| machine | 9.6 |
| building | 9.6 |
| war | 9.6 |
| sax | 9.6 |
| musical instrument | 9.3 |
| tree | 9.2 |
| person | 9.1 |
| protection | 9.1 |
| religion | 9 |
| transportation | 9 |
| soldier | 8.8 |
| statue | 8.7 |
| architecture | 8.6 |
| men | 8.6 |
| grunge | 8.5 |
| clothing | 8.5 |
| vehicle | 8.5 |
| house | 8.4 |
| factory | 8 |
| boy | 7.8 |
| trombone | 7.8 |
| device | 7.6 |
| power | 7.6 |
| gun | 7.5 |
| street | 7.4 |
| rifle | 7.4 |
| historic | 7.3 |
| tool | 7.3 |
| black | 7.2 |
| art | 7.2 |
| day | 7.1 |
| rural | 7 |
| wooden | 7 |

Google

created on 2018-05-11

| Tag | Confidence |
|---|---|
| motor vehicle | 92.1 |
| black and white | 84.9 |
| monochrome photography | 63.9 |
| vehicle | 62.8 |
| vintage clothing | 59.3 |
| monochrome | 53.9 |
| stock photography | 53.8 |

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 28-38 |
| Gender | Male, 100% |
| Calm | 99.8% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0% |
| Disgusted | 0% |
| Confused | 0% |
| Happy | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 16-24 |
| Gender | Male, 99.2% |
| Sad | 38.1% |
| Calm | 32.5% |
| Angry | 29.4% |
| Happy | 7.2% |
| Surprised | 7% |
| Fear | 6.6% |
| Confused | 3% |
| Disgusted | 1.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 12-20 |
| Gender | Male, 77% |
| Sad | 44.3% |
| Angry | 36.6% |
| Calm | 21.2% |
| Fear | 7.5% |
| Surprised | 6.6% |
| Happy | 5.2% |
| Confused | 5% |
| Disgusted | 2.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 29-39 |
| Gender | Male, 62.5% |
| Fear | 61.1% |
| Sad | 23% |
| Happy | 13.8% |
| Calm | 9% |
| Surprised | 7.8% |
| Disgusted | 3.4% |
| Angry | 3.3% |
| Confused | 1.5% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 46 |
| Gender | Male |

Feature analysis

Amazon


| Feature | Confidence |
|---|---|
| Adult | 99% |
| Adult | 99% |
| Adult | 96.8% |
| Male | 99% |
| Male | 99% |
| Male | 96.8% |
| Man | 99% |
| Man | 99% |
| Man | 96.8% |
| Person | 99% |
| Person | 99% |
| Person | 98.4% |
| Person | 97.2% |
| Person | 96.8% |
| Person | 96.6% |
| Person | 96% |
| Person | 94.2% |
| Person | 93.2% |
| Person | 91% |
| Person | 87.3% |
| Person | 67.8% |
| Wheel | 95.2% |
| Wheel | 94.7% |
| Wheel | 74.2% |
| Wheel | 73.5% |
| Wheel | 62.4% |
| Wheel | 62.3% |
| Helmet | 83.8% |
| Car | 78.2% |
| Bicycle | 65.8% |
| Bicycle | 64.1% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 79.6% |
| streetview architecture | 12.9% |
| nature landscape | 5% |
| beaches seaside | 1.8% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
|---|---|
| an old photo of a group of people posing for the camera | 92.3% |
| a group of people posing for a photo | 92.2% |
| an old photo of a group of people posing for a picture | 92.1% |