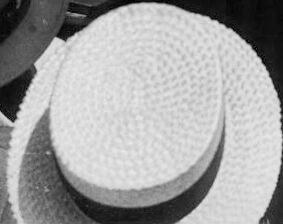

Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence |
|---|---|
| Clothing | 100 |
| Sun Hat | 100 |
| Hat | 97.8 |
| Adult | 97.7 |
| Male | 97.7 |
| Man | 97.7 |
| Person | 97.7 |
| Adult | 97.3 |
| Male | 97.3 |
| Man | 97.3 |
| Person | 97.3 |
| Adult | 97.3 |
| Male | 97.3 |
| Man | 97.3 |
| Person | 97.3 |
| Adult | 96.2 |
| Male | 96.2 |
| Man | 96.2 |
| Person | 96.2 |
| Hat | 95.6 |
| Person | 95.5 |
| Hat | 94.7 |
| Person | 94.5 |
| Hat | 94.4 |
| Adult | 93.9 |
| Person | 93.9 |
| Female | 93.9 |
| Woman | 93.9 |
| Adult | 93.6 |
| Male | 93.6 |
| Man | 93.6 |
| Person | 93.6 |
| Person | 91.2 |
| Hat | 89.2 |
| Hat | 87.7 |
| Face | 86 |
| Head | 86 |
| Hat | 84.1 |
| Adult | 79.3 |
| Male | 79.3 |
| Man | 79.3 |
| Person | 79.3 |
| Person | 72 |
| Hat | 65.1 |
| Person | 64.5 |
| Hat | 57.4 |
| Cowboy Hat | 55.2 |
| Cap | 55.1 |

Clarifai

created on 2018-05-11

| Tag | Confidence |
|---|---|
| people | 100 |
| group | 99.7 |
| group together | 99.6 |
| many | 99.6 |
| veil | 99.1 |
| adult | 99.1 |
| lid | 98.5 |
| man | 97.2 |
| several | 96.8 |
| wear | 96.3 |
| woman | 95.1 |
| fedora | 94 |
| watercraft | 93.7 |
| recreation | 93.6 |
| vehicle | 92.5 |
| transportation system | 92.4 |
| outfit | 91.3 |
| percussion instrument | 88.6 |
| music | 88.5 |
| one | 88.4 |

Imagga

created on 2023-10-06

| Tag | Confidence |
|---|---|
| table | 29 |
| restaurant | 20.6 |
| food | 17.1 |
| seller | 17 |
| dinner | 16.8 |
| wedding | 15.6 |
| people | 15.6 |
| setting | 15.4 |
| hat | 14.6 |
| celebration | 14.3 |
| cup | 14.1 |
| meal | 13.9 |
| party | 13.7 |
| plate | 13.4 |
| container | 13.3 |
| dining | 13.3 |
| eat | 12.6 |
| drink | 12.5 |
| interior | 12.4 |
| chair | 11.7 |
| reception | 11.7 |
| napkin | 11.7 |
| male | 11.6 |
| lunch | 11.3 |
| banquet | 11.1 |
| decoration | 11 |
| glass | 11 |
| man | 10.8 |
| vessel | 10.7 |
| home | 10.4 |
| person | 10.4 |
| men | 10.3 |
| luxury | 10.3 |
| women | 10.3 |
| coffee | 10.2 |
| happy | 10 |
| kitchen | 9.8 |
| adult | 9.7 |
| fork | 9.7 |
| indoors | 9.7 |
| breakfast | 9.4 |
| flower | 9.2 |
| tea | 8.9 |
| decor | 8.8 |
| child | 8.8 |
| porcelain | 8.7 |
| clothing | 8.7 |
| serving | 8.7 |
| lifestyle | 8.7 |
| china | 8.6 |
| happiness | 8.6 |
| cake | 8.5 |
| rose | 8.4 |
| event | 8.3 |
| room | 8.3 |
| groom | 8.3 |
| dish | 8.3 |
| dessert | 8.2 |
| cuisine | 8 |
| business | 7.9 |
| flowers | 7.8 |
| sitting | 7.7 |
| eating | 7.6 |
| house | 7.5 |
| place | 7.4 |
| delicious | 7.4 |
| holding | 7.4 |
| smiling | 7.2 |
| sweet | 7.1 |
| supper | 7 |

Google

created on 2018-05-11

| Tag | Confidence |
|---|---|
| black and white | 91.5 |
| monochrome photography | 83.3 |
| headgear | 81.4 |
| monochrome | 71.7 |
| hat | 60 |
| human behavior | 53.7 |


Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 37-45 |
| Gender | Female, 98.8% |
| Calm | 25.5% |
| Happy | 24% |
| Disgusted | 21.3% |
| Confused | 12.7% |
| Sad | 7.3% |
| Surprised | 6.8% |
| Fear | 6.7% |
| Angry | 4.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 29-39 |
| Gender | Female, 83.9% |
| Calm | 71.3% |
| Confused | 15.9% |
| Surprised | 14.5% |
| Fear | 5.9% |
| Sad | 2.3% |
| Angry | 0.9% |
| Happy | 0.2% |
| Disgusted | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 33-41 |
| Gender | Male, 99.9% |
| Calm | 99.5% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 0.2% |
| Happy | 0% |
| Angry | 0% |
| Disgusted | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 13-21 |
| Gender | Male, 91.7% |
| Calm | 72% |
| Sad | 16.5% |
| Happy | 10.7% |
| Surprised | 6.4% |
| Fear | 6% |
| Confused | 0.6% |
| Angry | 0.5% |
| Disgusted | 0.3% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 51 |
| Gender | Male |

Feature analysis

Amazon

Hat

Adult

Male

Man

Person

Female

Woman

| Feature | Confidence |
|---|---|
| Hat | 97.8% |
| Hat | 95.6% |
| Hat | 94.7% |
| Hat | 94.4% |
| Hat | 89.2% |
| Hat | 87.7% |
| Hat | 84.1% |
| Hat | 65.1% |
| Hat | 57.4% |
| Adult | 97.7% |
| Adult | 97.3% |
| Adult | 97.3% |
| Adult | 96.2% |
| Adult | 93.9% |
| Adult | 93.6% |
| Adult | 79.3% |
| Male | 97.7% |
| Male | 97.3% |
| Male | 97.3% |
| Male | 96.2% |
| Male | 93.6% |
| Male | 79.3% |
| Man | 97.7% |
| Man | 97.3% |
| Man | 97.3% |
| Man | 96.2% |
| Man | 93.6% |
| Man | 79.3% |
| Person | 97.7% |
| Person | 97.3% |
| Person | 97.3% |
| Person | 96.2% |
| Person | 95.5% |
| Person | 94.5% |
| Person | 93.9% |
| Person | 93.6% |
| Person | 91.2% |
| Person | 79.3% |
| Person | 72% |
| Person | 64.5% |
| Female | 93.9% |
| Woman | 93.9% |

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 47.1% |
| food drinks | 46.9% |
| paintings art | 4.7% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
|---|---|
| a group of people sitting at a table | 89.4% |
| a group of people standing around a table | 89.3% |
| a group of people sitting around a table | 89.2% |