Machine Generated Data


Face analysis


AWS Rekognition

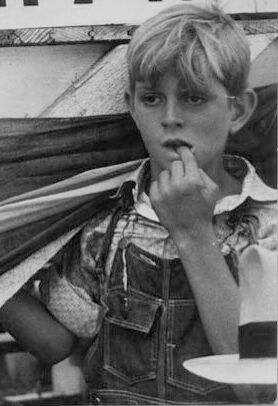

| Attribute | Value |
| --- | --- |
| Age | 16-22 |
| Gender | Male, 54.4% |
| Calm | 39.3% |
| Fear | 30.8% |
| Sad | 11.8% |
| Confused | 8.9% |
| Surprised | 7.4% |
| Angry | 4.3% |
| Disgusted | 2.3% |
| Happy | 1.3% |

Feature analysis


AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Boy | 99.3% |

Categories

Imagga

Created on 2023-10-06

| Category | Confidence |
| --- | --- |
| cars vehicles | 100% |

Captions

Microsoft

Created by unknown on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a vintage photo of a man | 89% |
| a man standing in front of a boat | 69.1% |
| a man sitting on a boat | 53.7% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-31

a photograph of a man and woman sitting at a table with a horse drawn carriage

Created by general-english-image-caption-blip-2 on 2025-07-06

a man and a boy sitting in front of a horse trailer

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-12

The image depicts a scene at what seems to be an outdoor event, with a promotional sign for horse racing that includes a graphic of a horse pulling a sulky and rider. The text on the sign reads "Day and Night SEPT. 7-10," indicating dates for an event. In the foreground, fabric or cloth is stretched, likely part of the setting, while a man in a hat is visible in the background. The overall environment suggests a casual, public gathering.

Created by gpt-4o-2024-08-06 on 2025-06-12

The image shows a section of a fair or festival setting, with a focus on a promotional poster featuring a harness race. The poster displays an illustration of a jockey riding a sulky behind a horse. Text on the poster reads "DAY AND NIGHT" and "SEPT. 7-10," indicating the dates of the event. A man in a hat and suspenders stands in the background, partially visible. In the foreground, there are fabric and some unidentifiable objects, contributing to a lively and busy atmosphere. The image appears to be in black and white, giving it a vintage feel.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-15

The image appears to be a black and white photograph depicting a scene from what seems to be an outdoor event or carnival. In the foreground, there are two people - a man with a serious expression on his face and a young child, possibly a boy, with a pensive look. In the background, there is a large sign advertising a "Day and Night" event scheduled for September 7-10. The sign features an illustration of a person riding a horse-drawn carriage or wagon. The overall atmosphere of the image suggests a nostalgic or historical setting.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-15

This is a black and white photograph that appears to be from a fair or carnival setting. At the top of the image, there's a promotional sign showing an illustration of a horse and sulky (racing cart) with a driver. The sign advertises an event running "DAY AND NIGHT" from "SEPT. 7-10." The image shows several people in what appears to be a casual outdoor setting. One person is wearing overalls, which was common attire for the time period. The photograph has a vintage quality and appears to be from several decades ago, possibly the 1930s or 1940s. The composition and style are characteristic of documentary photography from that era.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-12

The image is a black-and-white photograph of a man and a boy in front of a sign advertising a horse race.

- The sign is white with black text and features an illustration of a man riding a horse in a harness.

- The sign reads "DAY AND NIGHT SEPT. 7-10" in large letters, with the date and event name prominently displayed.

- The illustration of the man and horse is simple yet effective, conveying the excitement and energy of the event.

- In the foreground, a man with short dark hair and a white shirt stands in front of the sign, looking to his left.

- He appears to be in his mid-30s to early 40s, with a serious expression on his face.

- His attire suggests he may be a spectator or participant in the event.

- To the right of the man, a young boy with blond hair and a denim overalls stands, looking at the camera.

- He appears to be around 8-10 years old, with a curious expression on his face.

- His casual attire and relaxed posture suggest he may be a spectator or simply enjoying the atmosphere.

- In the background, a man in a hat and light-colored shirt stands to the left of the sign, facing away from the camera.

- He appears to be older than the man in the foreground, with a more weathered complexion.

- His attire suggests he may be a vendor or official at the event.

- The overall atmosphere of the image is one of excitement and anticipation, as people gather to watch the horse race.

- The sign and illustrations create a sense of energy and movement, drawing the viewer's attention to the event.

- The people in the foreground add a human element to the image, conveying a sense of community and shared experience.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-12

This black and white photograph features a sign advertising a horse racing event, with the text "DAY AND NIGHT SEPT. 7-10" prominently displayed. The sign is accompanied by an illustration of a horse and rider in a cart, set against a backdrop of a wooden structure.

In the foreground, several individuals are visible, including a man wearing a hat and a boy with his hand raised to his mouth. The overall atmosphere of the image suggests a casual, outdoor setting, possibly a fair or festival, where people have gathered to socialize and enjoy the event.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-05

A black-and-white photo shows three people standing in front of a poster. The boy on the right is wearing a denim shirt and a hat, and he is biting his finger. The man in the middle is wearing a white shirt, and he is looking to the left. The man on the left is wearing a hat and a shirt. The poster has a drawing of a horse and a man riding it, along with the text "DAY AND NIGHT SEPT. 7-10."

Created by amazon.nova-pro-v1:0 on 2025-06-05

The black-and-white image features a man and a boy standing in front of a structure with a poster of a man riding a horse. The boy is wearing a denim shirt and has his hand on his mouth. The man beside him is wearing a white polo shirt. Behind them, there is a person wearing a hat and a tie, and another person wearing a hat. They are probably at a horse racing event.

Google Gemini

Created by gemini-2.0-flash on 2025-06-01

Here is a description of the image:

This black and white photograph shows three individuals in the foreground. In the middle is a man who appears to be in his 30s with short, dark hair. He looks directly ahead with a neutral expression. To his right, is a fair-haired boy wearing overalls and glasses. He is looking off to the side with a thoughtful expression, his finger touching his mouth. To the left of the man is another person wearing a hat, but only part of their face is visible.

In the background, there is a sign advertising an event "Day and Night, Sept. 7-10". The sign features an illustration of a horse and buggy. A man in a hat is standing in the background wearing a shirt with a tie. A draped banner, possibly a flag, is partially covering the scene. The backdrop consists of greenery.

Created by gemini-2.0-flash-lite on 2025-06-01

Here's a description of the image:

The image is a black and white photograph, likely taken in the mid-20th century. It appears to be taken at a fair or similar event.

- Central Focus: The focal point is a sign advertising a horse racing event. The sign includes a black illustration of a horse pulling a sulky with a driver, the text "DAY AND NIGHT", and the dates "SEPT. 7-10".

- People: Several people are in the foreground and background. There is a young boy with blonde hair and glasses, wearing overalls, and appears to be sucking his fingers and looking off in contemplation. To his left, a man with dark, short hair is in the center, looking down. In the background, a man in a shirt, tie, and hat stands in the background.

- Atmosphere: The photograph has a nostalgic feel, reflecting the era in which it was taken. The combination of the sign and people suggests a community gathering or event.

- Composition: The composition is slightly off-center. The sign is dominant, while the people are arranged in the foreground, creating a sense of a real-life scene. A striped fabric hangs below the sign, adding an element of visual interest.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The black and white photograph depicts a scene from what appears to be a fair or a festival. The central focus of the image is a large sign advertising an event that features harness racing, as indicated by the illustration of a horse and sulky (a lightweight cart). The text on the sign reads "Day and Night Sept. 7-10," suggesting that the event runs for several days and includes both daytime and nighttime activities.

In the foreground, there are two individuals. One is a young boy with light hair, wearing overalls and a shirt. He is holding something in his mouth, possibly a piece of food. Next to him, partially obscured by a large canvas or tarpaulin, is a man with short hair, wearing a light-colored shirt. His expression appears serious or contemplative.

In the background, there is another person wearing a hat and light-colored clothing, standing near what seems to be a wooden structure or booth. The setting suggests a casual, outdoor environment with a community gathering vibe. The photograph captures a moment in time, providing a glimpse into the social and recreational activities of the era.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-07

The image is a black-and-white photograph that appears to be from a historical context, possibly mid-20th century, judging by the clothing and style. The upper portion of the image features a sign with a cartoon illustration of a horse and jockey in a racing harness. The text on the sign reads, "DAY AND NIGHT SEPT. 7-10," which suggests an event or show taking place over these dates.

In the foreground, there are several people. On the left, part of a man's face is visible, wearing a hat and looking to the side. To the right, a young boy is partially visible, holding something near his mouth, possibly food. The boy is wearing a sleeveless shirt and has light-colored hair. The background includes a wooden structure and some fabric, giving the impression of an outdoor or fairground setting. The overall atmosphere of the image suggests a community gathering or fair.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-07

This black-and-white photograph appears to capture a lively scene, likely at a fair or festival. The image is dated between September 7 and 10, as indicated by the sign in the background, which promotes horse racing events, including day and night races. The sign features an illustration of a jockey and a racehorse pulling a sulky.

In the foreground, there are several people, including a man and a boy. The man is seated and looking directly at the camera with a neutral expression, while the boy, who is also looking at the camera, has his hand near his mouth in a thoughtful or contemplative pose. The boy is dressed in a patterned shirt and a vest.

The background includes a white tent with a striped awning, and there are other people partially visible, suggesting a bustling atmosphere. The overall composition of the photograph suggests it was taken in a casual, candid manner, capturing a moment in time at this event.