Machine Generated Data

Tags

Amazon

created on 2023-10-05

| Tag | Confidence (%) |
|---|---|
| Clothing | 100 |
| Shirt | 99.9 |
| Sun Hat | 99.8 |
| Adult | 99.6 |
| Male | 99.6 |
| Man | 99.6 |
| Person | 99.6 |
| Adult | 99.6 |
| Male | 99.6 |
| Man | 99.6 |
| Person | 99.6 |
| Adult | 99.6 |
| Male | 99.6 |
| Man | 99.6 |
| Person | 99.6 |
| Adult | 99.5 |
| Male | 99.5 |
| Man | 99.5 |
| Person | 99.5 |
| Pants | 97.1 |
| Formal Wear | 96.1 |
| Brick | 94.8 |
| Accessories | 94.7 |
| Jeans | 93.8 |
| Photography | 90.4 |
| Tie | 89.2 |
| City | 86.4 |
| Face | 81.6 |
| Head | 81.6 |
| Road | 78.8 |
| Street | 78.8 |
| Urban | 78.8 |
| Jeans | 75.3 |
| Hat | 75.2 |
| Tie | 74.2 |
| Coat | 72.5 |
| Hat | 72.2 |
| Jeans | 70.4 |
| Portrait | 69.7 |
| Jeans | 67.9 |
| Suit | 61 |
| Cap | 57.8 |
| Vest | 56.9 |
| Suspenders | 56.6 |
| Dress Shirt | 56.3 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-05

| Tag | Confidence (%) |
|---|---|
| book jacket | 28.3 |
| man | 26.9 |
| jacket | 24.1 |
| business | 20.6 |
| male | 19.9 |
| person | 18.9 |
| businessman | 17.6 |
| sign | 17.3 |
| wrapping | 16.7 |
| people | 15.6 |
| black | 15 |
| old | 14.6 |
| shop | 12.3 |
| wall | 12.1 |
| covering | 12 |
| newspaper | 11.9 |
| work | 11.8 |
| world | 11.3 |
| adult | 10.5 |
| office | 9.8 |
| job | 9.7 |
| success | 9.7 |
| art | 9.4 |
| financial | 8.9 |
| professional | 8.8 |
| graphic | 8.7 |
| symbol | 8.7 |
| education | 8.7 |
| architecture | 8.6 |
| construction | 8.6 |
| finance | 8.4 |
| design | 8.4 |
| manager | 8.4 |
| hand | 8.4 |
| daily | 8.2 |
| dirty | 8.1 |
| building | 8.1 |
| group | 8.1 |
| blackboard | 8.1 |
| idea | 8 |
| looking | 8 |
| information | 8 |
| men | 7.7 |
| mercantile establishment | 7.6 |
| student | 7.6 |
| silhouette | 7.4 |
| vintage | 7.4 |
| message | 7.3 |
| aged | 7.2 |
| religion | 7.2 |
| worker | 7.2 |
| portrait | 7.1 |
| product | 7.1 |
| paper | 7.1 |

Google

created on 2018-05-11

| Tag | Confidence (%) |
|---|---|
| photograph | 95.8 |
| black and white | 88.3 |
| snapshot | 81.8 |
| vintage clothing | 75 |
| monochrome photography | 73.8 |
| monochrome | 65.5 |
| history | 62.7 |
| gentleman | 61.5 |
| human behavior | 61.4 |
| stock photography | 50.1 |

Color Analysis

Face analysis

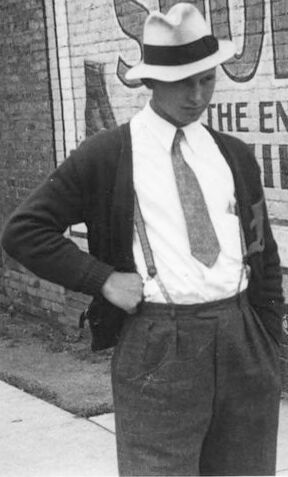

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 49-57 |
| Gender | Male, 100% |
| Sad | 100% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Calm | 0.3% |
| Confused | 0.2% |
| Angry | 0.1% |
| Disgusted | 0% |
| Happy | 0% |

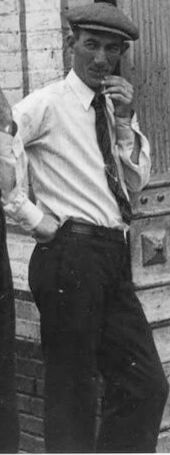

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 21-29 |
| Gender | Female, 99.9% |
| Calm | 99.9% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 0% |
| Angry | 0% |
| Disgusted | 0% |
| Happy | 0% |

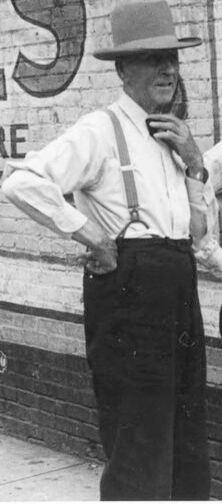

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 56-64 |
| Gender | Male, 99.3% |
| Happy | 93.7% |
| Surprised | 6.5% |
| Fear | 5.9% |
| Sad | 2.8% |
| Calm | 2.7% |
| Confused | 0.4% |
| Angry | 0.2% |
| Disgusted | 0.2% |

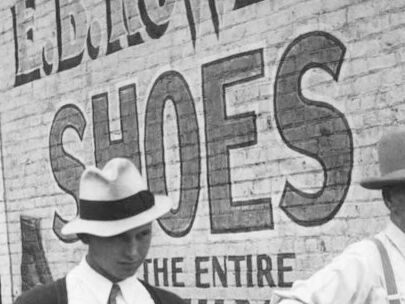

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 45-51 |
| Gender | Male, 100% |
| Calm | 97.2% |
| Surprised | 6.4% |
| Fear | 6.4% |
| Sad | 2.2% |
| Disgusted | 0.3% |
| Happy | 0.3% |
| Confused | 0.2% |
| Angry | 0.2% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 56 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 34 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Feature analysis

Amazon

Adult

Male

Man

Person

Jeans

Tie

Hat

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 96.1% |
| people portraits | 1.9% |
| streetview architecture | 1.4% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
|---|---|
| a group of people standing in front of a building | 93.2% |
| a group of people posing for a photo | 92.9% |
| a group of people posing for the camera | 92.8% |

Text analysis

Amazon

ENTIRE

ILY

THE ENTIRE

THE

éCHAFIN

ES

ELECTO

THE ENTIRE

THE

ENTIRE