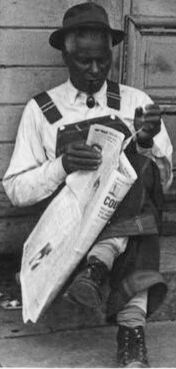

Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| Reading | 100 |
| Photography | 100 |
| Sitting | 99.5 |
| Person | 99.2 |
| Adult | 99.2 |
| Male | 99.2 |
| Man | 99.2 |
| Person | 99.1 |
| Male | 99.1 |
| Boy | 99.1 |
| Child | 99.1 |
| Clothing | 93 |
| Footwear | 93 |
| Shoe | 93 |
| Shoe | 90.4 |
| Shoe | 89.6 |
| Coat | 85.1 |
| Photographer | 83 |
| Face | 81.1 |
| Head | 81.1 |
| Portrait | 81.1 |
| City | 61.5 |
| Shoe | 59.3 |
| Hat | 59 |
| Bus Stop | 57.1 |
| Outdoors | 57.1 |
| Electronics | 57 |
| Phone | 57 |
| Bench | 56.7 |
| Furniture | 56.7 |
| Road | 56.2 |
| Street | 56.2 |
| Urban | 56.2 |
| Hairdresser | 56 |
| Formal Wear | 55.8 |
| Suit | 55.8 |
| Transportation | 55.2 |
| Vehicle | 55.2 |
| Pants | 55.1 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| wall | 19.7 |
| child | 18.9 |
| man | 18.8 |
| building | 18.7 |
| city | 18.3 |
| old | 18.1 |
| people | 17.3 |
| adult | 16.2 |
| person | 16.1 |
| street | 15.6 |
| world | 14.1 |
| door | 14 |
| sill | 12.9 |
| male | 12.3 |
| tricycle | 11.8 |
| architecture | 11.7 |
| portrait | 11.6 |
| support | 11.4 |
| urban | 11.4 |
| lifestyle | 10.8 |
| wheeled vehicle | 10.6 |
| black | 10.2 |
| structural member | 10 |
| outdoor | 9.9 |
| boy | 9.6 |
| school | 9.5 |
| brick | 9.5 |
| youth | 9.4 |
| human | 9 |
| couple | 8.7 |
| prison | 8.6 |
| travel | 8.4 |
| house | 8.4 |
| vehicle | 8.2 |
| outdoors | 8.2 |
| teenager | 8.2 |
| stairs | 7.9 |
| parent | 7.9 |
| structure | 7.9 |
| ancient | 7.8 |
| device | 7.7 |
| statue | 7.6 |
| stone | 7.6 |
| mother | 7.6 |
| one | 7.5 |
| teen | 7.3 |
| shop | 7.3 |
| hair | 7.1 |
| face | 7.1 |
| love | 7.1 |
| newspaper | 7.1 |
| day | 7.1 |

Google

created on 2018-05-11

| Tag | Confidence |
| --- | --- |
| photograph | 95.9 |
| black and white | 90.8 |
| sitting | 87.8 |
| snapshot | 81.8 |
| standing | 81.7 |
| monochrome photography | 80.2 |
| photography | 79.3 |
| human behavior | 69.5 |
| monochrome | 69 |
| vintage clothing | 66.5 |
| stock photography | 50.1 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 49-57 |
| Gender | Male, 100% |
| Sad | 100% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Confused | 1.3% |
| Calm | 0.9% |
| Disgusted | 0.1% |
| Angry | 0.1% |
| Happy | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 48-56 |
| Gender | Male, 100% |
| Sad | 100% |
| Surprised | 6.3% |
| Fear | 6% |
| Confused | 1.4% |
| Calm | 0.4% |
| Disgusted | 0.3% |
| Angry | 0.2% |
| Happy | 0.1% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 63 |
| Gender | Male |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 94% |
| pets animals | 4.3% |
| paintings art | 1.1% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a person sitting on a bench in front of a building | 85% |
| a person sitting on a bench in front of a door | 84.9% |
| a person sitting on a bench | 84.8% |

Text analysis

Amazon

COIL