Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| Clothing | 100 |
| Adult | 99.7 |
| Male | 99.7 |
| Man | 99.7 |
| Person | 99.7 |
| Adult | 99.6 |
| Male | 99.6 |
| Man | 99.6 |
| Person | 99.6 |
| Face | 99.4 |
| Head | 99.4 |
| Photography | 99.4 |
| Portrait | 99.4 |
| Adult | 98.3 |
| Male | 98.3 |
| Man | 98.3 |
| Person | 98.3 |
| Car | 95 |
| Transportation | 95 |
| Vehicle | 95 |
| Hat | 93.5 |
| Machine | 92.4 |
| Wheel | 92.4 |
| Wheel | 85.9 |
| Sun Hat | 85.8 |
| Antique Car | 80.5 |
| Model T | 80.5 |
| Tire | 75.1 |
| Wheel | 64.8 |
| Cap | 61.5 |
| Spoke | 57.4 |
| Baseball Cap | 56.9 |
| Alloy Wheel | 56.8 |
| Car Wheel | 56.8 |
| Outdoors | 56.8 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| Tag | Confidence |
| --- | --- |
| man | 26.2 |
| people | 25.1 |
| male | 22.8 |
| person | 22.5 |
| adult | 21.5 |
| happy | 20 |
| couple | 16.5 |
| sitting | 16.3 |
| smiling | 15.9 |
| hat | 15 |
| portrait | 14.9 |
| smile | 14.2 |
| love | 14.2 |
| happiness | 14.1 |
| clothing | 12.6 |
| old | 12.5 |
| men | 12 |
| outdoors | 11.9 |
| two | 11.9 |
| child | 11.1 |
| grandfather | 10.8 |
| outside | 10.3 |
| emotion | 10.1 |
| city | 10 |
| fashion | 9.8 |
| world | 9.7 |
| statue | 9.6 |
| looking | 9.6 |
| building | 9.5 |
| kin | 9.2 |
| leisure | 9.1 |
| human | 9 |
| fun | 9 |
| religion | 9 |
| one | 9 |
| lady | 8.9 |
| mother | 8.9 |
| father | 8.8 |
| architecture | 8.6 |
| face | 8.5 |
| casual | 8.5 |
| outdoor | 8.4 |
| cart | 8.4 |
| joy | 8.3 |
| girls | 8.2 |
| home | 8 |
| parent | 8 |
| guy | 7.9 |
| work | 7.8 |
| youth | 7.7 |
| sculpture | 7.6 |
| reading | 7.6 |
| hand | 7.6 |
| wife | 7.6 |
| pair | 7.6 |
| religious | 7.5 |
| dad | 7.3 |
| lifestyle | 7.2 |
| romance | 7.1 |
| travel | 7 |

Google

created on 2018-05-11

| Tag | Confidence |
| --- | --- |
| photograph | 95.3 |
| motor vehicle | 95 |
| black and white | 86.5 |
| snapshot | 81.8 |
| human behavior | 80.3 |
| vintage clothing | 71.5 |
| monochrome photography | 69.4 |
| vehicle | 65.1 |
| headgear | 64.1 |
| car | 60.8 |
| monochrome | 55.7 |
| stock photography | 52.4 |

Color Analysis

Face analysis

AWS Rekognition

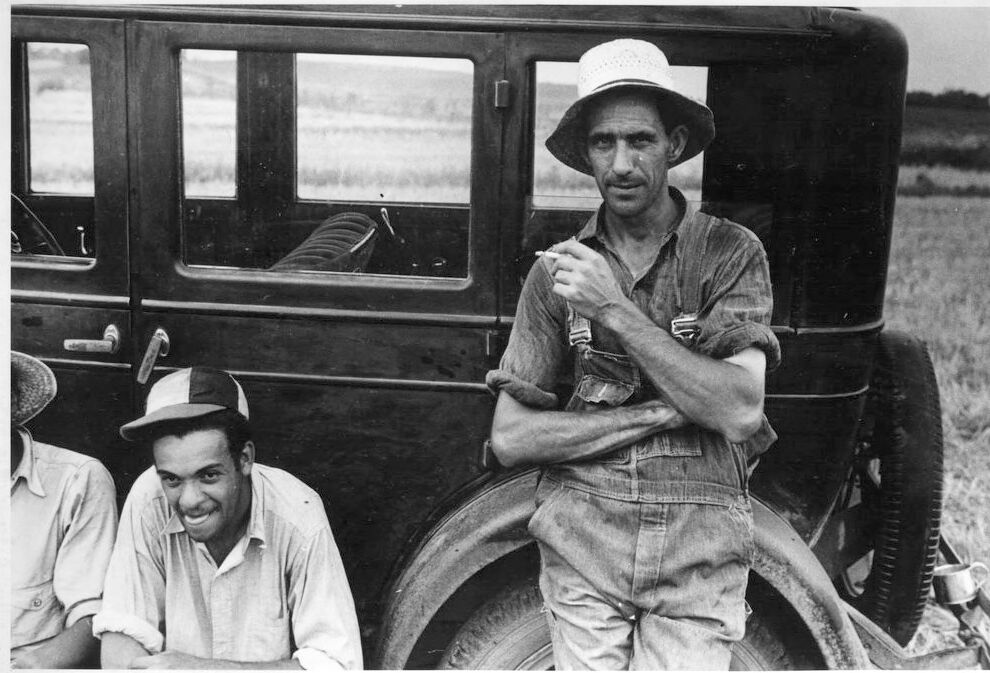

| Attribute | Value |
| --- | --- |
| Age | 22-30 |
| Gender | Male, 99.9% |
| Happy | 94.7% |
| Surprised | 6.4% |
| Fear | 6.1% |
| Angry | 3.5% |
| Sad | 2.2% |
| Confused | 0.4% |
| Calm | 0.2% |
| Disgusted | 0.2% |

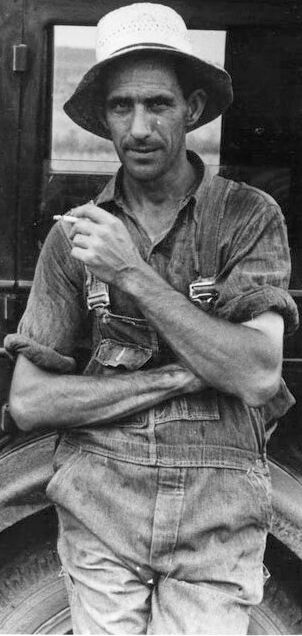

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 36-44 |
| Gender | Male, 100% |
| Calm | 95.3% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.3% |
| Angry | 2% |
| Confused | 1% |
| Happy | 0.7% |
| Disgusted | 0.3% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 48 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 34 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 96.8% |
| people portraits | 2.2% |
| pets animals | 1% |

Captions

Microsoft

created on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a man sitting on a bus | 75.9% |
| a vintage photo of a man | 75.8% |
| a man sitting in a car | 75.7% |