Machine Generated Data

Tags

Amazon

created on 2023-10-05

| Clothing | 100 |
| Sun Hat | 100 |
| Adult | 99.6 |
| Male | 99.6 |
| Man | 99.6 |
| Person | 99.6 |
| Adult | 99.5 |
| Male | 99.5 |
| Man | 99.5 |
| Person | 99.5 |
| Adult | 99.5 |
| Male | 99.5 |
| Man | 99.5 |
| Person | 99.5 |
| Adult | 99.4 |
| Male | 99.4 |
| Man | 99.4 |
| Person | 99.4 |
| Adult | 98 |
| Male | 98 |
| Man | 98 |
| Person | 98 |
| Face | 80 |
| Head | 80 |
| Barbershop | 77.1 |
| Indoors | 77.1 |
| Jeans | 70.6 |
| Pants | 70.6 |
| Person | 62.8 |
| Hat | 60.8 |
| Shop | 58 |
| Coat | 57.4 |
| Hat | 57.1 |
| Cap | 57 |
| Shirt | 56.9 |
| Cowboy Hat | 56.4 |
| Sitting | 55.7 |
| Hat | 55.3 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-05

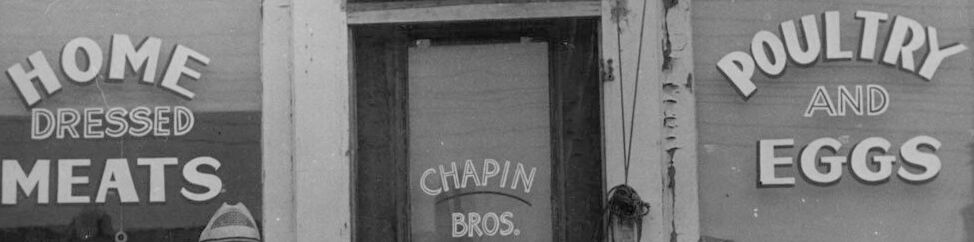

| barbershop | 100 |
| shop | 100 |
| mercantile establishment | 85.5 |
| place of business | 57 |
| bakery | 29.2 |
| establishment | 28.6 |
| building | 25.9 |
| door | 25.7 |
| wall | 25.6 |
| old | 25.1 |
| architecture | 21.1 |
| window | 17.4 |
| house | 15.9 |
| city | 13.3 |
| vintage | 13.2 |
| ancient | 13 |
| cash machine | 12 |
| brick | 11.3 |
| structure | 11.1 |
| machine | 11.1 |
| device | 11 |
| street | 11 |
| travel | 10.6 |
| wooden | 10.5 |
| sign | 10.5 |
| urban | 10.5 |
| aged | 10 |
| guillotine | 9.7 |
| entrance | 9.7 |
| antique | 9.5 |
| construction | 9.4 |
| people | 8.9 |
| man | 8.7 |
| glass | 8.6 |
| grunge | 8.5 |
| outdoor | 8.4 |
| retro | 8.2 |
| dirty | 8.1 |
| instrument of execution | 8 |
| home | 8 |
| decoration | 8 |
| doorway | 7.9 |
| empty | 7.7 |
| closed | 7.7 |
| facade | 7.7 |
| england | 7.6 |
| wood | 7.5 |
| town | 7.4 |
| exterior | 7.4 |
| industrial | 7.3 |
| sky | 7 |

Google

created on 2018-05-11

| black and white | 87.9 |
| infrastructure | 86.4 |
| street | 78.3 |
| standing | 74.7 |
| monochrome photography | 72.3 |
| monochrome | 67.8 |
| road | 58.5 |
| vehicle | 55.9 |
| human behavior | 54.5 |
| advertising | 52.1 |
| vintage clothing | 51.3 |
| history | 50.5 |

Color Analysis

Face analysis

AWS Rekognition

| Age | 43-51 |
| Gender | Male, 99.9% |
| Angry | 45.2% |
| Calm | 40.9% |
| Surprised | 7.8% |
| Fear | 6% |
| Confused | 5.5% |
| Sad | 2.9% |
| Disgusted | 2.4% |
| Happy | 0.6% |

AWS Rekognition

| Age | 49-57 |
| Gender | Female, 89.6% |
| Happy | 96.2% |
| Surprised | 6.7% |
| Fear | 6% |
| Sad | 2.2% |
| Calm | 1.3% |
| Angry | 0.4% |
| Disgusted | 0.3% |
| Confused | 0.3% |

AWS Rekognition

| Age | 43-51 |
| Gender | Male, 98.8% |
| Sad | 80.4% |
| Calm | 29.2% |
| Happy | 12% |
| Surprised | 10% |
| Fear | 6.8% |
| Disgusted | 5.7% |
| Angry | 4.3% |
| Confused | 3.8% |

Microsoft Cognitive Services

| Age | 36 |
| Gender | Male |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Feature analysis

Amazon

Adult

Male

Man

Person

Jeans

Hat

Categories

Imagga

| streetview architecture | 98.8% |

Captions

Microsoft

created on 2018-05-11

| a group of people standing in front of a store | 93.3% |
| a person standing in front of a store | 93% |
| a group of people that are standing in front of a store | 87.9% |

Text analysis

Amazon

HOME

MEATS

EGGS

BROS.

CHAPIN

POULTRY

AND

DRESSED

OULTRY

OME

DRESSED

MEATS

AND

EGGS

CHAPIM

BROS

OULTRY

OME

DRESSED

MEATS

AND

EGGS

CHAPIM

BROS