Machine Generated Data

Tags

Amazon

created on 2023-10-06

| Adult | 99.2 |
| Male | 99.2 |
| Man | 99.2 |
| Person | 99.2 |
| Person | 96.9 |
| Person | 95.2 |
| Sitting | 94.3 |
| Clothing | 92.6 |
| Footwear | 92.6 |
| Person | 92.1 |
| Shop | 91.5 |
| Person | 91 |
| Male | 79.9 |
| Person | 79.9 |
| Boy | 79.9 |
| Child | 79.9 |
| Shoe | 79.7 |
| Shoe | 77.7 |
| Face | 69.9 |
| Head | 69.9 |
| Coat | 69.6 |
| Shoe | 61.2 |
| Shoe Shop | 57.5 |
| Accessories | 57.5 |
| Glasses | 57.5 |
| Indoors | 57.4 |
| Formal Wear | 57.2 |
| Suit | 57.2 |
| Furniture | 55.6 |

Clarifai

created on 2018-05-11

Imagga

created on 2023-10-06

| stall | 57.1 |
| computer | 28.1 |
| technology | 22.3 |
| working | 22.1 |
| work | 22 |
| business | 21.9 |
| worker | 18.7 |
| man | 16.8 |
| shop | 15.2 |
| person | 15.2 |
| musical instrument | 14.7 |
| people | 14.5 |
| adult | 14.2 |
| equipment | 14.1 |
| machine | 13.9 |
| laptop | 13.8 |
| office | 13.7 |
| support | 13 |
| industry | 12.8 |
| male | 12.8 |
| accordion | 12.4 |
| indoors | 11.4 |
| happy | 11.3 |
| modern | 11.2 |
| sitting | 11.2 |
| professional | 11 |
| smile | 10.7 |
| interior | 10.6 |
| busy | 10.6 |
| warehouse | 10.5 |
| one | 10.4 |
| smiling | 10.1 |
| truck | 10.1 |
| businesswoman | 10 |
| transportation | 9.9 |
| factory | 9.6 |
| keyboard instrument | 9.6 |
| storage | 9.5 |
| vehicle | 9.4 |
| indoor | 9.1 |
| attractive | 9.1 |
| black | 9 |
| wind instrument | 8.9 |
| center | 8.9 |
| monitor | 8.8 |
| home | 8.8 |
| forklift | 8.7 |
| hardware | 8.6 |
| portrait | 8.4 |
| floor | 8.4 |
| device | 8.3 |
| stack | 8.3 |
| book | 8 |
| desk | 7.9 |
| engineer | 7.9 |
| telephone | 7.8 |
| men | 7.7 |
| product | 7.7 |
| repair | 7.7 |
| old | 7.7 |
| case | 7.6 |
| store | 7.6 |
| keyboard | 7.5 |
| shirt | 7.5 |
| service | 7.4 |
| phone | 7.4 |
| room | 7.4 |
| inside | 7.4 |
| occupation | 7.3 |
| industrial | 7.3 |
| lifestyle | 7.2 |
| information | 7.1 |
| job | 7.1 |
| television | 7.1 |

Google

created on 2018-05-11

| photograph | 95.6 |
| black and white | 89.8 |
| snapshot | 81.8 |
| photography | 79.6 |
| monochrome photography | 72.7 |
| monochrome | 63 |
| vintage clothing | 56.1 |
| angle | 54.9 |
| stock photography | 50.2 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

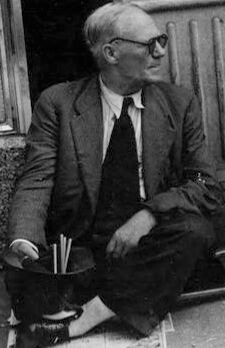

| Age | 58-66 |
| Gender | Male, 100% |
| Calm | 67.9% |
| Sad | 24.6% |
| Surprised | 8.2% |
| Fear | 6.3% |
| Confused | 3.7% |
| Disgusted | 1.8% |
| Angry | 1.8% |
| Happy | 1.3% |

AWS Rekognition

| Age | 6-12 |
| Gender | Female, 86.9% |
| Calm | 93.9% |
| Fear | 7.5% |
| Surprised | 6.3% |
| Sad | 2.5% |
| Disgusted | 0.6% |
| Happy | 0.3% |
| Angry | 0.2% |
| Confused | 0.1% |

AWS Rekognition

| Age | 28-38 |
| Gender | Male, 99.9% |
| Calm | 41.3% |
| Fear | 33.9% |
| Surprised | 14.4% |
| Disgusted | 7.4% |
| Angry | 3.9% |
| Sad | 3% |
| Confused | 2% |
| Happy | 0.9% |

AWS Rekognition

| Age | 38-46 |
| Gender | Female, 99.1% |
| Happy | 95.8% |
| Surprised | 6.5% |
| Fear | 6.5% |
| Sad | 2.2% |
| Calm | 0.7% |
| Confused | 0.6% |
| Disgusted | 0.4% |
| Angry | 0.2% |

AWS Rekognition

| Age | 21-29 |
| Gender | Male, 73.7% |
| Sad | 99.6% |
| Calm | 30.8% |
| Surprised | 6.6% |
| Fear | 6% |
| Happy | 1.5% |
| Disgusted | 0.8% |
| Angry | 0.7% |
| Confused | 0.5% |

Feature analysis

Categories

Imagga

| paintings art | 97.7% |
| streetview architecture | 2.1% |

Captions

Microsoft

created on 2018-05-11

| a person sitting in a box | 29.4% |

Text analysis

Amazon

BATHING

GOODS

25

SANDALS

10

BELTS

(APS

A

A PHOTOS10

BELTS R

D

PHOTOS10

RA

R

GOOD

APS

10

1O

25

GOOD

APS

10

1O

25