Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 42-60 |
| Gender | Male, 54.9% |
| Sad | 45.2% |
| Angry | 45.1% |
| Calm | 45% |
| Confused | 45.1% |
| Happy | 45% |
| Surprised | 47.8% |
| Disgusted | 45% |
| Fear | 51.8% |

Feature analysis

Amazon

| Person | 99.6% |

Categories

Imagga

| pets animals | 99.9% |

Captions

Microsoft

Created on 2020-04-25

| Caption | Confidence |
| --- | --- |
| a group of people looking at a book | 45.1% |
| a group of people standing next to a book | 45% |
| a group of people standing on top of a book | 44.5% |

OpenAI GPT

Created by gpt-4 on 2024-12-12

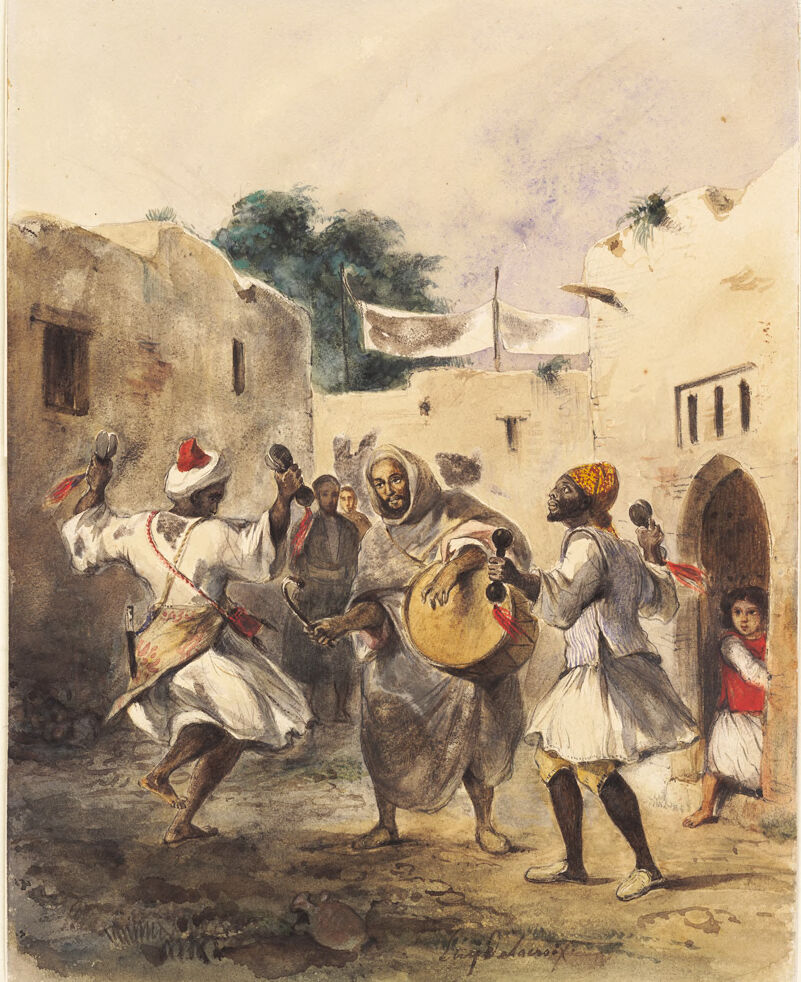

The image depicts a scene of traditional dance or celebration set in an outdoor environment, likely within a courtyard or street of a village or town. Several individuals are dressed in cultural attire that includes white garments with red accents. One of the individuals is playing a large drum, while others are engaged in dance, with dynamic poses that suggest movement and rhythm. Architectural elements such as buildings with arched doorways, along with clothes hanging out to dry, contribute to a sense of everyday life intertwined with a festive atmosphere. The style of the painting suggests it could be from a historical period, capturing the cultural heritage and customs of a community. The earthy tones and the handling of light give it a warm and authentic feel.

Created by gpt-4o-2024-05-13 on 2024-12-12

The image depicts a lively scene with several individuals dressed in traditional attire, engaging in what appears to be a festive or ceremonial dance. The people are positioned in an open area between rustic buildings, possibly in a village or small town setting. Some of them are playing musical instruments, such as drums and tambourines, while others are dancing energetically. A few onlookers are watching the performance, adding to the communal atmosphere. The buildings and the overall ambiance suggest it is a historical or cultural representation, perhaps from a specific region or era. The background features a few trees and what appears to be banners or cloths strung up between the buildings.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-12

The image depicts a lively scene in what appears to be a Middle Eastern or North African village or town. In the foreground, there is a group of people engaged in some kind of celebration or festival, with several individuals playing drums and other musical instruments. The people in the image are dressed in colorful traditional attire, and the overall atmosphere conveys a sense of vibrant cultural activity. The buildings and structures in the background suggest a rural or semi-urban setting, with a mix of architectural styles and elements. The overall composition and style of the painting suggest it is likely an artistic representation of a cultural scene from this region.

Created by claude-3-5-sonnet-20241022 on 2024-12-12

This is a watercolor painting depicting a street scene, likely from North Africa or the Middle East. The scene shows several people in traditional dress engaged in what appears to be a musical or celebratory gathering. The figures are wearing flowing robes, turbans, and other traditional garments. One person is carrying a large drum or similar percussion instrument, while others appear to be dancing or moving to music. The setting features typical Middle Eastern architecture with adobe-style buildings, archways, and plain walls in earth tones. The overall composition has a dynamic, lively feel with the movement of the figures contrasting against the static architectural elements. The artist has used a muted color palette dominated by browns, whites, and grays, with occasional pops of red in the clothing details.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-12

The image depicts a lively scene of a group of men dancing and playing music in a courtyard, likely in North Africa. The men are dressed in traditional attire, with some wearing white robes and turbans, while others wear shorter white tunics. They are barefoot and appear to be in the midst of a joyful celebration. In the foreground, a man is prominently featured, holding a large drum and wearing a yellow turban. He is surrounded by other men, some of whom are playing small drums or other instruments. The atmosphere is one of exuberance and communal celebration, with the men moving in time to the music and seemingly lost in the moment. The background of the image features a series of buildings, including a large stone structure with a pointed archway. The sky above is a warm, sunny yellow, adding to the overall sense of warmth and joy emanating from the scene. Overall, the image captures a vibrant and festive moment in time, showcasing the rich cultural heritage of North Africa.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-12

The image depicts a watercolor painting of a group of men dancing in the street, with one man playing a drum. The scene is set against a backdrop of buildings and trees.

**Key Elements:**

* A group of men are dancing in the street.
* One man is playing a drum.
* The scene is set against a backdrop of buildings and trees.
* The men are dressed in traditional clothing, with some wearing turbans or headscarves.
* The atmosphere appears to be lively and festive, with the men dancing and playing music together.

**Overall Impression:**

The image suggests a sense of community and celebration, with the men coming together to enjoy music and dance. The traditional clothing and setting add to the cultural significance of the scene, suggesting that it may be a depiction of a specific cultural or religious event.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-10

The image depicts a lively scene of a group of men performing a traditional dance in a narrow street. The setting appears to be in a Middle Eastern or North African town, as indicated by the architecture and the attire of the dancers. The men are dressed in white robes and turbans, with some of them carrying musical instruments such as drums and rattles. They are dancing in a circle, with one man in the center playing a drum while the others around him shake their rattles and move their bodies rhythmically. The dancers' faces are expressive, with some of them smiling and others looking focused on their performance. The background shows a few buildings with arched doorways and a tree, suggesting a bustling urban environment. The overall mood of the image is joyful and celebratory, capturing the spirit of traditional dance and music in a Middle Eastern or North African culture.

Created by amazon.nova-pro-v1:0 on 2025-01-10

The image is a painting of a group of people in a street. The people are dressed in traditional clothing and appear to be dancing. The painting depicts a street scene with a group of people dancing and playing music. The people are dressed in traditional clothing, with some wearing hats and others wearing turbans. The man in the center of the group is playing a drum, while the others are dancing and playing various instruments. The street is lined with buildings on either side, and there are trees and other vegetation in the background.