Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-51 |
| Gender | Male, 50.4% |
| Sad | 50.1% |
| Calm | 49.5% |
| Angry | 49.6% |
| Surprised | 49.5% |
| Disgusted | 49.5% |
| Happy | 49.5% |
| Confused | 49.5% |
| Fear | 49.8% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 94.8% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| nature landscape | 26.6% |
| interior objects | 22.1% |
| paintings art | 20.6% |
| text visuals | 16.5% |
| streetview architecture | 11% |
| pets animals | 1.4% |

Captions

Microsoft

Created on 2020-05-01

| Caption | Confidence |
| --- | --- |
| an old photo of a person | 79.5% |
| old photo of a person | 77.4% |
| a black and white photo of a person | 68.3% |

OpenAI GPT

Created by gpt-4 on 2025-02-18

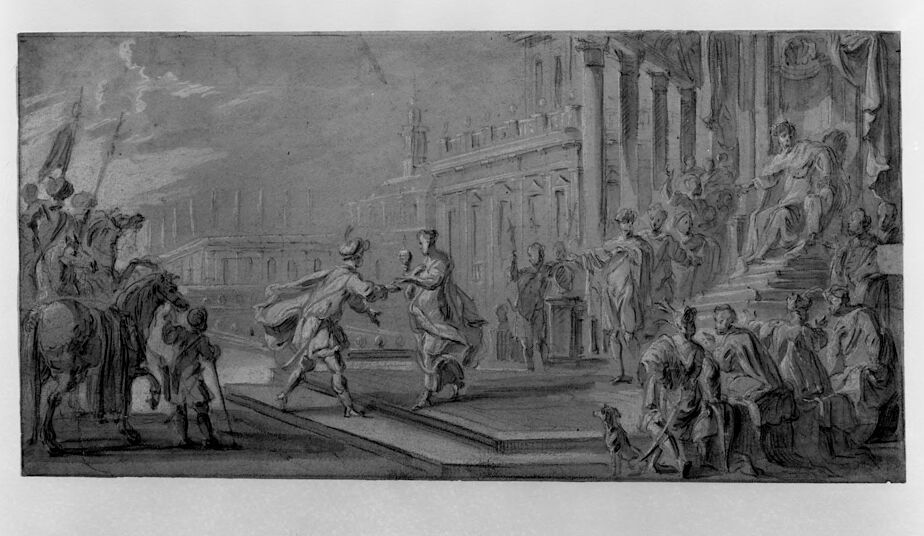

This image appears to be a grayscale reproduction of a classical style drawing or print. There is a dynamic scene depicted which includes several human figures engaging in various activities. In the foreground to the left, a group on horseback is shown, possibly arriving or preparing to depart. The horses are rearing and commanded by riders, indicating movement and possible excitement. In the center and to the right, there are more figures displaying an array of actions and poses. One figure is running towards a set of stairs, where another individual is standing and seems to be either welcoming or directing them. Above the stairs, a seated figure dominates, possibly a person of authority or significance, given their elevated and central position in the composition. This seated figure is surrounded by others who are likely attendants or dignitaries. The background features architectural elements such as columns and a classical facade, suggesting the setting is an important public or ceremonial space, which might be part of a palace or governmental building. The attire of the figures and the architectural style hint at a historical or mythological context. The overall style is reminiscent of historical artwork, possibly from the Renaissance or the Neoclassical period, given the focus on balance, drama, and classical themes. The image does not contain sufficient detail to definitively identify the specific story or event portrayed.

Created by gpt-4o-2024-05-13 on 2025-02-18

The image depicts a detailed drawing or painting featuring a historic or mythological scene set in an architectural backdrop that resembles classical Roman or Greek architecture. On the right, a group of figures is gathered on a grand staircase and around a seated figure who appears to be in a position of authority, possibly a judge or a ruler. The seating area is elevated and columns line the structure, adding to the grandeur of the scene. In the center, two key figures are engaging with each other: one is extending a hand or offering an object to the other, indicating a meeting, a greeting, or a significant exchange. To the left, a group of people, some of whom are mounted on horses, seem to be approaching or witnessing the scene in the middle. The perspective and the alignment of the figures suggest a narrative moment of interaction that is significant within a historical, cultural, or mythological context. In the background, faint outlines of further architectural elements like buildings and perhaps more spectators or participants can be seen, adding depth to the composition. The mood conveyed through the art is one of solemnity, importance, and formality, likely portraying an event of significant historical or mythological importance.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-02-18

The image appears to be a black and white drawing or etching depicting an elaborate scene from what seems to be an ancient or historical setting. The central focus is a large architectural structure, possibly a palace or temple, with columns and ornate decorations. In the foreground, there is a procession or gathering of figures, some on horseback and others on foot, engaged in what looks like a ceremonial or celebratory event. The overall composition is intricate and dynamic, with a sense of movement and activity throughout the scene.

Created by claude-3-opus-20240229 on 2025-02-18

The image depicts a detailed sketched scene from what appears to be the 18th century, based on the architectural style and clothing of the figures. The scene shows a grand, multi-story building with classical columns and statuary. In the foreground, there are numerous figures engaged in various activities - some are on horseback, others are walking or standing in groups. The overall impression is of a bustling city square or courtyard in front of an impressive government or institutional building from a past era. The sketch has an antique, historical feel and provides an interesting glimpse into urban life and architecture of that time period.

Created by claude-3-5-sonnet-20241022 on 2025-02-18

This appears to be a black and white historical artwork, likely a sketch or drawing, depicting a grand architectural setting with classical buildings and columns. The scene shows several figures engaged in what seems to be a dramatic moment - there are people on horseback on the left side, and in the center, two figures appear to be in motion, perhaps dancing or engaging in some kind of ceremonial interaction. On the right side, there are steps leading up to a columned entrance where more figures are gathered as spectators. The architectural style suggests a European setting, possibly depicting a scene from a historical event or story. The drawing has a theatrical quality to it, with the composition arranged to draw attention to the central action while the surrounding architecture and onlookers frame the scene.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-13

The image depicts a black-and-white drawing of a scene from ancient Rome, likely inspired by classical art. The drawing is set in a courtyard or plaza, with several figures engaged in various activities. In the foreground, a group of people are gathered around a central figure, who appears to be addressing them. Some individuals are seated on steps or benches, while others stand or walk around the area. A few people are dressed in formal attire, suggesting they may be of higher social status. On the left side of the image, a horse-drawn carriage is visible, accompanied by several men in military uniforms. This suggests that the scene may be related to a military or ceremonial event. The background of the image features several buildings, including what appears to be a large temple or palace. The architecture is reminiscent of ancient Roman structures, with columns, arches, and ornate details. Overall, the image presents a vivid depiction of life in ancient Rome, capturing the grandeur and majesty of the time period. The level of detail and realism in the drawing suggests that it may have been created by a skilled artist or illustrator.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-13

This image is a black-and-white drawing of a scene from ancient Rome, depicting a dramatic moment with multiple figures in the foreground and background. The central focus is on two men in the middle of the image, one of whom is being led away by another figure. The scene is set against the backdrop of a grand building with columns, suggesting a public or ceremonial setting. In the foreground, several figures are gathered around the central action, some of whom appear to be watching the scene unfold. To the left of the image, a group of people are mounted on horses, adding to the sense of drama and tension. The overall atmosphere of the image is one of intensity and emotion, capturing a pivotal moment in a story or event from ancient Roman history. The image also includes a caption in the bottom-right corner, which reads "Fogg Art Museum, Harvard University" and provides additional information about the artwork, including its accession number and date. This suggests that the image is a reproduction of a historical artwork, possibly created by an artist who was inspired by the culture and history of ancient Rome.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-18

The image is a black-and-white drawing depicting a scene from a historical event. The drawing is from the Fogg Art Museum, Harvard, with the accession number 1978.47. The drawing shows a group of people gathered in front of a building with columns. Some of them are standing on the steps of the building, while others are on the street. There is a man in the middle of the drawing who is holding a flag and appears to be speaking to the people gathered. Another man on the left side of the drawing is holding a sword and appears to be walking towards the man with the flag.

Created by amazon.nova-pro-v1:0 on 2025-02-18

The image depicts a sketch of a scene, possibly from a historical event. It shows a group of people in an outdoor setting, with a large building in the background. The people are dressed in period clothing, and some are holding flags or banners. There is a sense of movement and activity in the scene, with people walking and standing in different poses. The sketch is in black and white, and the details are somewhat blurred, giving it a sense of historical authenticity.