Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 24-34 |
| Gender | Female, 50.2% |
| Sad | 100% |
| Fear | 8% |
| Surprised | 6.4% |
| Disgusted | 0.8% |
| Angry | 0.5% |
| Confused | 0.3% |
| Calm | 0.3% |
| Happy | 0.2% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Adult | 99.3% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.9% |

Captions

Microsoft

Created on 2018-12-21

| Caption | Confidence |
|---|---|
| a close up of a book | 37.5% |
| close up of a book | 32.5% |
| a hand holding a book | 32.4% |

OpenAI GPT

Created by gpt-4 on 2024-12-13

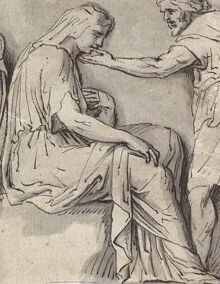

The image shows a series of characters drawn in classical attire, reminiscent of ancient Greco-Roman styles. The clothing drapes elegantly with folds and creases, suggesting a level of detail and attention to the depiction of fabric and form. Four figures stand or interact in different manners, possibly representing scenes from a myth or a story. One character appears to be holding an object that might be a shield, suggesting a possible connection to a narrative involving warriors or heroes. Another figure, adjacent to this character, seems to be looking at the object or engaging with it. The art style indicates this could be a study or a series of character sketches that highlight the artist's understanding of human anatomy, clothing, and possibly narrative context.

Created by gpt-4o-2024-05-13 on 2024-12-13

The image is a classical-style drawing, likely inspired by ancient relief sculptures. It depicts several figures engaged in various activities. From left to right, a standing figure holds something close to their chest, another figure appears to be comforting or assisting a seated figure, a figure stands with a shield, another holds what seems to be a musical instrument (possibly a tambourine or small drum), and two more figures hold shields, with one of them bending down. The figures are dressed in draped garments typical of classical antiquity. The drawing is rendered in a monochromatic style, highlighting the forms and movements of the figures.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-13

This image appears to depict a classical or mythological scene featuring several human figures. The figures are drawn in a classical, sculptural style and are wearing flowing robes and garments typical of ancient Greek or Roman art. The central figure appears to be a male figure, possibly a warrior or hero, surrounded by other figures that seem to be engaged in some kind of action or ritual. The overall composition and style suggest this is a work of high art, likely a drawing or engraving based on a classical or mythological theme.

Created by claude-3-5-sonnet-20241022 on 2024-12-13

This appears to be a classical or neoclassical sketch or drawing showing multiple figures in ancient Greek or Roman style dress. The artwork is composed of several scenes arranged horizontally, showing figures in flowing robes and tunics engaged in various interactions. The drawing style is characteristic of academic art, with careful attention to drapery and classical poses. The figures are depicted in a relief-like composition, and the medium appears to be ink or graphite on paper. The scenes seem to tell a sequential story or narrative, though the specific myth or historical event it depicts is not immediately clear. The artistry shows skilled draftsmanship in the rendering of the classical figures and their garments.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-13

The image depicts a drawing by Rembrandt van Rijn, titled 'The Death of Seneca' (1636). It is a study for a painting and showcases Rembrandt's skill in capturing the dramatic and emotional intensity of the scene. The drawing features six figures, including Seneca, who is shown in a state of despair and contemplation, surrounded by his family and friends. The figures are arranged in a dynamic composition, with Seneca at the center, and the other characters reacting to his death with a mix of shock, grief, and concern. The drawing is executed in Rembrandt's characteristic style, with bold lines, expressive poses, and a focus on capturing the emotional depth of the scene.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-13

This image is a sketch of six figures in classical attire, arranged in two rows of three. The figures are depicted in various poses, with some standing and others seated or leaning against each other. The figures on the left side of the image appear to be engaged in a conversation or interaction, with one figure gesturing towards another. The central figure is shown holding a shield and spear, while the figure on the right side of the image is depicted in a more dynamic pose, with their arm extended and their body leaning forward. The background of the image is a light gray color, which provides a subtle contrast to the darker shading used to define the figures. Overall, the image suggests a sense of movement and energy, as if the figures are about to spring into action. The style of the sketch is reminiscent of classical Greek or Roman art, with its emphasis on proportion, balance, and harmony. The use of simple lines and shading creates a sense of depth and dimensionality, drawing the viewer's eye into the scene.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-11

The image shows a black and white drawing of a series of figures. The figures are depicted in a classical style, with a focus on their poses and gestures. The drawing is divided into three sections, each showing a different scene.

In the first section, there is a woman sitting on a bench with her hand on her face, possibly crying. Next to her is a man with his hand on her shoulder, possibly comforting her. Another man is standing behind them, possibly looking at them.

In the second section, there is a man standing with his arms crossed, holding a shield and a spear. Next to him is another man holding a helmet and a shield. They are both looking in the same direction, possibly towards something or someone.

In the third section, there is a man sitting on the ground with his legs crossed, holding a shield. Next to him is another man standing, holding a shield and a spear. They are both looking in the same direction, possibly towards something or someone.

The drawing is done in a simple and elegant style, with a focus on the figures and their poses. The use of black and white adds to the classical feel of the image. Overall, the image appears to be a depiction of a scene from ancient Greek or Roman mythology, possibly related to a battle or a tragic event.

Created by amazon.nova-pro-v1:0 on 2025-01-11

The image is a drawing that is divided into three sections. Each section depicts a different scene. In the first section, a woman is sitting on a bench, and a man is standing in front of her. In the second section, a man is standing with a shield, and another man is standing beside him. In the third section, a man is sitting on the ground, and another man is standing beside him.