Machine Generated Data

Face analysis

Amazon

AWS Rekognition

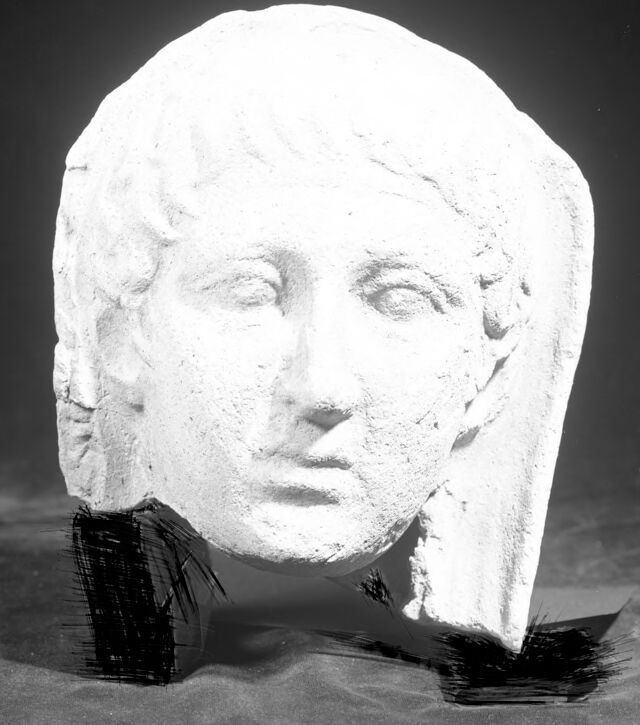

| Attribute | Value |
| --- | --- |
| Age | 26-36 |
| Gender | Male, 89.5% |
| Calm | 53.8% |
| Confused | 33.4% |
| Surprised | 10.8% |
| Fear | 5.9% |
| Sad | 3.7% |
| Disgusted | 1% |
| Angry | 0.5% |
| Happy | 0.2% |

Feature analysis

Amazon

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 84.1% |

Categories

Imagga

created on 2022-06-10

| Category | Confidence |
| --- | --- |
| paintings art | 97.7% |
| people portraits | 2.3% |

Captions

Microsoft

created by unknown on 2022-06-10

| Caption | Confidence |
| --- | --- |
| a vintage photo of a person | 73.3% |
| a vintage photo of a person | 71.6% |
| an old photo of a person | 71.5% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-04-29

a photograph of a head of a head of a woman with a large head of a head of

Created by general-english-image-caption-blip-2 on 2025-07-05

a black and white photo of a head on a stone

OpenAI GPT

Created by gpt-4 on 2025-02-18

The image displays a black and white photo of a sculpture with portions obscured by a white rectangle. The visible parts of the sculpture include a large, round shape that could resemble the upper deal of a head or object, and a distinct, slender form stretching downwards which might suggest a neck-like structure leading to a base or pedestal. The base appears to be standing on a surface that could be interpreted as sandy or grainy, which is consistent with the texture often involved in sculpture displays or studios. The photograph has high contrast with the background being very dark, making the sculpture stand out more prominently.

Created by gpt-4o-2024-05-13 on 2025-02-18

The image shows a stone sculpture or artifact with a smooth surface and distinct contours. The object appears to be set against a dark background, which contrasts with its light color. The lower portion of the sculpture rests on what seems to be a base or stand, and there are shadows and textures visible on the surrounding surface.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-26

This is a black and white photograph showing a classical-style sculpture head or bust made of what appears to be white marble or plaster. The head is shown against a dark background, creating a stark contrast. The sculpture appears to be resting on or emerging from some dark material or shadow at its base. The face has serene, classical features typical of Greco-Roman style sculpture, with closed or downcast eyes. The lighting in the photograph creates strong highlights on the white surface of the sculpture, emphasizing its three-dimensional form and texture.

Created by claude-3-haiku-20240307 on 2025-02-18

The image shows a white, sculptural bust or head partially submerged in water, creating a surreal and contemplative scene. The facial features appear delicate and expressive, with a serene and pensive expression. The contrast of the smooth, marble-like surface against the dark, textured surroundings creates a striking visual impact. The image evokes a sense of introspection and the transient nature of existence.

Created by claude-3-opus-20240229 on 2025-02-18

The image shows a black and white photograph of a sculpted human head made of white marble or stone, resting on a dark surface. The sculpture depicts the face of a woman looking slightly downward with her eyes closed. The facial features are detailed and realistic, conveying a calm, almost serene expression. Rough, dark brushstrokes are visible around the base of the sculpture, adding contrast and texture to the composition.

Created by claude-3-5-sonnet-20241022 on 2025-02-18

This is a black and white photograph of what appears to be a classical-style marble sculpture head or bust. The sculpture is white and shows serene facial features in a traditional Greco-Roman style. The head appears to be detached or broken off from its body and is resting on what looks like a dark support or base. The background is dark, creating a strong contrast with the bright white marble. The photograph has a stark, dramatic quality typical of art documentation or museum photography.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-15

The image depicts a white marble head of a woman, likely from an ancient Greek or Roman sculpture. The head is positioned on a black stand, which is placed on a dark surface. The background is a solid gray color.

The woman's face is rendered in exquisite detail, with a serene expression. Her hair is depicted as being pulled back, and her eyes are cast downward. The overall effect is one of quiet contemplation, as if the woman is lost in thought.

The use of white marble for the sculpture gives it a sense of elegance and refinement, while the dark background helps to emphasize the beauty of the woman's face. The image is likely intended to showcase the skill of the artist who created the sculpture, as well as the beauty of the subject.

Overall, the image presents a striking and thought-provoking portrait of a woman from ancient times, inviting the viewer to reflect on the beauty and mystery of the past.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-15

The image presents a black-and-white photograph of a white stone head sculpture, likely a fragment of a larger work. The sculpture features a face with a neutral expression, characterized by a straight mouth and slightly raised eyebrows. The hair is depicted in a style reminiscent of ancient Greek or Roman art, with short bangs and longer hair on the sides.

The sculpture is positioned on a dark surface, which appears to be a table or shelf. A black scribble effect is visible at the bottom of the image, suggesting that it may have been edited or manipulated in some way. The background of the image is a solid black, which helps to emphasize the sculpture and create a sense of depth.

Overall, the image suggests that the sculpture is an ancient artifact, possibly from the classical period, and is being displayed or studied in a museum or academic setting.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-18

The image is a black-and-white photograph of a bust sculpture of a woman's head. The sculpture is made of white material, possibly marble or stone, and has a serene and calm expression on her face. The woman's hair is styled in a way that covers her ears and falls down to her shoulders. The sculpture is placed on a flat surface, possibly a table or a shelf, and the background is dark, with no visible objects or distractions. The image is well-lit, with the light source coming from the left side, casting a shadow on the right side of the sculpture.

Created by amazon.nova-pro-v1:0 on 2025-02-18

The image is a black-and-white photograph of a sculpted head of a woman. The head is made of stone or plaster and has a smooth, matte finish. The woman's face is depicted in a serene and calm expression, with her eyes closed and her lips slightly parted. The sculpture is positioned on a flat surface, and the background is plain and uncluttered. The photograph is well-composed, with the head of the sculpture taking up most of the frame, and the lighting is even and soft, highlighting the details of the sculpture. Overall, the image conveys a sense of tranquility and beauty, with the sculpted head of the woman serving as the focal point.

Google Gemini

Created by gemini-2.0-flash on 2025-06-03

The image shows a black and white photograph of a white plaster or marble head sculpture, presumably of a woman. The head is set against a dark, out-of-focus background. The sculpture has a classical style, with a smooth, unblemished face. The hair is styled in soft waves around the face. The eyes are open but lack detail, giving the head a somewhat blank expression.

The sculpture's base is partially visible but obscured by what appears to be intentional black marks or brushstrokes, which add an element of abstraction or defacement to the image. The sculpture is lit from the front, casting a soft shadow on the surface beneath it. The overall tone of the photograph is one of aged beauty and artistic intervention.

Created by gemini-2.0-flash-lite on 2025-06-03

Certainly! Here's a description of the image:

Overall:

The image shows a classical bust of a human head, likely of a woman, set against a dark background. The bust is rendered in a pale, matte material, possibly plaster or stone, and appears to be damaged or weathered.

Details:

- The Head: The head is the central focus. It has classically styled features, including a smooth forehead, a slightly aquiline nose, and full lips. The hair is arranged in soft waves, suggesting a historical or artistic style.

- Damage: There's noticeable damage to the bust, with the bottom of the head having large parts missing. The material itself appears textured, likely due to the process of its creation or aging.

- Background: The background is dark, which helps to highlight the pale color and form of the bust.

- Conditioning: There are black strokes at the bottom of the sculpture, potentially from the original photo development or manipulation of the image.

Impression:

The image has a vintage, almost ghostly quality. The damage to the bust and the black marks suggest a historical artifact or a work of art that has seen age.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image depicts a sculpted head, likely made of stone, which appears to be an ancient artifact. The sculpture has a serene facial expression with closed eyes and a neutral mouth. The head is adorned with what looks like a head covering or hair, which is intricately detailed. The sculpture is broken at the neck, indicating it may be a fragment of a larger statue. The photograph is in black and white, adding to the antiquated feel of the image. The background is plain and dark, which helps to highlight the details of the sculpture. The overall style and craftsmanship suggest it could be from an ancient civilization, possibly Greek or Roman.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-06-25

The image shows a grayscale photograph of a stone head, likely a classical sculpture, set against a dark background. The head appears to be of a male figure, with features including a chiseled jawline, a straight nose, and a solemn expression. The hair is neatly parted and styled, and the eyes are looking slightly to the right of the viewer. The sculpture is positioned on what seems to be a reflective surface, creating a subtle shadow beneath it. The overall style of the image suggests it is a professional photograph, possibly taken in a museum or gallery setting.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-06-25

The image shows a black-and-white photograph of an ancient stone sculpture of a human head. The sculpture appears to be made of a light-colored material, possibly marble or limestone, and is displayed against a dark background. The head has a serene expression with closed eyes, suggesting it might be from a statue of a deity or a revered figure. The hair is styled in a detailed manner, with curls and waves framing the face. The sculpture is mounted on a dark pedestal, which contrasts with the light color of the stone. The photograph has some dark smudges or scratches, indicating it may be an older print or a photograph of an artifact with some wear and tear.