Machine Generated Data

Tags

Amazon

created on 2021-12-15

Clarifai

created on 2023-10-15

Imagga

created on 2021-12-15

| Tag | Score |
| --- | --- |
| freight car | 48.3 |
| car | 41.6 |
| percussion instrument | 35.9 |
| steel drum | 35.3 |
| musical instrument | 33.3 |
| wheeled vehicle | 29.2 |
| vehicle | 19.4 |
| architecture | 16.6 |
| building | 16.4 |
| business | 16.4 |
| man | 15.4 |
| technology | 14.8 |
| work | 14.1 |
| computer | 13.8 |
| light | 13.4 |
| modern | 12.6 |
| city | 12.5 |
| construction | 12 |
| laptop | 11.8 |
| office | 11.8 |
| people | 11.7 |
| job | 11.5 |
| working | 11.5 |
| industry | 11.1 |
| worker | 10.7 |
| digital | 10.5 |
| metal | 10.5 |
| device | 10.4 |
| window | 10.3 |
| barbershop | 9.9 |
| old | 9.7 |
| conveyance | 9.7 |
| urban | 9.6 |
| render | 9.5 |
| shop | 9.5 |
| equipment | 9.4 |
| fire | 9.4 |
| silhouette | 9.1 |
| structure | 9.1 |
| machine | 9 |
| black | 9 |
| steel | 8.8 |
| men | 8.6 |
| finance | 8.4 |
| industrial | 8.2 |
| businessman | 7.9 |
| male | 7.8 |
| labor | 7.8 |
| 3d | 7.7 |
| sky | 7.6 |
| hand | 7.6 |
| power | 7.5 |
| person | 7.4 |
| safety | 7.4 |
| room | 7.3 |
| music | 7.2 |
| interior | 7.1 |
| travel | 7 |
| indoors | 7 |

Google

created on 2021-12-15

| Tag | Score |
| --- | --- |
| Black | 89.5 |
| Hat | 89.1 |
| Fedora | 87.5 |
| Window | 86.1 |
| Black-and-white | 84 |
| Style | 83.9 |
| Font | 82.9 |
| Headgear | 81.9 |
| Art | 79.6 |
| Sun hat | 78.7 |
| Monochrome photography | 72.5 |
| Monochrome | 71.7 |
| Room | 68.8 |
| Cap | 67.3 |
| Display case | 66.2 |
| Visual arts | 65.1 |
| Personal protective equipment | 64.7 |
| Vintage clothing | 64.2 |
| Stock photography | 62.2 |
| Street | 61.8 |

| ||

Microsoft

created on 2021-12-15

| Tag | Score |
| --- | --- |
| text | 98.1 |
| outdoor | 88.6 |
| black and white | 75.3 |
| hat | 62.2 |
| cartoon | 56.8 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 4-12 |
| Gender | Female, 87.6% |
| Happy | 76.6% |
| Calm | 19.7% |
| Sad | 2% |
| Surprised | 0.5% |
| Confused | 0.3% |
| Disgusted | 0.3% |
| Angry | 0.3% |
| Fear | 0.3% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Score |
| --- | --- |
| interior objects | 60.7% |
| paintings art | 24.5% |
| streetview architecture | 8.6% |
| food drinks | 4.2% |

Captions

Microsoft

created on 2021-12-15

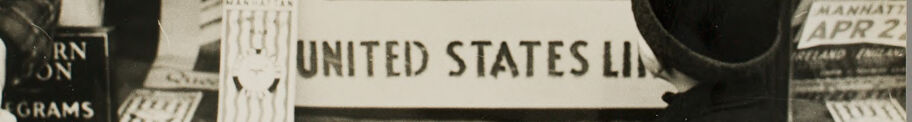

| Caption | Score |
| --- | --- |
| a person standing in front of a building | 49.9% |
| a person standing in front of a building | 48.2% |
| a group of people standing in front of a building | 42.6% |

Text analysis

Amazon

UNITED

RN

STATES

GRAMS

UNITED STATES LI

ON

APR

APR 2

LI

2

IRELAND

..

MANNATT

-

- and

EMAIL

and

EMAIL 44

Bur

UNIO Bur

44

UNIO

Camaro

MANNATT

APR 2

UNITED STATES LIN

RN

IRELAND ENAN

EGRAMS

MANNATT

APR

2

UNITED

STATES

LIN

RN

IRELAND

ENAN

EGRAMS