Machine Generated Data

Tags

Amazon

created on 2021-12-15

| Tag | Confidence |
| --- | --- |
| Person | 99.4 |
| Human | 99.4 |
| Person | 98.3 |
| Person | 97.8 |
| Person | 96.9 |
| Person | 96.8 |
| Person | 96 |
| Poster | 92.5 |
| Advertisement | 92.5 |
| Person | 92.1 |
| Suit | 87.7 |
| Coat | 87.7 |
| Overcoat | 87.7 |
| Clothing | 87.7 |
| Apparel | 87.7 |
| Person | 85 |
| Outdoors | 69.5 |
| Nature | 66.8 |
| People | 63.8 |
| Military Uniform | 58.6 |
| Military | 58.6 |
| Snow | 57.6 |
| Transportation | 56.7 |
| Vehicle | 56.5 |
| Archaeology | 55.5 |
| Prison | 55.4 |
| Building | 55.4 |

Clarifai

created on 2023-10-25

Imagga

created on 2021-12-15

| Tag | Confidence |
| --- | --- |
| old | 17.4 |
| ancient | 15.5 |
| people | 13.9 |
| culture | 13.7 |
| stone | 13.6 |
| black | 13.3 |
| hole | 13 |
| man | 11.7 |
| religion | 11.6 |
| travel | 11.3 |
| vintage | 10.7 |
| group | 10.5 |
| art | 10.1 |
| history | 9.8 |
| shop | 9.1 |
| person | 9.1 |
| sculpture | 8.7 |
| musical instrument | 8.7 |
| statue | 8.5 |
| tourism | 8.2 |
| work | 8.2 |
| retro | 8.2 |
| style | 8.2 |
| male | 7.9 |
| film | 7.8 |
| texture | 7.6 |
| human | 7.5 |
| religious | 7.5 |
| brown | 7.4 |
| body | 7.2 |
| earthenware | 7.1 |
| women | 7.1 |

Google

created on 2021-12-15

| Tag | Confidence |
| --- | --- |
| Photograph | 94.4 |
| Font | 83.7 |
| Photographic film | 83.1 |
| Suit | 74.6 |
| Snapshot | 74.3 |
| Negative | 64.6 |
| Rectangle | 62 |
| Art | 60.5 |
| Crew | 57.7 |
| History | 56.7 |
| Vintage clothing | 56.7 |
| Visual arts | 56.3 |
| Collectable | 56.3 |
| Paper product | 55.6 |
| Photo caption | 55.5 |
| Photographic paper | 55.1 |
| Advertising | 54.3 |
| Room | 53.4 |
| Photography | 53.1 |

Color Analysis

Face analysis

AWS Rekognition

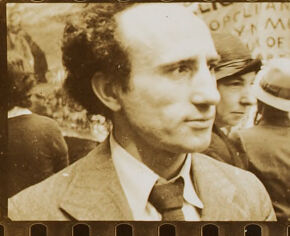

| Attribute | Value |
| --- | --- |
| Age | 23-35 |
| Gender | Male, 97.2% |
| Calm | 94.1% |
| Happy | 2% |
| Confused | 1.6% |
| Sad | 1% |
| Angry | 0.6% |
| Surprised | 0.4% |
| Fear | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 17-29 |
| Gender | Female, 50.6% |
| Calm | 83.1% |
| Sad | 13.6% |
| Happy | 1.3% |
| Angry | 0.8% |
| Fear | 0.7% |
| Confused | 0.2% |
| Surprised | 0.1% |
| Disgusted | 0.1% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 33 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 86.3% |
| interior objects | 12.3% |

Captions

Microsoft

created on 2021-12-15

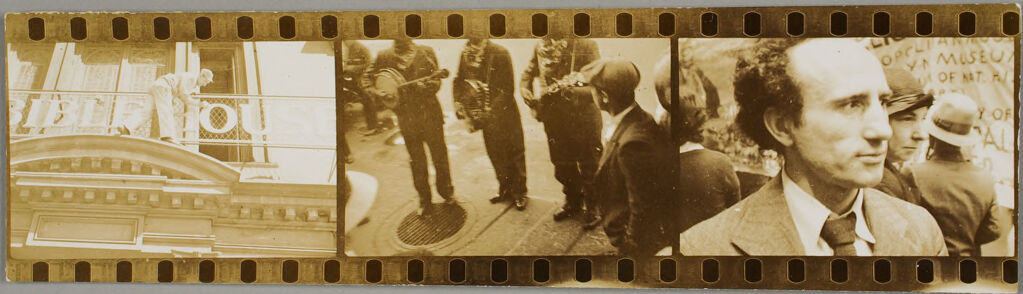

| Caption | Confidence |
| --- | --- |
| a group of people sitting at a train station | 28% |
| a group of people on a train | 27.9% |
| a group of people standing next to a train | 27.8% |

Text analysis

Amazon

OF

AL

Y OF

Y

of OF NAT R/

MUSEU

O

У MUSEU

У

of

NAT R/

BIBLE

OPPLIA

MIL

OPCLIA

VMUSEU

OF NAT A

Y OF

OPCLIA

VMUSEU

OF

NAT

A

Y